Bigger Crawl Budget

No More Wasted Crawl Budgets

Manage your own crawl budget before Google does.

With JavaScript-heavy websites, crawl budgets can be depleted in the blink of an eye. You’re allocated a limited budget on any given day, and the more dynamic your content is, the harder it is for bots to “read” compared with standard HTML sites.

If Googlebot struggles to read your content, some pages will be indexed with missing text, links, or images (or be left out entirely). This means you can’t compete with fully rendered pages.

Work Smarter with Resources

Use Your Crawl Budget Wisely

Gain a competitive advantage with a bigger crawl budget. Help Google to crawl your JavaScript pages.

Search engines allocate crawl resources according to the size of your website, its popularity in SERPs, content quality, industry niche, site health, and more, all relative to your competitors. Whatever your budget, bots will keep crawling until it runs out, even if that means spending all of it on pages that add no value to your bottom line. That’s where optimization comes into play.

Render and Optimize Your Crawl Budget

Maximize Your Crawl Potential

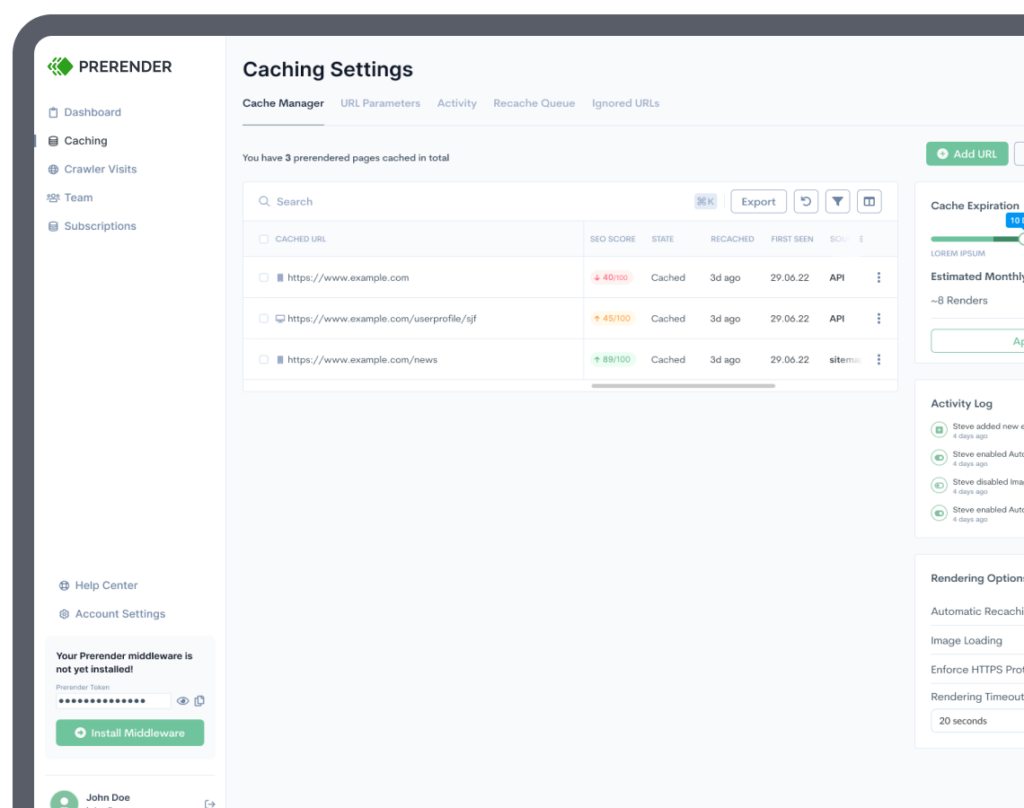

We make it possible to optimize your crawl budget so that JavaScript doesn’t eat it up on slow pages. Our prerendering solution renders your content as static HTML so that Google can see it, crawl it, and index it without errors.

Better JavaScript SEO Crawling with Prerender

Try it yourself and see the difference it makes!

Get started with 1,000 URLs free.

Bigger Crawl Budget FAQs

Learn about Prerender, technical SEO, and JavaScript on our dedicated FAQs page.

What is a Crawl Budget?

A crawl budget is the number of pages search engine bots will crawl on your site within a certain period of time. Once your budget is exhausted, bots stop crawling until the next crawl, so the remaining pages can’t be indexed in the meantime. If a page is half-indexed or unindexed after this process, that content is invisible to search queries. This blog will tell you more about optimizing your crawl budget for better results, including how to calculate your limit.
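As a rough illustration of why the budget matters, the sketch below estimates how many days a full crawl would take. It is a back-of-the-envelope calculation, not Google's formula: the function name `days_to_crawl` and the figures are hypothetical, and it assumes you already know your average number of pages crawled per day (for example, from the Crawl Stats report in Google Search Console).

```python
import math


def days_to_crawl(total_pages: int, pages_crawled_per_day: float) -> int:
    """Estimate the days needed to crawl every page once.

    Assumes a steady crawl rate, which real crawlers do not guarantee.
    """
    if pages_crawled_per_day <= 0:
        raise ValueError("pages_crawled_per_day must be positive")
    return math.ceil(total_pages / pages_crawled_per_day)


# Hypothetical site: 50,000 pages, about 2,000 of them crawled per day.
estimate = days_to_crawl(50_000, 2_000)
print(f"A full crawl would take roughly {estimate} days")
```

The higher this number, the longer new or updated pages may wait before bots reach them.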

Why is a Crawl Budget Important for SEO?

Googlebot draws on your crawl budget every time it crawls a page, and crawling comes before indexing. [Note: you cannot be indexed if your content isn’t crawled.] Once a page is indexed, search engines determine its ranking based on its technical SEO qualities and other related factors. If you do not get indexed due to JS SEO issues, your content won’t appear in SERPs. Optimizing your crawl budget therefore helps Google crawl, and then index, your JavaScript pages.

Can I Get a Bigger Crawl Budget?

You can’t ask search engines like Google to increase your crawl budget, but you can configure your website and use a web optimization tool like Prerender to spend your crawl budget more efficiently. You can also reduce the amount of rendering that Google needs to do on a JS-heavy web page before it can process the content. Below are some optimization ideas that you can implement on your site. Find the step-by-step guide here.

- Reduce 404 error codes

- Resolve 301-redirect chains

- Use robots.txt to limit crawling to selected pages
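The three ideas above can be sketched in a few lines of Python. This is a minimal illustration, not the step-by-step guide: the crawl log, the URLs, and the robots.txt rules are all made up for the example, and the helper names (`redirect_chain`, `audit`) are hypothetical. Only `urllib.robotparser` is a real standard-library module.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical crawl results: URL path -> (HTTP status, redirect target or None).
CRAWL_LOG = {
    "/old-pricing": (301, "/pricing-2023"),
    "/pricing-2023": (301, "/pricing"),
    "/pricing": (200, None),
    "/blog/deleted-post": (404, None),
    "/home": (200, None),
}


def redirect_chain(url, log, max_hops=10):
    """Follow 301/302 hops starting at url; return every URL visited."""
    chain = [url]
    while len(chain) <= max_hops:
        status, target = log.get(chain[-1], (404, None))
        # Stop on a non-redirect, a missing target, or a redirect loop.
        if status not in (301, 302) or target is None or target in chain:
            break
        chain.append(target)
    return chain


def audit(log):
    """Return (URLs answering 404, redirect chains longer than one hop)."""
    not_found = sorted(url for url, (status, _) in log.items() if status == 404)
    chains = []
    for url, (status, _) in log.items():
        if status in (301, 302):
            chain = redirect_chain(url, log)
            if len(chain) > 2:  # start URL plus two or more further URLs
                chains.append(chain)
    return not_found, chains


# Illustrative robots.txt that keeps bots away from low-value pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

not_found, chains = audit(CRAWL_LOG)
print("Fix or remove links to:", not_found)
print("Point these straight at the final URL:", chains)
print("Cart crawlable?", parser.can_fetch("Googlebot", "https://example.com/cart/checkout"))
```

In this made-up log, `/old-pricing` takes two hops to reach `/pricing`, so redirecting it directly to the final URL would save a request on every crawl.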

Can Prerender Optimize My Crawl Budget?

Prerender renders your JS pages in advance. So, when bots crawl your pages, we feed them the ready-made HTML version of your JS pages, saving you plenty of crawl budget that can be allocated to the web pages that affect your bottom line. Here is an in-depth explanation of the Prerender process (including visual help).
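The general idea of serving prerendered HTML to bots can be sketched as follows. This is a simplified illustration of the technique, not Prerender's actual implementation: the bot list, the function names (`is_crawler`, `select_response`), and the in-memory `snapshots` cache are all assumptions made for the example.

```python
# Illustrative, incomplete list of crawler user-agent tokens.
BOT_TOKENS = ("googlebot", "bingbot", "duckduckbot")

# What a regular browser would receive: an empty shell filled in by JavaScript.
APP_SHELL = '<div id="root"></div><script src="/app.js"></script>'


def is_crawler(user_agent: str) -> bool:
    """Return True if the user-agent string contains a known bot token."""
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def select_response(user_agent: str, path: str, snapshots: dict) -> tuple:
    """Pick the prerendered snapshot for bots, the JS app shell otherwise.

    snapshots is a hypothetical cache mapping a URL path to static HTML
    that was rendered ahead of time.
    """
    if is_crawler(user_agent) and path in snapshots:
        return "prerendered", snapshots[path]
    return "client-rendered", APP_SHELL


snapshots = {"/pricing": "<h1>Pricing</h1><p>Plans start here.</p>"}
googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

kind, html = select_response(googlebot, "/pricing", snapshots)
print(kind, html)
```

Because the bot receives finished HTML, it doesn’t have to spend time executing JavaScript before it can read the page.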

We Help Clients Everywhere in the World!

Prerender currently serves 2.7 billion web pages to crawlers.

Trusted by 65K businesses across the globe.