The world of web development has come a long way since the invention of the World Wide Web.

The technologies and techniques that engineers and web developers use to build websites seem to change dramatically and with increasing frequency. The way search engines like Google categorize and understand websites has had to become more sophisticated to keep up.

AJAX is one of the technologies that developers use to improve user experience and make websites that have more functionality. It is widely used by websites across the internet. Historically, AJAX has been prohibitively difficult for search engines to process and has thus posed a problem for website owners seeking to rank on Google.

Is AJAX SEO-friendly? How do you get Google and other search engines to correctly crawl and index your AJAX website? We’ll explore these questions and provide some recommendations.


What Is AJAX?

To understand why AJAX has been so problematic for SEO teams and website owners, it helps to understand what AJAX is and what it does.

AJAX stands for Asynchronous JavaScript and XML. It’s not a protocol or a single technology but a set of web development techniques that allows a browser to exchange data with a server over HTTP without having to refresh the page.

AJAX can send and receive information in:

- JSON

- XML

- HTML

- Text files

Traditional web applications send information between you and the server using synchronous, full-page HTTP requests. This means the browser has to stop, wait for the server’s response and reload the page whenever new content is needed.

AJAX, meanwhile, exchanges data with the server asynchronously in the background and retrieves new content after the page has loaded. Rather than requesting a whole new page through the address bar, AJAX uses the XMLHttpRequest object to update individual parts of the page while the page as a whole remains interactive.
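As a rough illustration of the kind of request described above, the sketch below fetches JSON in the background and hands it to a callback, leaving the rest of the page untouched. The function name `loadJson`, the `/api/tweets` endpoint and the `#feed` element in the usage comment are hypothetical, and the `XHR` parameter exists only so the function can be exercised outside a browser.

```javascript
// Fetch JSON from `url` without reloading the page, then pass it to `onData`.
// `XHR` defaults to the browser's XMLHttpRequest constructor.
function loadJson(url, onData, onError, XHR = globalThis.XMLHttpRequest) {
  const request = new XHR();
  request.open("GET", url, true); // third argument `true` means asynchronous

  request.onload = function () {
    if (request.status >= 200 && request.status < 300) {
      onData(JSON.parse(request.responseText));
    } else {
      onError(new Error("Request failed with status " + request.status));
    }
  };
  request.onerror = function () {
    onError(new Error("Network error"));
  };

  request.send();
  return request;
}

// Hypothetical browser usage: update only the feed, not the whole page.
// loadJson("/api/tweets?page=2", function (tweets) {
//   document.querySelector("#feed").textContent = tweets.length + " new tweets";
// }, console.error);
```

Only the element that receives the new data changes; scroll position, form input and everything else on the page stay as they were.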

Think about the way a Twitter feed works. You follow any number of people who likely tweet multiple times a day. When new tweets arrive, a “Load More Tweets” button appears at the top or the bottom of your feed.

Imagine having to hit the “refresh” button in your browser to update your Twitter feed, rather than just hitting the button on the page itself and having it update instantly. This would be frustrating, time-consuming and needlessly tiresome.

These are the types of problems that AJAX solves. It has other similar applications as well. It makes possible the collapsible information boxes you see on many modern websites, as well as the “load more” functionality seen at the bottom of blog articles. The modern internet would be very different without it.

That said, AJAX comes with its pitfalls.

Is AJAX SEO-Friendly?

Can Google crawl AJAX content? The short answer is yes. The longer answer is yes, but it’s a little more complicated.

Single-page web applications that use AJAX frameworks have historically been very problematic from an SEO standpoint, causing problems such as:

- Crawling issues: Important content was hidden behind unparsed JavaScript which only rendered on the client side, meaning Google would essentially just see a blank screen

- Broken website navigation and navbar issues

- Cloaking: Webmasters might unintentionally create content for the user that’s different from what the web crawlers see, resulting in ranking penalization

For years, Google advised webmasters to use the AJAX crawling scheme, which it proposed in 2009, to signal that a website had AJAX content. The scheme relied on the _escaped_fragment_ URL parameter. This parameter told Google to fetch a pre-rendered version of the web page made of static, machine-readable HTML that it could parse and index. In effect, the server sent the web crawler to a different page than the one available in the source code, similar to how dynamic rendering works today.

Then, things changed. In 2015, Google announced that it was now generally able to crawl, render and parse JavaScript, making the AJAX crawling scheme obsolete. In the years that followed, Google began recommending dynamic rendering as a workaround for websites whose JavaScript content still wasn’t being indexed reliably.

SEO Problems That Can Occur With AJAX

Google might claim that it’s able to crawl and parse AJAX websites, yet it’s risky to just take its word for it and leave your website’s organic traffic up to chance. Even though Google can usually index dynamic AJAX content, it’s not always that simple.

Some of the things that can go wrong include:

Buried HTML

If important content is buried beneath AJAX JavaScript, the crawler might have more difficulty accessing it. This can delay the crawl, render, index and ranking process by a week or more.

To make sure your content ranks on Google, keep your important content in the HTML so it can be indexed by Google and other search engines, or prerender it so that Google can easily access it.
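One common way to serve prerendered content is to look at the request’s User-Agent header and send crawlers a static HTML snapshot while regular visitors get the JavaScript application. The following is a minimal sketch of that decision only. The names `isCrawler` and `chooseResponse` are hypothetical, and the token list is illustrative rather than complete.

```javascript
// Illustrative, incomplete list of substrings found in crawler user agents.
const CRAWLER_TOKENS = ["googlebot", "bingbot", "duckduckbot", "baiduspider", "yandex"];

// Return true when the User-Agent string looks like a search engine crawler.
function isCrawler(userAgent) {
  const ua = String(userAgent || "").toLowerCase();
  return CRAWLER_TOKENS.some((token) => ua.includes(token));
}

// Pick the static snapshot for crawlers and the AJAX app shell for everyone else.
function chooseResponse(userAgent, prerenderedHtml, appShellHtml) {
  return isCrawler(userAgent) ? prerenderedHtml : appShellHtml;
}
```

For this to stay clear of cloaking, the snapshot needs to contain the same content that users end up seeing once the JavaScript has run.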

Missing Links

Google uses your website’s internal links to understand how your pages relate to one another, and your external links as evidence that your content is backed by authoritative, credible and trustworthy sources.

For this reason, it’s very important that all your links be readable and not buried under AJAX JavaScript.
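To get a feel for which links are readable before any JavaScript runs, you can scan the raw HTML for real anchor tags. The sketch below is a rough approximation that uses a regular expression rather than a full HTML parser, so treat it as a quick check and not as a model of how Googlebot works. The function name and the sample markup are made up for illustration.

```javascript
// Collect the href values of <a> tags found in a raw HTML string.
function extractCrawlableLinks(html) {
  const anchorPattern = /<a\b[^>]*?\bhref\s*=\s*["']([^"']+)["']/gi;
  return Array.from(html.matchAll(anchorPattern), (match) => match[1]);
}

const sample = `
  <a href="/pricing">Pricing</a>
  <span onclick="loadPage('/blog')">Blog</span>
  <a href="/docs">Docs</a>
`;

// Finds /pricing and /docs, but not the onclick-only /blog "link".
console.log(extractCrawlableLinks(sample));
```

The `span` works for a user who clicks it, but it exposes no URL in the markup for a crawler to follow.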

How AJAX Impacts SEO

So does that mean you don’t need to worry about whether Google can crawl your AJAX website? Not necessarily.

While it’s true that the AJAX crawling scheme proposed in 2009 is now obsolete, we only have Google’s assurances that its web crawlers can now crawl and parse JavaScript websites.

If we take a look at the exact wording of Google’s deprecation of the AJAX crawling scheme, it says:

“… as long as you’re not blocking Googlebot from crawling your JavaScript or CSS files, we are generally able to render and understand your web pages like modern browsers.”

The operative word here is generally. This is essentially Google’s way of hedging its bets, shrugging its shoulders when it comes to AJAX websites, and saying “it’s not our problem.”

So, while you no longer need to use special workarounds to make sure that Google can crawl your website, it’s still in your best interest as a website owner to ensure that Google can crawl your website.

So, what are the rules now?

For one thing, Google now advocates the use of progressive enhancement, a philosophy of web development that emphasizes the importance of the content itself before anything else.

One way you can adhere to these standards is by using the History API with the pushState() function, which changes the path of the URL that appears in the client-side address bar.

Using pushState() gives you the speed and performance benefits of AJAX while keeping the page crawlable. The URL accurately reflects the “real” location of the web page, giving crawlers a more accurate picture of the content.
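A minimal sketch of this pattern might look like the following. The `loadContent` callback stands in for whatever AJAX call your application uses to fetch and render a page, and the function names are hypothetical. The `historyApi` and `target` parameters default to the browser’s own `history` and `window` objects and exist only so the functions can be tested outside a browser.

```javascript
// Load the content for `path` via AJAX, then give it a real URL.
function navigateTo(path, loadContent, historyApi = globalThis.history) {
  loadContent(path);
  // pushState(state, unused, url) changes the address bar without a page reload.
  historyApi.pushState({ path: path }, "", path);
}

// Re-render the right content when the user presses Back or Forward.
function handleBackAndForward(loadContent, target = globalThis.window) {
  target.addEventListener("popstate", function (event) {
    if (event.state && event.state.path) {
      loadContent(event.state.path);
    }
  });
}

// Hypothetical browser usage:
// handleBackAndForward(renderPage);
// navigateTo("/products/42", renderPage);
```

For crawlers to benefit, the server also has to return meaningful content when a URL such as `/products/42` is requested directly.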

Another convention you may still run into is #!, or “hashbang” markup, which was part of the AJAX crawling scheme.

Under that scheme, Google looked for #! in a URL to identify dynamic pages and treat them differently. It took everything after the hashbang and passed it to the website as the _escaped_fragment_ URL parameter, then requested the static version of the page that it could read, index and rank. Since the scheme is now obsolete, new websites should rely on pushState() URLs rather than hashbangs.
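The hashbang mapping described above can be sketched in a few lines. This is a simplified illustration of the now-deprecated AJAX crawling scheme, not Google’s implementation. The function name is hypothetical, and the standard `URL` class applies stricter percent-encoding to the fragment than the original scheme required.

```javascript
// Convert a hashbang URL into the URL a crawler would have requested
// under the AJAX crawling scheme.
function toEscapedFragmentUrl(prettyUrl) {
  const url = new URL(prettyUrl);
  if (!url.hash.startsWith("#!")) {
    return prettyUrl; // not a hashbang URL, nothing to rewrite
  }
  const fragment = url.hash.slice(2); // everything after "#!"
  url.hash = "";
  url.searchParams.append("_escaped_fragment_", fragment);
  return url.toString();
}

// The fragment "/products/42" moves into the _escaped_fragment_ query parameter.
console.log(toEscapedFragmentUrl("https://example.com/#!/products/42"));
```

The server was then expected to answer that rewritten URL with a static HTML snapshot of the page.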

How You Can Make Your AJAX Website Crawlable

It’s completely possible to optimize a JavaScript website that not only displays correctly but also is indexed and ranked by Google without any missing content, crawl errors or any other problems that can affect your search rankings.

Some things to look out for include:

Optimize URL Structure

It’s strongly advised that you use the History API’s pushState() function in place of the _escaped_fragment_ scheme. The function updates the URL in the address bar without reloading the page, so each piece of AJAX-loaded content can have its own crawlable URL.

A clean URL means using short phrases and text in a way that makes it easy to tell what the page is about.

Optimize Website Speed

When a browser builds the DOM on the client side, heavy JavaScript can inflate the page with bloated markup, which bogs down your page speed and makes it harder for Googlebot to crawl your content in one pass. Make sure you remove any render-blocking resources so that Google can parse your CSS and scripts efficiently.

AJAX Websites Can Work for You

AJAX websites have been a headache for website owners who want to provide their users with the best possible user experience. Most of the issues that have historically been problems seem to have been resolved, but AJAX can still be cumbersome for your website’s maintenance and detrimental to your website’s SEO health.

Use the History API function so you can have the performance benefits and user experience perks of AJAX while still ensuring your website can reach top-ranking positions in Google search results.

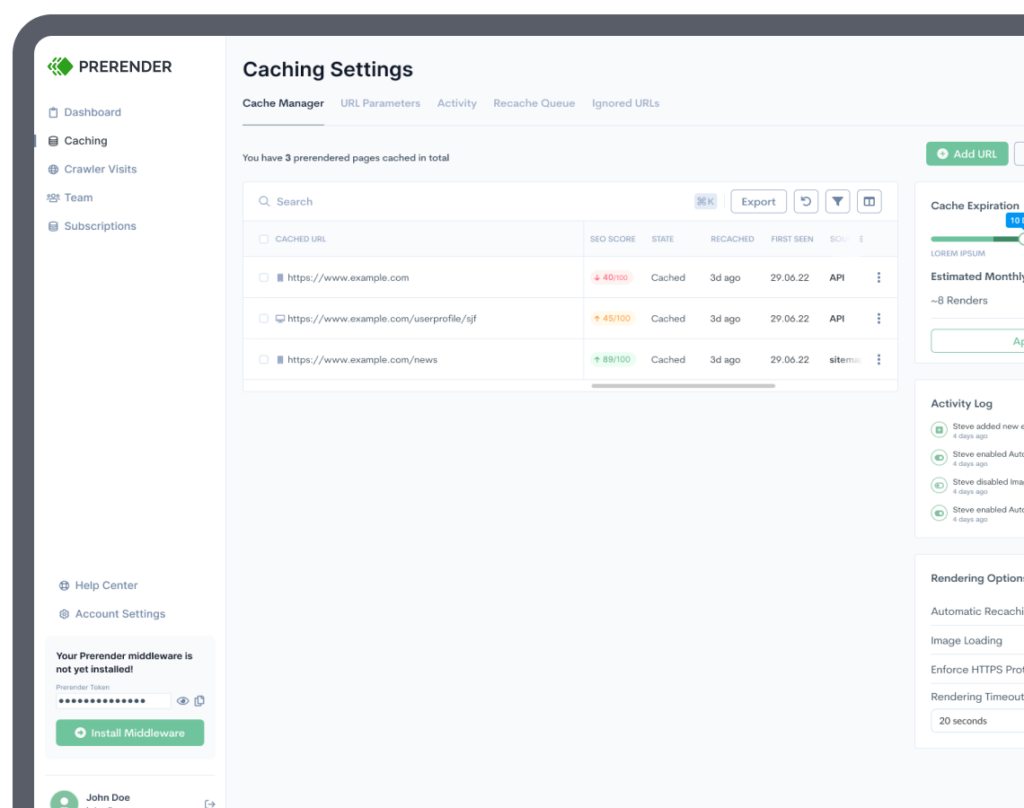

Better yet, use Prerender to make sure your AJAX website is crawled seamlessly every time Google visits your website.

Sign up for a demo today, and get your AJAX website ranking on the SERPs without any hassle or headaches.