If you’ve read about SEO, you’ve probably come across “black hat” techniques like cloaking. And if you’re considering dynamic rendering for a JavaScript website, you may have wondered, “Is dynamic rendering cloaking?” From the outside, it’s easy to assume the two are the same shady strategy.

The short answer is no. Dynamic rendering is not cloaking. We’ll explain why by comparing how cloaking and dynamic rendering work. We’ll also walk through Prerender’s dynamic rendering process and its impact on crawling, indexing, and the overall SEO performance of your JavaScript-based websites.

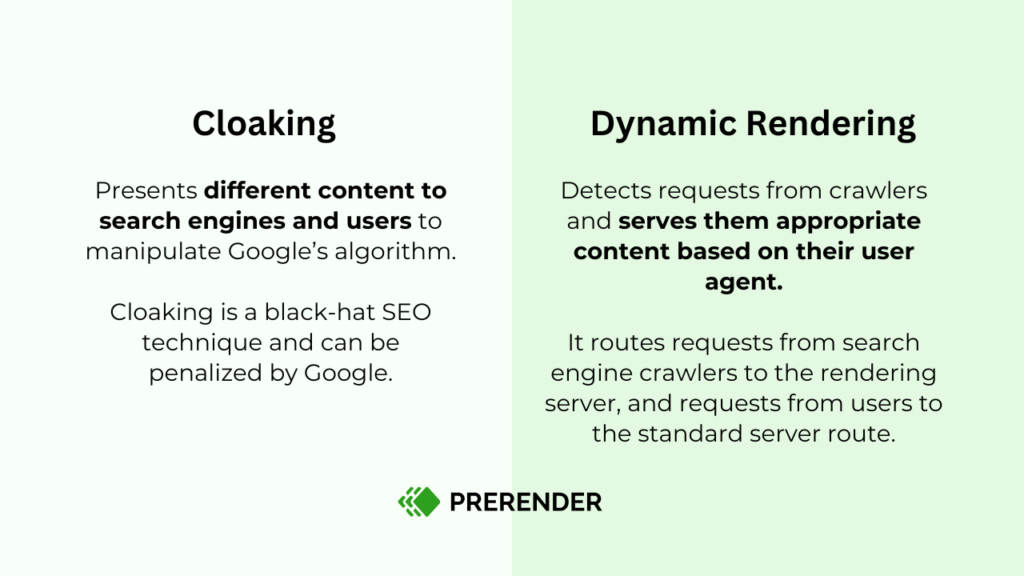

What is Cloaking?

Cloaking is the practice of presenting different content to search engines and users in order to manipulate Google’s algorithm. The goal of cloaking is to deceive Google into ranking the page higher (and to mislead users). For instance, a website presents a travel deal to Google but a black market liquor ecommerce site to users.

As stated in Google’s guidelines, this SEO black hat technique is considered spam and violates Google policy. This practice can result in severe penalties, such as deindexing of affected pages or demotion/removal of the entire website.


What is Dynamic Rendering?

Dynamic rendering, on the other hand, refers to the process of detecting who is requesting a page and routing the request based on the user agent that makes it.

- If the requests come from human users, or from crawlers that handle JavaScript without issues, they are served normally (through your website’s server route).

- If the requests come from search engine crawlers that don’t support JavaScript, or that need the JavaScript content rendered for them, they are routed to the rendering server.

Source: Google’s Dynamic Rendering Documentation

This means that dynamic rendering serves the same content to both search engines and users. Dynamic rendering is, therefore, NOT cloaking. It is a legitimate JavaScript SEO technique that aims to improve the crawling and indexing performance of JS pages.
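To make the routing rule concrete, here is a minimal sketch of user-agent detection in Python. The crawler list, the function names, and the `"renderer"`/`"origin"` labels are assumptions made for illustration. They are not Prerender’s or Google’s actual implementation, and a real setup would rely on a maintained crawler list.

```python
import re

# Hypothetical, non-exhaustive list of crawler tokens (an assumption for
# this sketch; real deployments use a maintained list).
CRAWLER_PATTERN = re.compile(
    r"googlebot|bingbot|yandex|baiduspider|duckduckbot", re.IGNORECASE
)


def is_crawler(user_agent):
    """Return True when the User-Agent header looks like a search crawler."""
    return bool(CRAWLER_PATTERN.search(user_agent or ""))


def choose_route(user_agent):
    """Send crawlers to the rendering server and everyone else to the origin.

    Both routes are expected to end up serving the same content; only the
    way the page is rendered differs.
    """
    return "renderer" if is_crawler(user_agent) else "origin"
```

The important detail is that the user agent only decides where the page gets rendered, not what the page says.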

Find out why JavaScript websites are prone to indexing issues and why relying only on Google won’t be enough if you own a large and dynamic content website.

Prerender’s Dynamic Rendering Explained (It’s Not Cloaking)

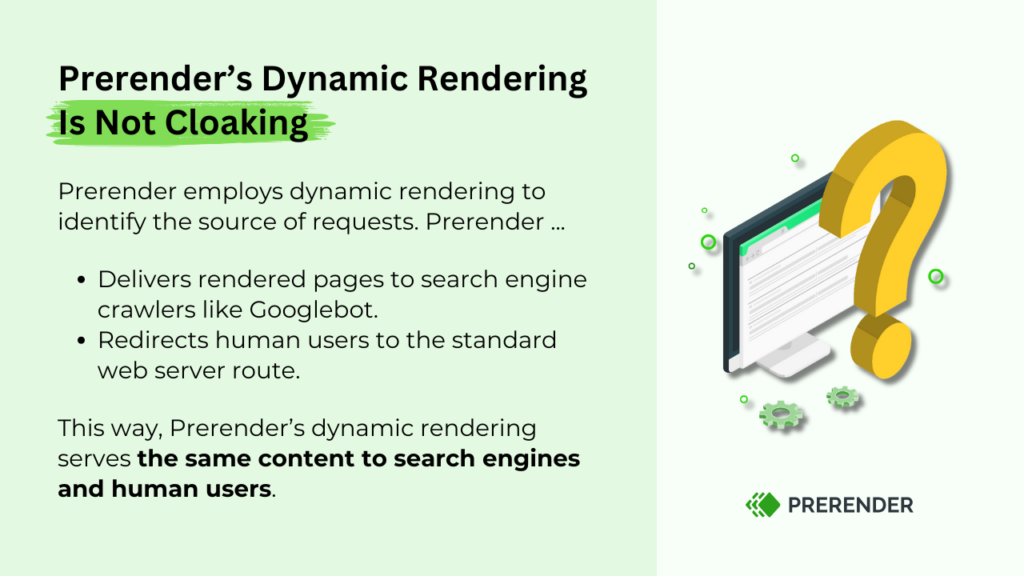

Now that you know dynamic rendering is not cloaking, let’s explore the dynamic rendering solution offered by Prerender.

Prerender renders JavaScript-heavy content ahead of time (it pre-renders JS content into its HTML version), caches the result, and serves it whenever a request is made. This is where the dynamic rendering solution plays a part.

Using the dynamic rendering technique, Prerender can detect who is requesting the page:

- If Googlebot requests the page, Prerender serves it the pre-rendered HTML version.

- If a human user requests the page, Prerender routes the request to your website’s normal server.

This means that with Prerender’s dynamic rendering, you serve the same content to search engines and users, but with extra benefits. Since Prerender has already rendered the content into static HTML, search engines don’t need to render it again. They can index your pages more quickly and accurately, allowing your JS-based content to show up on the SERPs in days, not months!

Watch this video, or read this article, to learn more about Prerender and all the SEO benefits you can get.

How to Avoid Undeserved Cloaking Penalties

However, because of the way dynamic rendering works, several things can go wrong and cause your website to breach the cloaking guidelines unintentionally.

Here are some of the details you’ll need to keep in mind to stay in the clear:

1. Check for Hacks

A very common tactic used by evil-doers is hacking websites with decent traffic and then cloaking their pages to send traffic to their main websites.

If you’ve experienced a hack recently or you’re not sure why you’re receiving a cloaking penalty, this may be one of the reasons.

Check your website for any weird redirects and do a full audit of your backend to ensure no leftover scripts are cloaking your users. Here’s how to do it using Google Search Console:

- Navigate to Google Search Console > Crawl > Fetch as Google (replaced by the URL Inspection tool in newer versions of Search Console) and compare the content your website shows in a browser against the version fetched by Google.

- Check for weird redirects sending users to unwanted, broken, or sneaky destinations.

- Use a tool like SiteChecker to scan for cloaked pages.

- If you’re using Prerender, check for any sign of partially rendered pages in the crawl or recache stats dashboard.

Timeout errors are highlighted in red, but you should check any page with a response time close to or higher than 20 seconds.
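If you want to automate the browser-versus-crawler comparison, the idea can be sketched as follows. This is only an illustration: it assumes you have already saved both HTML versions as strings, and `visible_text` and `content_similarity` are hypothetical helper names rather than part of any tool mentioned here.

```python
from difflib import SequenceMatcher
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes and skips the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    """Return the text content of an HTML string, joined by single spaces."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def content_similarity(user_html, crawler_html):
    """Return a 0..1 ratio of how similar the two versions' text is."""
    return SequenceMatcher(
        None, visible_text(user_html), visible_text(crawler_html)
    ).ratio()
```

A ratio close to 1.0 suggests both audiences see the same text. A much lower ratio is a signal to inspect the page by hand, not proof of cloaking.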

Not a fan of Google Search Console? Try out these SEO auditing tools instead.

It’s also a common practice for hackers to use cloaking to make it harder for you to detect the hack. So it’s a good habit to check for cloaking processes regularly.

2. Check for Hidden Text

Your JavaScript could be changing some of your text attributes and creating hidden text issues without you noticing. These elements are still picked up by crawlers and can be treated as keyword-stuffing attempts, which can potentially turn into ranking penalties.

It can also be considered cloaking if enough hidden elements make your dynamically rendered page different from what users can see.

The good news is that you can use a tool like Screaming Frog to look for hidden text on your pages.
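For a rough idea of what such a check looks for, here is a simplified sketch. It assumes reasonably well-formed HTML and only inspects inline `style` attributes and the `hidden` attribute, so it will miss text hidden through external CSS or through styles applied later by JavaScript. `find_hidden_text` is a hypothetical helper, not how Screaming Frog works internally.

```python
import re
from html.parser import HTMLParser

# Heuristic inline-style patterns that commonly hide an element.
HIDDEN_STYLE = re.compile(
    r"display\s*:\s*none|visibility\s*:\s*hidden|font-size\s*:\s*0(?![\d.])",
    re.IGNORECASE,
)
# Void elements never get a closing tag, so they are kept off the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class _HiddenTextFinder(HTMLParser):
    def __init__(self):
        super().__init__()
        self._stack = []  # one flag per open element: hidden (or inside hidden)?
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        attr_map = dict(attrs)
        style = attr_map.get("style") or ""
        hidden_here = "hidden" in attr_map or bool(HIDDEN_STYLE.search(style))
        parent_hidden = self._stack[-1] if self._stack else False
        self._stack.append(parent_hidden or hidden_here)

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._stack:
            self._stack.pop()

    def handle_data(self, data):
        if self._stack and self._stack[-1] and data.strip():
            self.hidden_text.append(data.strip())


def find_hidden_text(html):
    """Return text snippets that sit inside elements hidden via inline rules."""
    finder = _HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_text
```

Not every hit is a problem, since menus and modals are often hidden on purpose. The snippets to worry about are keyword-heavy text that users can never reveal.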

3. Partially Rendered Pages

Cloaking is about the differences between what search engines and users see. The problem with partially rendered pages is that some of the content will be missing, which may make Google think you’re trying to trick it. When using Prerender, there are two common reasons why this happens:

- Page rendering times out: by default, Prerender has a 20-second render timeout, which can be configured to be longer. If your page fails to render completely within that time, Prerender will save the page in its current state, resulting in a partially rendered page.

- Page errors: things like your CDN blocking all non-standard requests (and therefore blocking Prerender) or having geo-blocking in place will cause rendering problems, as Prerender won’t be able to access the files from your server.
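If you export your render statistics, flagging pages that come close to the timeout can be sketched like this. The `RenderRecord` shape and the 90% margin are assumptions made for illustration. The 20-second default comes from the text above, and none of this reflects Prerender’s actual export format.

```python
from dataclasses import dataclass

RENDER_TIMEOUT_SECONDS = 20.0  # default render timeout mentioned above


@dataclass
class RenderRecord:
    """Hypothetical shape of one row of exported render statistics."""
    url: str
    response_seconds: float


def flag_suspect_renders(records, timeout=RENDER_TIMEOUT_SECONDS, margin=0.9):
    """Return URLs whose render time reached `margin` of the timeout or more.

    These pages are candidates for a partially rendered snapshot and are
    worth checking by hand.
    """
    threshold = timeout * margin
    return [r.url for r in records if r.response_seconds >= threshold]
```

For example, with the default settings, a page that took 18.5 seconds would be flagged while one that took 2 seconds would not.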

Check our documentation for a complete walkthrough of what causes empty or partially rendered pages when using Prerender.

4. Uncached Changes

Keeping in mind the main distinction between dynamic rendering and cloaking, there’s a clear issue that could come up with constantly changing pages.

Because of the way Prerender works, it’ll only fetch your page the first time it’s requested by a search crawler after installing the middleware. This first rendering process will take a couple of seconds, creating a high response time.

From there on, Prerender will use the cached version of the page to avoid hurting your site’s performance.

However, what happens when you change the page partially or even completely? Google will receive an old version of your page, and the mismatch could be interpreted as cloaking.

To avoid this issue, it’s important to set a recache interval using Prerender’s recaching functionality. Once the interval has passed, Prerender will automatically fetch all necessary elements from your server and create a new snapshot of the updated content.
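The caching behavior described in this section can be sketched as a small cache with a maximum age. This is a simplified illustration, not Prerender’s implementation: `SnapshotCache`, `render`, and `max_age_seconds` are hypothetical names, and the sketch refreshes a stale snapshot lazily on the next request, whereas a recaching service may refresh on a schedule.

```python
import time


class SnapshotCache:
    """Serve cached snapshots and re-render once they exceed a maximum age."""

    def __init__(self, render, max_age_seconds, clock=time.monotonic):
        self._render = render          # callable: url -> rendered HTML string
        self._max_age = max_age_seconds
        self._clock = clock            # injectable so the sketch is testable
        self._entries = {}             # url -> (rendered_at, html)

    def get(self, url):
        now = self._clock()
        entry = self._entries.get(url)
        if entry is None or now - entry[0] >= self._max_age:
            # First request, or the snapshot is stale: render a fresh copy.
            html = self._render(url)
            self._entries[url] = (now, html)
            return html
        return entry[1]
```

The shorter the maximum age, the smaller the window in which crawlers can receive outdated content, at the cost of more rendering work.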

Improve Your JS SEO Ethically with Dynamic Rendering

Cloaking issues can be a real pain if they go unresolved. After your website gets banned, there’s pretty much nothing you can do about it – even if you put everything in order, there’s no guarantee that Google will take the penalty off.

If you want to improve the SEO performance of your JavaScript pages, consider implementing a dynamic rendering technique like Prerender. Prerender’s dynamic rendering solution is legitimate, white-hat SEO that is approved by Google and other search engines.

Try out Prerender for free for 14 days. Sign up now!

All You Need to Know About Cloaking vs. Dynamic Rendering

Answering your questions about cloaking, dynamic rendering for JavaScript, and Prerender.

1. Is Dynamic Rendering the Same as Cloaking?

No, dynamic rendering is not cloaking. Cloaking refers to serving different content to search engines and human users. Dynamic rendering, in contrast, refers to the ability to detect and redirect page requests based on the user agent requesting them. It serves the same content to both Google and users.

2. Does Prerender Use a Cloaking Technique?

No, Prerender doesn’t use a cloaking technique. It only renders JavaScript content ahead of time and serves the same content to both users and search engines. Prerender’s dynamic rendering solution detects who makes the requests, serves the pre-rendered content to search engines, and redirects human users’ requests to the normal website route. Dive deeper into how Prerender renders JS websites.

3. Will I Get Penalized or Deindexed for Using Prerender?

No, Prerender is a legitimate, white-hat SEO technique. Prerender renders your JS content into a ready-to-index version (HTML). This rendering would otherwise be carried out by search engine crawlers, and it requires a lot of resources and time. By taking this burden off the search engines, Prerender accelerates the crawling and indexing process of your JS website. You can achieve up to 300x better crawling and 260% faster indexing. Learn more about how Prerender works.