Missed Content

No More Missed Content in Crawls

Serve rendered content that’s ready to rank.

Missing content is often the result of JavaScript crawlability issues. It leads to one of two problems: pages that are never crawled or indexed because resources were exhausted, or half-crawled pages whose information never fully loads. Half-crawled pages will compromise your search performance, and you don’t want that.

Become a Crawl Priority

Content Visibility Takes Priority

Google crawls content every four to thirty days. Make sure it’s yours.

Priority goes to websites that follow technical SEO rules and deliver the most in-depth, helpful content. For yours to be one of them, your content needs to meet customer search intent, but above all, it needs to be indexed first.

Ten to one, you’re competing with static HTML sites vying for the same attention. So, if your pages have missing content, kiss your rankings goodbye.

Indexed to Rank

Content That's Ready to Go

Gone are the days when SEO was fairly simple, and websites had built-in HTML that was easy for search engines to process. Prerender makes it possible to create immersive website experiences without compromising SEO.

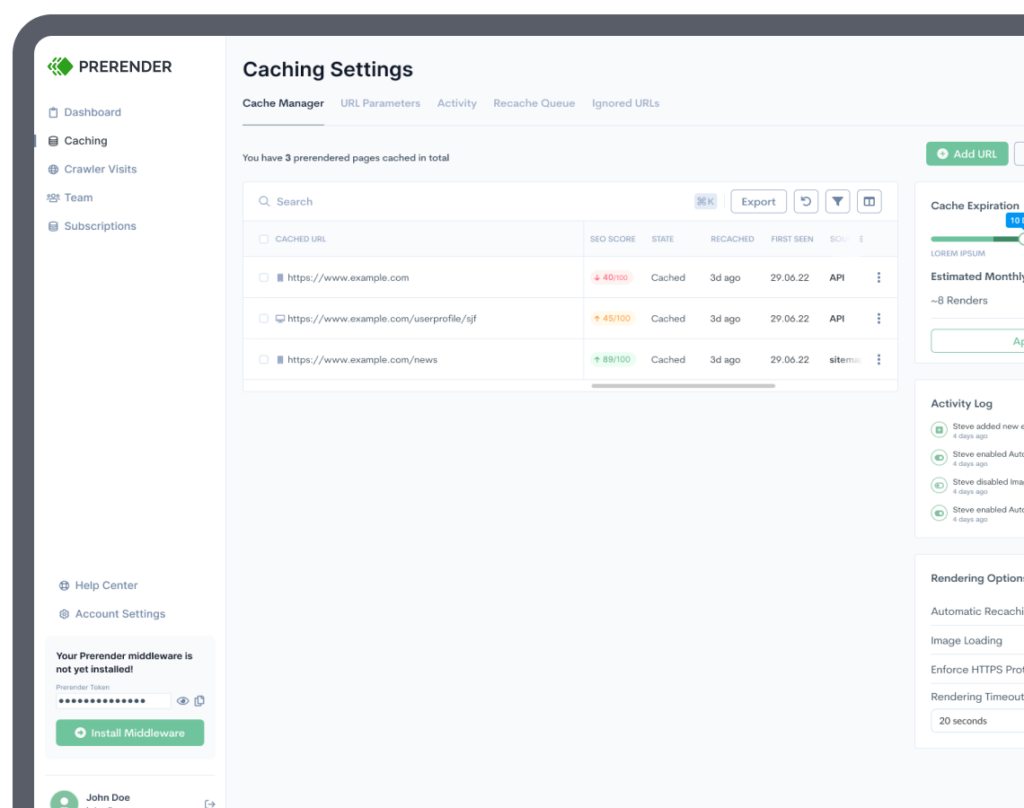

To avoid failed or incomplete crawls, Prerender will create a snapshot of your content and show it to Google’s crawlers as static content. This will ensure your text, links, and images are correctly crawled and indexed every time.

Better Content Performance Starts Here!

You’ll never have to worry about missed or half-crawled pages.

Get started with 1,000 URLs free.

No More Missing Content FAQs

Learn about Prerender, technical SEO, and JavaScript on our dedicated FAQs page.

Does JavaScript Help SEO?

No, JavaScript can harm SEO if you don’t follow SEO best practices.

Search engines need a bigger crawl budget to index JavaScript pages than HTML-based content. In fact, some search engines and crawlers have only limited ability to render JS, or can’t render it at all.

Processing JS pages is resource intensive, so many of your SEO elements (e.g., links, metadata, and canonical tags) are likely to be only partially indexed or not indexed at all. Consequently, your content may show up on Google SERPs weeks later, with incomplete SEO and a low rank.
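One rough way to see what a crawler gets before any JavaScript runs is to scan the raw HTML for the SEO elements mentioned above. The sketch below uses only Python's standard library; the class and function names (`SeoElementScanner`, `scan_raw_html`) are hypothetical and not part of Prerender, and the check is a simplification of what a real crawler does.

```python
from html.parser import HTMLParser


class SeoElementScanner(HTMLParser):
    """Records which basic SEO elements appear in un-rendered HTML."""

    def __init__(self):
        super().__init__()
        self.has_title = False
        self.has_meta_description = False
        self.has_canonical = False
        self.link_count = 0

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        attr = dict(attrs)
        if tag == "title":
            self.has_title = True
        elif tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.has_meta_description = True
        elif tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            self.has_canonical = True
        elif tag == "a" and attr.get("href"):
            # Only anchors with an href count as crawlable links here.
            self.link_count += 1


def scan_raw_html(html: str) -> dict:
    """Summarize the SEO elements found in the HTML as delivered, with no JS executed."""
    scanner = SeoElementScanner()
    scanner.feed(html)
    return {
        "title": scanner.has_title,
        "meta_description": scanner.has_meta_description,
        "canonical": scanner.has_canonical,
        "links": scanner.link_count,
    }


# A typical JS app shell: the content is injected later by a script.
app_shell = '<html><head><title>App</title></head><body><div id="root"></div></body></html>'
print(scan_raw_html(app_shell))
```

If the summary shows no links, description, or canonical tag for a page that has them in the browser, those elements depend on JavaScript rendering to be seen.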

How to Improve JavaScript SEO?

The traditional way to solve JavaScript SEO issues is Server-Side Rendering (SSR). This, however, is a costly and risky solution that can drag down your rankings. Prerender, on the other hand, is a much cheaper and more reliable solution that doesn’t come with unwanted side effects. See how Prerender compares with SSR and other JavaScript SEO solutions here.

How Does Prerender Solve JavaScript SEO Issues?

Our dynamic rendering solution lets your JavaScript-heavy websites get indexed quickly and smoothly without taking away the interactive experience JS frameworks offer your users.

For requests made by search engine bots, Prerender creates a snapshot of your JS pages and feeds it to the crawlers, reducing both the crawl budget used and the page load time.

Requests made by human users are processed by your server through the normal route. This way, your JS website is loved by both search engines and human users.
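The routing decision described above can be sketched in a few lines of Python. This is a minimal illustration of the dynamic rendering idea, not Prerender's actual implementation: the user-agent pattern is a short, non-exhaustive assumption, and `is_crawler` and `choose_response` are hypothetical names.

```python
import re

# Assumed, non-exhaustive list of crawler user-agent substrings.
BOT_PATTERN = re.compile(
    r"googlebot|bingbot|duckduckbot|yandex|baiduspider", re.IGNORECASE
)


def is_crawler(user_agent: str) -> bool:
    """Return True when the User-Agent header looks like a search engine bot."""
    return bool(BOT_PATTERN.search(user_agent or ""))


def choose_response(user_agent: str, snapshot_html: str, app_html: str) -> str:
    """Serve the static snapshot to crawlers and the normal JS app to everyone else."""
    if is_crawler(user_agent):
        return snapshot_html
    return app_html


googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0"
print(is_crawler(googlebot), is_crawler(browser))
```

In a real deployment this check would typically live in server or CDN middleware, and the snapshot would come from a rendering service rather than a string passed in by hand.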

Why Does JavaScript Often Cause Missing Content?

Every time search engine bots crawl or index a page, they use part of the website’s limited crawl budget. Because JavaScript pages take more resources to process, they use up that budget faster. When the budget is exhausted, the page won’t be fully indexed, causing missing content and poor organic SEO performance.

How Can Prerender Avoid Missing Content?

Prerender helps you get more out of your crawl budget by pre-rendering your JavaScript pages into static HTML. Because static HTML takes fewer resources to crawl, search engine bots are far more likely to have enough budget to index your pages in full. As a result, every SEO element can help boost your page’s rank on SERPs.

Resources on how prerendering helps search engines to find your content

Want to improve the chances of search engines finding your dynamic content? Check out Prerender’s awesome JavaScript SEO resources – we’ve got everything you need to know. Looking for our technical documentation? Find it here.

Trusted by 75,000+ Brands Worldwide

Serving 2.7+ billion pages to crawlers.