If your website is built with JavaScript, understanding JavaScript SEO (search engine optimization) is crucial for growing your organic traffic. Google’s team is constantly refining its search engine. Part of that work is making sure its crawlers can access JavaScript content, keeping pace with how websites are built today.

Without access to JavaScript content, Google’s crawlers could not view web pages the way users do, which would make it hard to index and rank those pages correctly. In response, Google created the Web Rendering Service (WRS) to handle pages that depend on JavaScript rendering to function properly. Even so, there are common JavaScript SEO problems you might face.

In this piece, we’ll identify typical problems that arise when websites are built with JavaScript frameworks. We’ll also cover the rendering fundamentals every technical SEO needs to solve common JavaScript SEO problems.

8 Common JavaScript SEO Issues and How to Handle Them

Is JavaScript bad for SEO? Definitely not! However, when making JavaScript SEO-friendly, these are some of the most common issues you’re likely to encounter:

1. Robots.txt Blocking Resources

Remember that the Renderer works by caching all the building blocks of your site. If Google can’t access them, then they won’t be available to the Renderer and thus your page won’t be indexed properly.

To fix this, add this snippet to your robots.txt file:

User-Agent: Googlebot
Allow: /*.js
Allow: /*.css

2. Google Can’t See Internal Links

Google doesn’t navigate from page to page as a normal user would. Instead, it downloads a stateless version of the page.

This means it won’t catch any changes on a page that rely on what happens on a previous page.

What does that have to do with links? Well, if your links depend on an action, Google won’t be able to find said link, and won’t be able to find all the pages on your site.

Things like:

<a onclick="goTo('page')">Anchor</a>
<a href="javascript:goTo('page')">Anchor</a>
<span onclick="goTo('page')">Anchor</span>

That won’t work.

All links need to be <a> tags with a target URL in the href attribute for Google to be able to find and follow them.

If this sounds like your page, then it’s time to change all your links to a more SEO-friendly format.

If there’s a reason you need to declare an action for the link, you can then combine both syntaxes:

<a href="https://prerender.io/blog/" onclick="goTo('https://prerender.io/blog/')">Anchor</a>

Note: This rule also applies to pagination. Add the corresponding tags and attributes to your pagination menu.
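To spot this problem across a template, you can scan the markup for anchors that lack a real href. The sketch below is only a rough heuristic: findUncrawlableLinks is a hypothetical helper, and it uses a regular expression where a real audit would use a proper HTML parser.

```javascript
// Hypothetical helper: flags <a> tags that Google is unlikely to follow.
// A regex scan is only a rough heuristic; a real audit should use an HTML parser.
function findUncrawlableLinks(html) {
  const anchorTags = html.match(/<a\b[^>]*>/gi) || [];
  return anchorTags.filter((tag) => {
    const href = /\bhref\s*=\s*["']([^"']*)["']/i.exec(tag);
    // Flag tags with no href, an empty href, or a javascript: pseudo-URL.
    return !href || href[1].trim() === "" || /^javascript:/i.test(href[1].trim());
  });
}

const sample = `
  <a onclick="goTo('page')">Anchor</a>
  <a href="javascript:goTo('page')">Anchor</a>
  <a href="https://prerender.io/blog/" onclick="goTo('https://prerender.io/blog/')">Anchor</a>
`;

const flagged = findUncrawlableLinks(sample);
console.log(flagged.length); // 2
```

Only the third link passes, because it is the only one with a real URL in its href. Clickable <span> elements are not covered by this sketch and need a separate check.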

3. Outdated Sitemaps

Google loves sitemaps because they help Googlebot crawl websites and find new pages faster. Keeping them up to date manually can become a time-consuming challenge, however.

Luckily, most JavaScript frameworks have routers to help you clean URLs and generate sitemaps.

Just search Google for the framework you’re using plus the phrase “router sitemap”. Here’s an example of a sitemap router for React.

Note: We are not necessarily recommending this router. Always make sure to do your research before committing to one.
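If you would rather see what such a tool does under the hood, the idea is small enough to sketch. The example below assumes you can export your routes as a plain list of paths; buildSitemap, escapeXml, and the example.com URLs are hypothetical and not taken from any specific router.

```javascript
// Hypothetical helper: builds a sitemap.xml string from a list of route paths.
function escapeXml(value) {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildSitemap(baseUrl, routes) {
  const entries = routes
    // Resolve each route against the base URL so every <loc> is absolute.
    .map((route) => `  <url><loc>${escapeXml(new URL(route, baseUrl).href)}</loc></url>`)
    .join("\n");
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    entries,
    "</urlset>",
  ].join("\n");
}

// Example routes; in practice these would come from your router configuration.
const sitemap = buildSitemap("https://www.example.com", ["/", "/blog/", "/pricing"]);
console.log(sitemap);
```

Running this as part of your build keeps the sitemap in step with the routes without manual edits.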

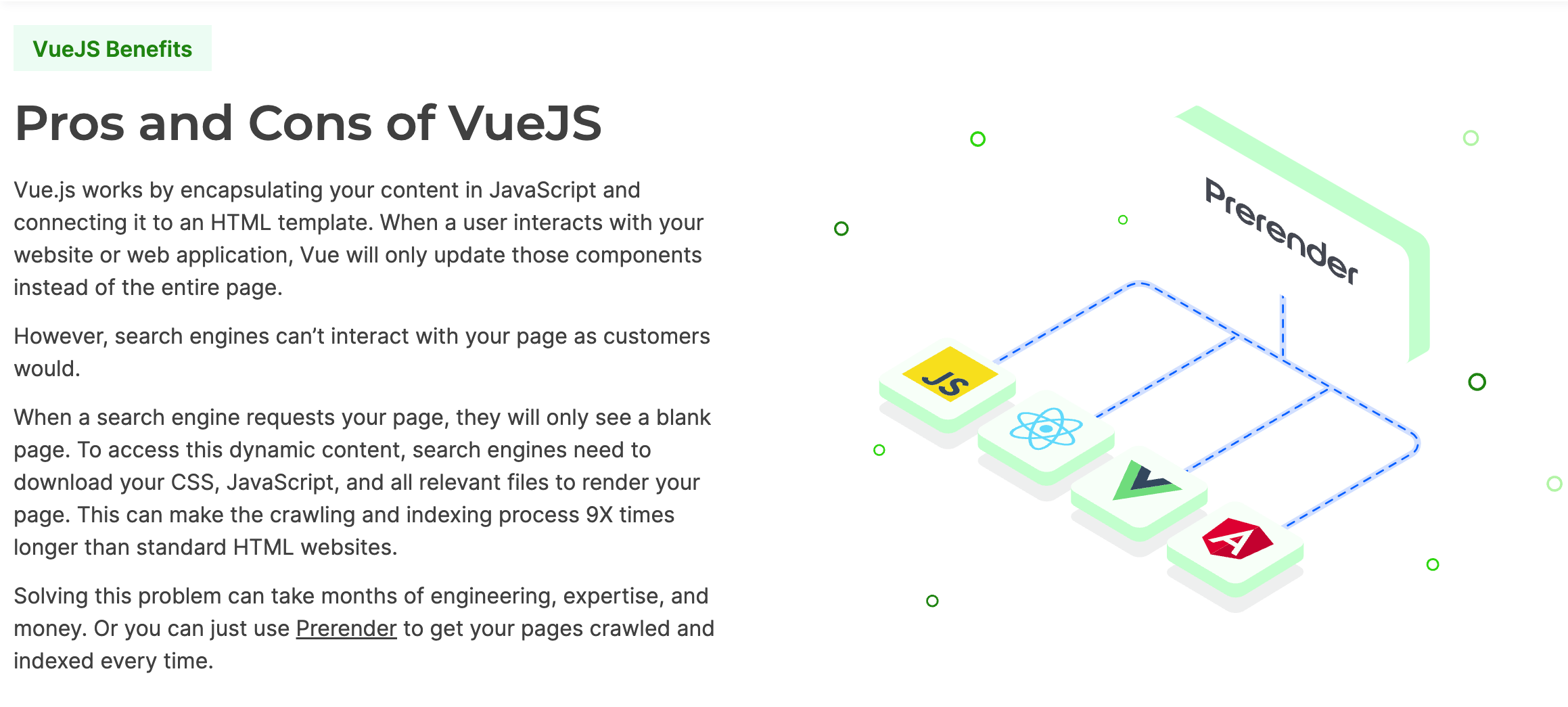

4. Client-Side Rendering

Sites built with React, Angular, Vue, and other JavaScript frameworks are all set to client-side rendering (CSR) as default.

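The problem with this is that crawlers that don’t execute JavaScript can’t see what’s on the page. All they’ll get is a nearly empty HTML shell. Google can render the page, but only once it has made it through the render queue, which can delay indexing.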

One possible solution is using the more traditional choice: server-side rendering (SSR).

Note: Here’s a full comparison between server-side and client-side rendering and how they impact your SEO.

If you go with SSR however, you’ll be losing user experience advantages you can only get with CSR.

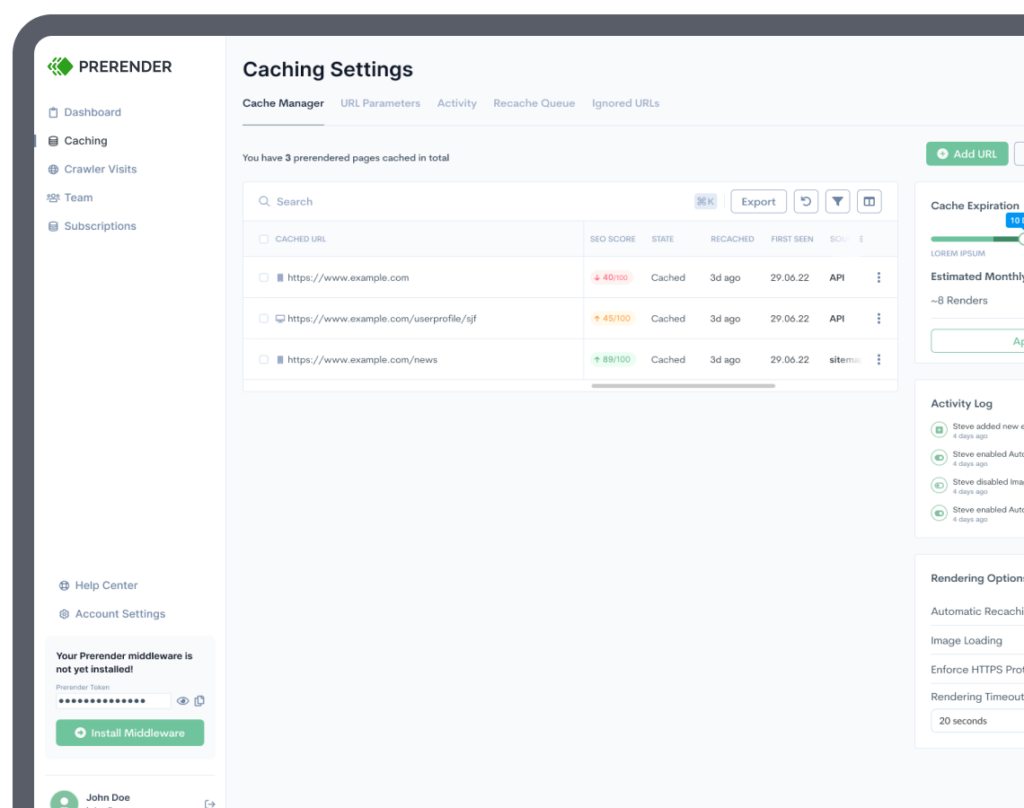

To get the best of both worlds, we recommend you use prerendering.

Just install Prerender’s middleware and it will check the user agent of every request to your page. If it’s a search engine crawler, like Googlebot, it will send a rendered HTML version of your page. If it’s a human, the request goes through the normal server route.
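The user-agent check at the heart of this approach can be sketched in a few lines. This is an illustration of the idea only, not Prerender’s actual middleware: the pattern list, isCrawler, and chooseResponse are all assumptions made for the example.

```javascript
// Hypothetical sketch of the user-agent check a prerendering middleware performs.
// The pattern list is an illustrative assumption, not Prerender's actual list.
const CRAWLER_PATTERNS = [/googlebot/i, /bingbot/i, /duckduckbot/i, /baiduspider/i, /yandex/i];

function isCrawler(userAgent) {
  if (!userAgent) return false;
  return CRAWLER_PATTERNS.some((pattern) => pattern.test(userAgent));
}

// Decide which kind of response a request should get.
function chooseResponse(userAgent) {
  return isCrawler(userAgent) ? "prerendered-html" : "client-side-app";
}

const googlebotUA =
  "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
const chromeUA =
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36";

console.log(chooseResponse(googlebotUA)); // prerendered-html
console.log(chooseResponse(chromeUA)); // client-side-app
```

In a real server, the first branch would return the cached, rendered HTML and the second would fall through to your normal route handler.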

Note: If you want to enable SSR for your site, here’s a guide on how to enable server-side rendering for Angular and React.

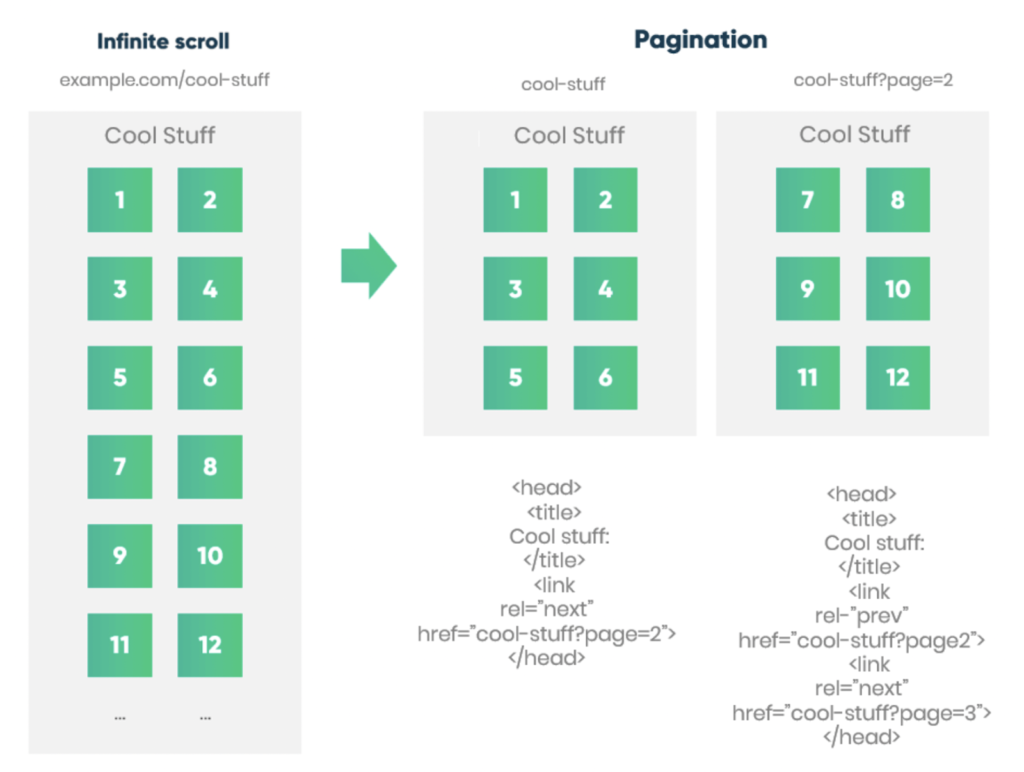

5. Infinite Scrolling Blocking Content

Infinite scrolling has many advantages over traditional pagination. However, it is almost impossible for search engine crawlers to access all of your content this way.

Remember that Googlebot doesn’t interact with the page, so there’s no scrolling down to find new links/pages.

To solve this problem, you could generate paginated pages in parallel with the infinite scroll.

The team at Delante explains how to implement this solution in detail in their infinite scroll SEO article. It’s really worth checking out if you’re struggling with it right now.
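As a rough illustration of the parallel pagination idea, each chunk that infinite scroll loads can also live at its own URL, linked with plain <a href> tags that crawlers can follow. The helper name and the ?page=N URL scheme below are assumptions for the example, not part of the linked article.

```javascript
// Hypothetical helper: for an infinite-scroll list, produce crawlable
// pagination links so every chunk of content also lives at its own URL.
function buildPaginationLinks(basePath, totalItems, itemsPerPage) {
  const pageCount = Math.max(1, Math.ceil(totalItems / itemsPerPage));
  const links = [];
  for (let page = 1; page <= pageCount; page += 1) {
    // Assumed URL scheme: /blog for page 1, /blog?page=N for the rest.
    const href = page === 1 ? basePath : `${basePath}?page=${page}`;
    links.push(`<a href="${href}">${page}</a>`);
  }
  return links;
}

// 45 items at 20 per page gives three pages.
console.log(buildPaginationLinks("/blog", 45, 20).join("\n"));
```

Users can keep scrolling as before, while the rendered links give Googlebot a path to every page of results.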

6. Large and Unoptimized JS Files

Although it is not technically a problem, your JavaScript code can prevent your website from loading quickly by blocking critical resources.

Before the browser can show the website to the user, all the render-blocking JS needs to be downloaded, parsed, and executed. This hurts your user experience and thus your SEO.

Here are a few things you can do to improve your website’s JavaScript SEO:

- Minify your JS file using a tool like https://javascript-minifier.com/

- Defer JavaScript loading so the browser can render the HTML and CSS files first.

- Don’t use JavaScript for things that don’t need it. For example, you can lazy-load images with the browser’s native loading="lazy" attribute instead of JS.

7. Setting the Head Tag Properly

SEOs love head tags, but for a developer, managing all the tags for every page in the project can become tiresome, which may result in mistakes.

To manage all of these SEO tags, you can use a module like React Helmet to add the tags and manage all the changes from one component.

You can find similar solutions for all major JavaScript frameworks.
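The underlying idea is the same whichever library you pick: one place owns every SEO tag, and each page only passes in its own values. The framework-free sketch below illustrates that idea. It is not React Helmet’s API, and renderHeadTags, escapeHtml, and the page data are hypothetical.

```javascript
// Hypothetical, framework-free sketch: one function owns every SEO head tag.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderHeadTags({ title, description, canonical }) {
  return [
    `<title>${escapeHtml(title)}</title>`,
    `<meta name="description" content="${escapeHtml(description)}">`,
    `<link rel="canonical" href="${escapeHtml(canonical)}">`,
  ].join("\n");
}

// Placeholder page data; each route would pass its own values.
const headTags = renderHeadTags({
  title: "Pros & Cons of VueJS",
  description: "A quick look at where Vue shines.",
  canonical: "https://www.example.com/vue-pros-cons",
});
console.log(headTags);
```

Because every tag goes through one function, a change such as adding a new meta tag happens once instead of on every page.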

8. Google’s Caching Issues (Bonus)

There’s one issue that could come up related to Google’s caching process.

Because Google caches pretty much every resource (from the HTML to API requests) when crawling your site and then uses this information for rendering, it can cause terrible problems when it uses old files to try to make sense of updated pages.

For these situations, the best solution is to generate new file names for files with significant changes to force Google to download the updated resource.
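Most bundlers can do this for you by putting a content hash in the output file name. The sketch below shows the principle only: hashedFileName and fnv1a are hypothetical helpers, and FNV-1a is used just to keep the example dependency-free, whereas build tools typically rely on a stronger hash.

```javascript
// Hypothetical helper: derive a file name from the file's contents so that
// changed files get new URLs. FNV-1a (applied to UTF-16 code units here) is
// used only to keep the sketch dependency-free.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i += 1) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16).padStart(8, "0");
}

function hashedFileName(fileName, contents) {
  const dot = fileName.lastIndexOf(".");
  const base = dot === -1 ? fileName : fileName.slice(0, dot);
  const ext = dot === -1 ? "" : fileName.slice(dot);
  return `${base}.${fnv1a(contents)}${ext}`;
}

const v1 = hashedFileName("app.js", "console.log('v1');");
const v2 = hashedFileName("app.js", "console.log('v2');");
console.log(v1, v2); // two different names for two different contents
```

Unchanged files keep their name and stay cached, while any edit produces a new URL that Google has to fetch again.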

Google and SEO for JavaScript Sites: How Google Crawls and Indexes JS-based Sites

When it comes to indexing pages, Google’s process is actually the combination of several microservices sharing information – we’ll focus on the main parts of the process so you can have an idea of what happens under the hood of their WRS.

It all starts when the Crawler sends GET requests to the server. Then, the server responds with all the building blocks of the page (including HTML files, CSS files, and JavaScript files) for the system to store for processing.

The Processing step involves a lot of different systems. However, in terms of JavaScript, there are a few services that are more relevant to us:

- Google looks for links to other pages in the href attribute of <a> tags, and for the resources it needs to build the page.

- It caches all the files needed to build the page, ignoring cache timings completely. So Google will fetch a new copy whenever it wants or needs to.

- Before the downloaded HTML is passed on, it goes through a duplicate elimination process.

- Finally, it will determine what directives to follow depending on which is more restrictive. This way, Google decides what to do if the JavaScript code changes a statement (like noindex to index) from the HTML file.
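The "most restrictive wins" rule in the last point can be sketched as follows. This is a simplified model based only on the description above, not Google’s actual implementation, and parseDirectives and effectiveRobots are hypothetical names.

```javascript
// Hypothetical sketch: combine the robots directives found in the raw HTML
// with those found after JavaScript runs, keeping the most restrictive result.
function parseDirectives(content) {
  return new Set(
    content
      .split(",")
      .map((token) => token.trim().toLowerCase())
      .filter(Boolean)
  );
}

function effectiveRobots(rawHtmlContent, renderedContent) {
  const all = new Set([...parseDirectives(rawHtmlContent), ...parseDirectives(renderedContent)]);
  return {
    // A restrictive directive in either version wins over a permissive one.
    index: !(all.has("noindex") || all.has("none")),
    follow: !(all.has("nofollow") || all.has("none")),
  };
}

// Raw HTML says noindex; JavaScript later rewrites the tag to "index, follow".
console.log(effectiveRobots("noindex", "index, follow"));
// { index: false, follow: true }
```

The practical takeaway is that you should not ship noindex in the initial HTML and count on JavaScript to remove it later.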

After Google has collected everything it needs, it’s time to send every downloaded page to the Render Queue. The time it takes for every page to go through the Queue can vary, so it’s hard to give an accurate time estimate.

Still, an important factor for this time is the crawl budget. So for JS sites it is crucial to optimize your site’s crawl budget.

Finally, the page arrives at the Renderer, where it is rendered (JavaScript is executed, and so on) with a headless Chrome browser, the same kind of browser that tools like Puppeteer drive.

Note: They used to use Chrome 41 for rendering, which caused a lot of problems. However, at the time of writing, Google uses an up-to-date version of Chrome, so modern browser features are generally compatible with the Renderer.

If you want to dive deeper into Google’s WRS, here’s an in-depth guide on how Googlebot renders pages.

How to Find JavaScript Problems Affecting SEO

Because so much can go wrong when working with pages built in React, Angular or Vue, it’s important to add routine checks to make sure Google is able to access our main content or see our pages at all.

Let’s explore a few ways you can find these discrepancies:

Use Google’s Testing Tools

Tools like the URL Inspection tool, the Mobile-Friendly Test, and the Rich Results Test have a few key differences from Google’s Renderer:

- They have a 5-second time-out. Because the tools run the check in real time, they have to stop at some point, so page speed can influence the results. The Renderer does not have this time limit when rendering your page.

- Because the URL Inspection tool, like any other testing tool from Google, works in real time, it pulls the resources it needs right then and there. The Renderer, instead, uses the cached version of your files, so in some cases it may not be using the latest version.

- To save resources, the Renderer skips the pixel painting process, which Google’s testing tools do perform.

Even with these differences, these are still powerful tools to debug your website.

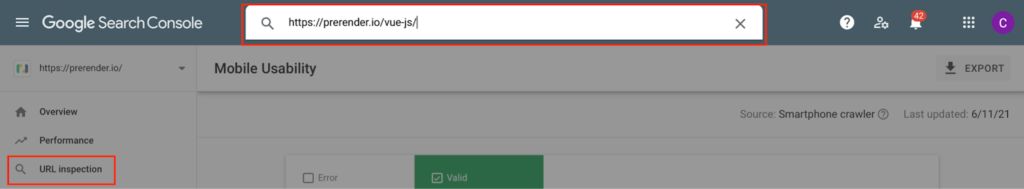

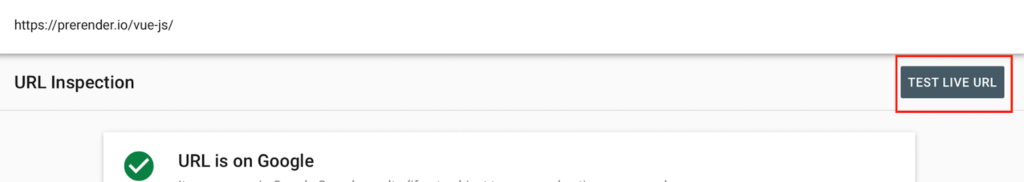

Let’s explore how to use them on your site. First, go to your Google Search Console and type the URL you want to check into the URL Inspection tool.

Then click on the TEST LIVE URL button.

The tool will report on a few issues right away. For example, our target page has some mobile issues according to the tool.

What we’re going to do now is click on the VIEW TESTED PAGE button and then go to SCREENSHOT.

There we’ll be able to see whether or not Google can access our main content, any differences between what our browser shows and the screenshot, and more.

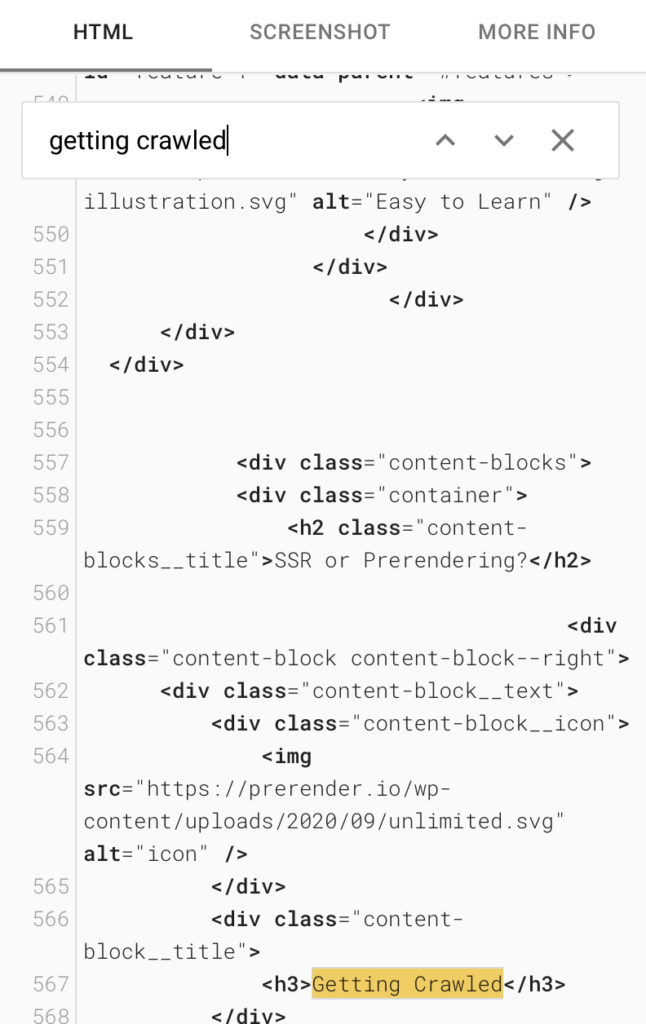

Just next to this tab, there’s the HTML tab. This is a great place to find out what ends up in the rendered DOM.

Go there and try to find a snippet of content. If it appears, Google definitely has access to your site’s resources. If it doesn’t appear, then you have work to do.
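If you save both the raw server response and the HTML from that tab, you can automate the same check. The sketch below is a minimal example with made-up markup, and locateSnippet is a hypothetical helper.

```javascript
// Hypothetical helper: classify where a content snippet shows up.
// rawHtml is the server response; renderedHtml is what the HTML tab shows.
function locateSnippet(rawHtml, renderedHtml, snippet) {
  const needle = snippet.toLowerCase();
  const inRaw = rawHtml.toLowerCase().includes(needle);
  const inRendered = renderedHtml.toLowerCase().includes(needle);
  if (inRaw) return "in initial HTML";
  if (inRendered) return "added by JavaScript and visible after rendering";
  return "missing after rendering";
}

const raw = '<div id="app"></div><script src="/app.js"></script>';
const rendered = '<div id="app"><h1>Pros and Cons of VueJS</h1></div>';

console.log(locateSnippet(raw, rendered, "pros and cons of vuejs"));
// added by JavaScript and visible after rendering
```

A result of "missing after rendering" is the case that needs work, since the content never reaches the rendered HTML.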

The tool will also report if there are any blocked resources. That will come in handy when debugging your page.

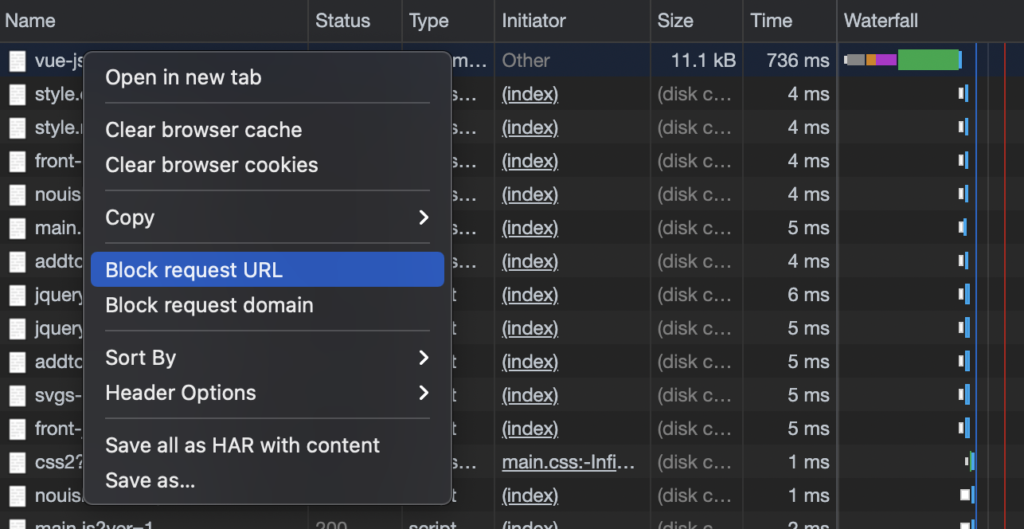

Use Chrome Developer Tools to Test Blocked Resources

Just because a resource is blocked by robots.txt doesn’t mean that your site will break. But it could.

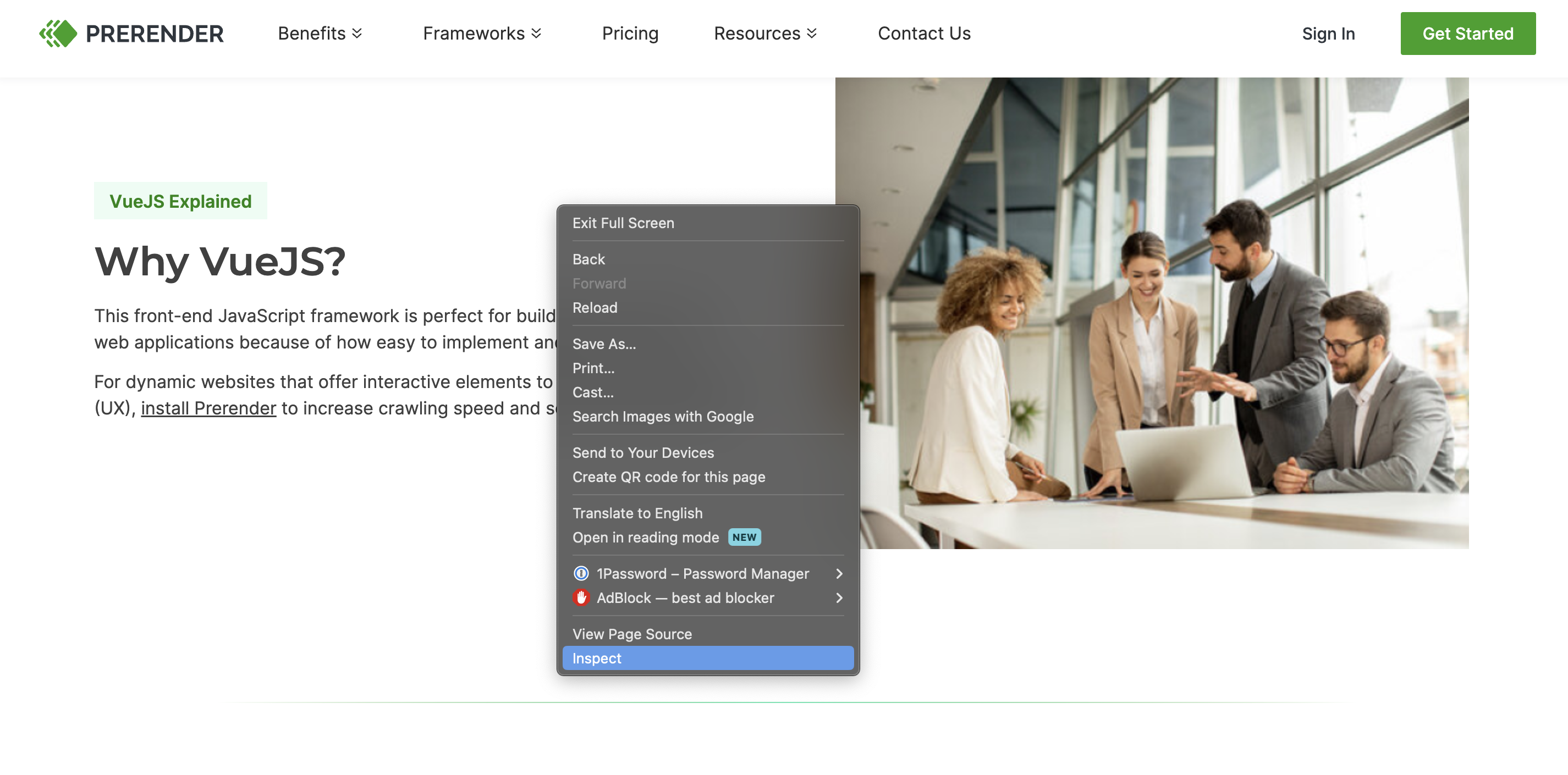

To test resources, in Google Chrome go to the page you want to audit, right-click, and inspect the page.

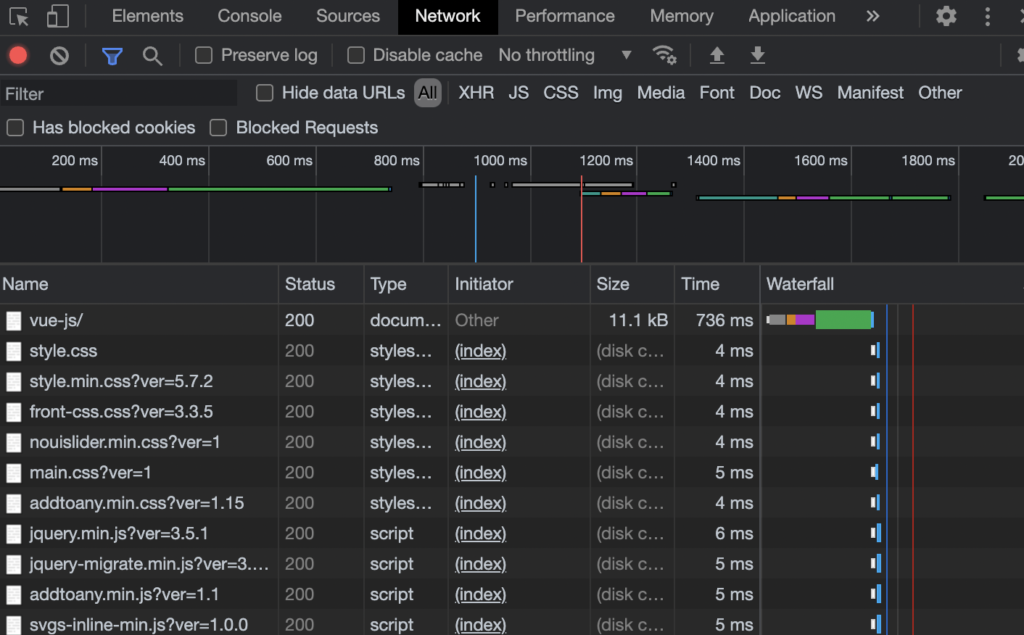

Head to the network tab and reload the page to see how your resources are loaded.

To test the importance of a resource, right-click on the resource you want to check and block it by hitting “Block request URL”.

Reload the page. If any crucial piece of content disappears, you’ll need to change the robots.txt directive.

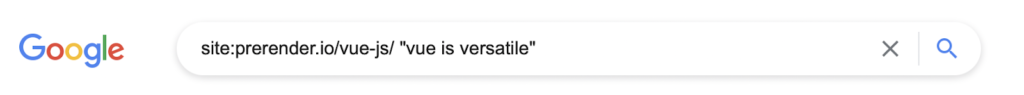

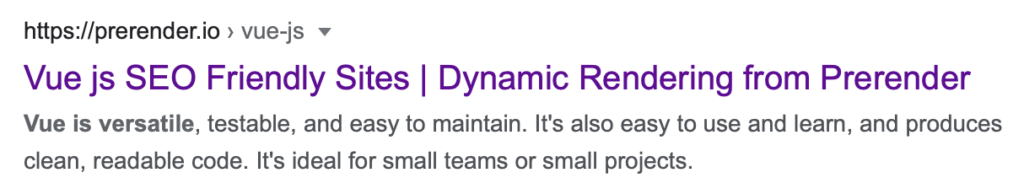

Use site: Command on Google Search to Find Indexing Issues

A quick check you can perform to find rendering issues is going to Google search and using the search command site:{yourtargeturl} and a piece of text from the page.

By default, our page shows the “Pros and Cons of VueJS” tab open.

But we want to check if Google can access the content inside the “Easy to Learn” tab by adding the phrase “vue is versatile” to our query.
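If you run this check for many pages, you can generate the search URLs instead of typing them. The sketch below wraps the phrase in quotes for an exact match, which is our assumption about how you would want to search. buildSiteQueryUrl is a hypothetical helper, and example.com/vue-guide is a placeholder rather than the page discussed here.

```javascript
// Hypothetical helper: build a Google search URL that combines the site:
// operator with an exact phrase taken from the page.
function buildSiteQueryUrl(targetUrl, phrase) {
  const query = `site:${targetUrl} "${phrase}"`;
  return `https://www.google.com/search?q=${encodeURIComponent(query)}`;
}

// example.com/vue-guide is a placeholder, not the page from this article.
console.log(buildSiteQueryUrl("example.com/vue-guide", "vue is versatile"));
```

Open the printed URL in a browser and check whether your page shows up for that phrase.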

Here’s what it is going to look like if your content is indexed correctly:

Of course, general SEO best practices are still relevant when considering JavaScript SEO.

JS is a tool that’s meant to provide better experiences, but it’s not the only piece of the puzzle.

For a more in-depth analysis, follow our technical SEO audit checklist to speed up the process without letting any element slip through the cracks.

Try Prerender.io’s free site audit tool.

To get clear insights on whether JavaScript is one of the root causes of your SEO problems, you can use Prerender.io’s free site audit tool.

This tool measures your site’s performance for search engines. If you get a low score, that’s a clear indicator that you have JavaScript rendering issues.

Get your free site audit here—no need to book a demo, enter your credit card information, or fill out a survey. Simply add your domain and email and you’ll get your results in minutes.

Mitigate Your JavaScript Rendering Issues With Prerender.io

The application of JavaScript continues to expand, and our responsibility is to persistently seek solutions that benefit our projects and enterprises.

We trust that this information has either resolved your issues or, at the very least, improved your ability to identify them effectively. Integrating Prerender will mitigate some of these JavaScript-related concerns. Watch a video below to see how it works.

Interested? Get started with 1,000 free renders today.

FAQs

What Is Prerendering?

When you click on a website, your browser sends a request to the site’s server to retrieve (fetch) the necessary files (like HTML, CSS, JavaScript, images, etc.). These files are used to build the final visual representation of the page (rendering), and this takes place every time you navigate to a page. Now, to speed up the process, we can render the page ahead of time. How, you might ask? We send a pre-rendered static HTML version of the page to the client, providing a faster and more seamless experience to the user or search engine.

What’s The Difference Between Client-Side Rendering, Server-Side Rendering, And Prerendering For JavaScript SEO?

Client-side rendering executes JavaScript in the user’s browser, which can cause issues for search engines. Server-side rendering executes JavaScript on the server before sending HTML to the browser, making it easier for search engines to crawl. Prerendering generates static HTML versions of your pages in advance, which can be served to search engines for optimal crawling and indexing.

How Does JavaScript Affect Core Web Vitals And What Can I Do To Optimize It?

JavaScript can significantly impact Core Web Vitals, especially Largest Contentful Paint (LCP) and Interaction to Next Paint (INP), which replaced First Input Delay (FID) as a Core Web Vital in 2024. To optimize, consider lazy loading non-critical JavaScript, minifying and compressing files, and using a pre-rendering solution for critical content.

How Can I Test If Google Is Properly Rendering My JavaScript Content?

You can use Google’s URL Inspection tool in Search Console to see how Googlebot renders your pages. Additionally, you can use tools like Prerender’s Rendering Test or Google’s Mobile-Friendly Test to check how your JavaScript content is being rendered.

What JavaScript SEO Tools Should I Use?

- Google Search Console: An obvious one, this free tool provides insights into how search engines see your website, including any JavaScript-related errors.

- Prerender.io: This technical SEO tool helps pre-render your website’s JavaScript content, creating a static HTML version that search engines can easily crawl and index in a fraction of the time. It’s much more cost-effective than server-side rendering, and is particularly beneficial for large, frequently-changing JavaScript sites or SPAs.

- Screaming Frog SEO Spider: This tool crawls your website and identifies potential SEO issues, including problems with JavaScript rendering.

How Soon Will I See Results from Prerender.io?

You should see quick improvements to your crawling and indexing within days of implementation. For broader SEO impacts, like traffic and sales, you should see positive impacts in 4-8 weeks as search engines crawl your pages. Learn more in the video below.