SEO is one of the most useful skills a marketer can have in their toolkit. It’s also one of the highest-paying and most in-demand skills in digital marketing.

However, it’s also one of the most challenging. Even veteran marketers with years of campaign experience in other digital marketing disciplines, such as PPC and Facebook advertising, often balk at SEO-related tasks because they find them frustrating and complicated.

SEO is challenging because there are so many factors which go into a successful SEO campaign – and this is especially the case with technical SEO.

As with any marketing skill, technical SEO is much less daunting when you follow a systematic process and know the right tools. We’ll lay out exactly what a technical SEO audit is, what tools you’ll need to conduct a successful audit, and what to look for as you go through your audit.

What Is Technical SEO, and Why Is It Important?

Technical SEO refers to the website and server optimizations required to help search engine web crawlers index your website and improve your organic traffic and search rankings.

In contrast to keyword optimization or link building, technical SEO covers anything that makes your website perform well from a technical standpoint: loading fast, being easily crawled and indexed, and displaying correctly on any screen size (mobile, tablet, or desktop) and at any network speed (3G, LTE etc.).

Making sure your website is technically well-optimized is a signal to both Google and your human users that your website is high-quality and provides a good user experience. It helps your users trust that your business is legitimate, and that your content provides the answers to whatever problems they’re having.

Google ranks and evaluates web pages based on hundreds of factors, many of which have to do with your website’s user experience: how fast it loads, how it’s organized, whether its URLs or site structure are confusing, whether there are any broken pages, whether it has the right security protocols etc. When you make sure your website is technically optimized, you help Google and other search engines to better understand your website, thereby improving your SERP visibility and organic traffic.

Technical SEO experts know this work is precise and multi-faceted. This is an area where success is often measured in milliseconds, and it can make the difference between getting into the top three positions on Google SERPs and having your website buried by your competition.

Technical SEO Audit Tools You’ll Need

Screaming Frog

Whenever you sit down to audit a website, one of the very first things you should do is crawl it using Screaming Frog.

Screaming Frog is an SEO tool that functions a lot like a web crawler. It’s one of the industry-leading SEO tools and has a customer base that includes Apple, Amazon and Disney. With it, you can quickly analyze any website, evaluate any technical issues and identify any necessary optimizations.

You can use it to:

- Identify broken links or web pages

- Find pages with missing or poorly optimized title tags or meta-descriptions

- Generate XML sitemaps for faster indexation

- Diagnose and fix any 301-redirect chains

- Discover duplicate or improperly formatted URLs, titles etc.

- Generate a layout of your website’s architecture and see it the way a search engine does

Google PageSpeed Insights

There are a number of diagnostic tools that technical marketers and web developers use to test the loading time of a webpage. They all have similar functions, yet they all measure slightly different things.

Google PageSpeed Insights is one of the more common ones. It’s considered the litmus test for how quickly a webpage loads. Others include GTMetrix, Pingdom and WebPageTest.

It’s widely cited in the online marketing space that the average website visitor will bounce off of a page in frustration if it takes more than three seconds to load. When your website is getting hundreds or thousands of visitors a day, that can end up costing you thousands or even millions of dollars.

Slow site speed means fewer conversions, a higher bounce rate and an underwhelming experience for your customers. Not only that, but it can hurt you with Google’s search algorithms, which take user experience metrics into account, and that alone can lead to lower rankings.

Google PageSpeed Insights evaluates the speed of your webpage using field data collected from real Chrome users who visit your website, alongside a simulated lab test. It also scores your page’s performance on both desktop and mobile devices on a scale of 0 to 100, and gives you a list of recommendations to improve it.

Ahrefs Site Audit

You’ll likely know Ahrefs from their series of digital marketing tutorials hosted by Sam Oh. However, Ahrefs’ main product is a backlink analysis tool that is considered the best on the market for a link profile audit.

The Ahrefs platform includes a site audit tool that checks for over 100 technical and on-page SEO issues that could be dragging down your search rankings.

Although it performs many of the functions of any technical site audit tool, you’ll probably use it mostly for backlink research. Ahrefs is the link builder’s Swiss Army knife.

That notwithstanding, the site audit tool is also incredibly useful. With it, you can group issues by type and put them together into full-color charts and graphs to show your marketing team. The categories include performance, social tags, content quality, incoming and outgoing links and broken images or scripts.

Moz

Just as you’re probably familiar with Sam Oh’s SEO tutorials, you’ve also probably seen Rand Fishkin’s Whiteboard Friday series of videos.

Rand Fishkin is the founder and erstwhile CEO of Moz. It’s a keyword research tool, site audit tool and enterprise-grade SEO platform all in one.

Moz is notable for having created the Domain Authority/Page Authority system for evaluating the backlink health of a website.

You probably already have MozBar installed as a Google Chrome extension. If not, it’s highly advisable that you install it. It allows you to see the backlinks and domain authority of any webpage you see on Google SERPs. Plus it’s free. You can download it from the Chrome Web Store.

Technical SEO Audit Checklist

This list may not contain everything to look out for in a technical SEO audit, since the optimizations and fixes required are usually specific to each website. However, it should serve as a general list of best practices to follow when conducting an audit.

On-Page SEO

On-page SEO refers to influencing any SEO ranking factors that are directly under your control as a webmaster. When people talk about on-page SEO they’re mostly referring to keywords, but the term also covers technical factors such as page speed, website architecture, indexability and so on. On-page SEO is in contrast to off-page SEO, which deals with any SEO factors that are not under a webmaster’s direct control. Off-page SEO primarily refers to backlinks, but also covers user metrics like bounce rate, session duration etc.

Other than backlinks, keywords are one of the most important factors in your website’s SEO health.

The subject of keyword research and keyword optimization is a whole topic of discussion in and of itself. For our purposes, on-page SEO in a technical SEO audit is more-or-less synonymous with keyword optimization.

Use your website crawler to identify whether your pages have a valuable keyword that’s frontloaded in the title and meta-description.

Screaming Frog also has a SERP preview feature with which you can see whether your title and meta description are truncated when they appear on Google search results.
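If you export your titles from a crawl, the two checks above can be roughed out in a few lines of Python. This is only a sketch: the function name `audit_title` is made up for illustration, and the 60-character and 30-character thresholds are assumptions rather than official limits, since Google truncates titles by displayed width and not by a fixed character count.

```python
def audit_title(title: str, keyword: str, max_len: int = 60, front: int = 30) -> dict:
    """Flag titles that risk truncation or bury the target keyword.

    max_len and front are assumed rule-of-thumb values, not Google limits.
    """
    position = title.lower().find(keyword.lower())
    return {
        "length": len(title),
        "may_truncate": len(title) > max_len,
        "has_keyword": position != -1,
        # "Frontloaded" here means the keyword starts within the first `front` characters.
        "frontloaded": 0 <= position < front,
    }


report = audit_title("Technical SEO Audit: A Step-by-Step Checklist", "technical seo audit")
print(report)
```

The same function could be run over the meta-description column with a larger `max_len`.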

Site Architecture Checks

Google and other search engines pay attention to the way your website is organized. This is referred to as your website architecture.

Generally, search engines want your webpages to be arranged in a logical and hierarchical order. Your homepage is the broadest, and each level down becomes more and more specific.

A common comparison is to a pyramid or a silo structure. Clusters of web pages about similar topics link to a page about a broader, umbrella topic, otherwise known as a pillar page, which passes PageRank to each of the pages below it.

Screaming Frog’s website visualization tool lets you see how your website is organized. As a rule of thumb, you never want a single webpage to be more than four clicks away from your homepage. Pages buried deeper than that are less likely to be crawled by Googlebot.
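To make the click-depth rule concrete, here is a minimal sketch that computes how many clicks each page is from the homepage using a breadth-first search. It assumes you already have your internal links as a dictionary of page to linked pages (for example from a crawl export); the names `click_depths` and `too_deep` and the sample site are hypothetical.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in a BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links: dict[str, list[str]], home: str, max_clicks: int = 4) -> list[str]:
    """List pages that sit more than `max_clicks` clicks from the homepage."""
    return sorted(p for p, d in click_depths(links, home).items() if d > max_clicks)


site = {"/": ["/a"], "/a": ["/b"], "/b": ["/c"], "/c": ["/d"], "/d": ["/e"]}
print(too_deep(site, "/"))  # ['/e']
```

Pages that never show up in the result of `click_depths` are not reachable from the homepage at all, which is worth checking separately.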

Crawl Errors

Whenever a search engine crawler or a human user comes across a webpage that is broken or missing, the server will return what’s known as a 4xx (client error) or 5xx (server error) response code to signify a broken page.

It makes for a poor user experience when your visitor navigates a website and encounters one of these. Luckily, you can use Screaming Frog to identify and replace or redirect the broken webpages.

When in Screaming Frog, navigate to Bulk Export, then to “Client Error (4xx) Inlinks” and “Server Error (5xx) Inlinks” to pull up reports for all of the 4xx or 5xx pages on your website.

Google Search Console will also give you a crawl error report. Just go to Index > Coverage to see how many of your web pages have error codes.
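If you’d rather sort a crawl export yourself, the grouping is simple to sketch. The example below assumes you have a mapping of URL to HTTP status code; the function names and the sample data are made up for illustration.

```python
def classify_status(code: int) -> str:
    """Group an HTTP status code into a broad class."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client_error"
    if 500 <= code < 600:
        return "server_error"
    return "other"


def broken_pages(crawl: dict[str, int]) -> dict[str, list[str]]:
    """Collect the URLs that returned 4xx or 5xx codes."""
    report = {"client_error": [], "server_error": []}
    for url, code in sorted(crawl.items()):
        kind = classify_status(code)
        if kind in report:
            report[kind].append(url)
    return report


crawl = {"/": 200, "/old-page": 404, "/api/report": 500, "/moved": 301}
print(broken_pages(crawl))
```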

Improperly Formatted URLs

URLs are a small but important part of your website’s SEO health.

Generally speaking, shorter URLs are better. It’s considered SEO best practice to not include uppercase letters or numbers in your URL.

When you have more than one word in your URL, be sure to separate them using hyphens “-” instead of underscores “_”. Underscores are a signal to Google to mash words together into one, whereas hyphens tell Google to separate them. Improper use of underscores can confuse Google and make your URL look like gobbledygook.

To get an overview of your URLs, go to the “Overview” panel in Screaming Frog, and scroll down to the “URL” section. You’ll see which of your URLs have numbers, uppercase letters or underscores.
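The same three checks are easy to script if you have a plain list of URLs. This is a small sketch using only the Python standard library; `url_issues` is a hypothetical helper name, and it only inspects the path, not the domain or query string.

```python
from urllib.parse import urlsplit


def url_issues(url: str) -> list[str]:
    """Return the formatting issues found in the path portion of a URL."""
    path = urlsplit(url).path
    issues = []
    if any(ch.isupper() for ch in path):
        issues.append("uppercase")
    if any(ch.isdigit() for ch in path):
        issues.append("numbers")
    if "_" in path:
        issues.append("underscores")
    return issues


print(url_issues("https://example.com/Blog/seo_tips_2021"))  # ['uppercase', 'numbers', 'underscores']
print(url_issues("https://example.com/blog/seo-tips"))       # []
```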

SSL Certificate / HTTPS Secure

Online businesses have had their reputation irreparably tarnished by the early days of the internet, when “online businesses” were often thinly-veiled scams which resorted to shady and dishonest business practices. Many people regard online businesses without a brick and mortar location or an office as being scammy, disreputable, or somehow less than legitimate.

This is the purpose of an SSL Certificate: to signify that your website is safe to use and won’t compromise your user’s sensitive information. It’s a signal of trust to both search engines and your human users, and it has real SEO implications.

In the event that two web pages ranking for the same keyword are equal to each other in terms of quality of content and technical optimization, the one that has an SSL certificate will rank more highly.

It’s easy to tell whether your SSL certificate is up to date. If your URL begins with https:// and your browser shows no security warning, you have a valid SSL certificate. If a red “X” or a “Not secure” warning appears next to your domain, it’s likely that your certificate has expired or has issues.

Renewing your SSL certificate takes just a few easy steps. Log in to your hosting provider or domain registrar (Bluehost, HostGator etc.), then:
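If you want an early warning before a certificate lapses, you can compute the days remaining from its expiry date. The sketch below assumes you already have the certificate’s `notAfter` string, which is the format Python’s `ssl` module reports from `SSLSocket.getpeercert()`; the helper name `days_until_expiry` is made up, and fetching the certificate over the network is left out.

```python
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> int:
    """Whole days left before a certificate expires (negative if already expired).

    not_after uses the certificate date format, e.g. "Jan  5 09:34:43 2018 GMT".
    """
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return int((expires - current) // 86400)


# This sample date is in the past, so the result is negative.
print(days_until_expiry("Jan  5 09:34:43 2018 GMT"))
```

A result below roughly 30 is a reasonable point to start the renewal, though that threshold is a judgment call.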

- Generate a Certificate Signing Request

- Select your SSL Certificate

- Select a renewal term of one or two years

- Click “Continue”

- Make the necessary payment

Duplicate and Thin Content

It used to be common practice for website owners to closely copy or outright plagiarize content from high-ranking websites to take over their SERP positions. To curb this kind of unethical behavior, Google rolled out the Panda algorithm update in 2011.

Panda’s purpose is to evaluate the quality of a website’s content: to give a page preferential search rankings if it covers a topic in depth, and to penalize it if it has blatantly ripped its content off from somewhere else. Likewise, if content is low-effort, poorly written, reused across multiple pages, stuffed with keywords or generally too short to be useful to the user, then Panda penalizes it as well.

Copied or reused content is known as duplicate content, and content that is too short or shallow to be useful is known as thin content. Both are bad for your website, and you don’t want either.

You can identify whether your website has duplicate or thin content by using Siteliner. Once you find the problem pages, rewrite the content as necessary.
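For a rough idea of how duplicate and thin pages can be flagged, here is a minimal sketch. It is not how Siteliner or Panda works; it simply compares overlapping three-word sequences (“shingles”) between two pages and counts words, and the 300-word cutoff is an assumed value you should tune.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Break text into overlapping word sequences of the given size."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str) -> float:
    """Jaccard similarity of two texts' shingles, from 0.0 to 1.0."""
    sa, sb = shingles(a), shingles(b)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def is_thin(text: str, min_words: int = 300) -> bool:
    """Flag pages below an assumed minimum word count."""
    return len(text.split()) < min_words


page_a = "our guide to technical seo audits covers crawl errors and redirects"
page_b = "our guide to technical seo audits covers sitemaps and robots files"
print(round(similarity(page_a, page_b), 2), is_thin(page_a))
```

Pairs of pages with a similarity close to 1.0 are the ones to rewrite or consolidate first.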

301-Redirect Chains

When web pages become broken or disused, or have newer versions with more recently updated content, website maintenance best practice is to decommission the page and send the user to the more recent URL using a 301-redirect.

However, as a website grows over time, careless web developers often redirect those URLs to yet newer ones as the website is redesigned, content is audited and consolidated, the website migrates to a new CMS or the needs of the website change. This causes what are known as 301-redirect chains.

A 301-redirect chain is what happens when three or more URLs are linked together in a sequence of redirects. Chains tend to be more common the bigger and older a website is. Sometimes they can even create infinite loops that redirect back to the original URL. They also take slightly longer to reach the end URL, which can bog down your page’s load time.

301-redirect chains can wreak havoc on your website’s user experience and waste your crawl budget. Web crawlers will usually bounce off of a website if a URL takes them to more than two pages in a chain. Meanwhile, your human users are likely to be confused and frustrated when their content takes a few extra seconds to load and doesn’t match the intent of their query.

Screaming Frog lets you export any 301-redirect chains on your domain. Just go to the “Reports” tab then click on “Redirects” to export as a .csv file. Then you can redirect any URLs away from the intermediary page and towards the end destination URL.

In other words, redirect any chains going from:

URL A → URL B → URL C

To simply:

URL A → URL C
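Collapsing chains like this can be sketched in code. The example below assumes you have your redirects as a simple source-to-target mapping (for instance, built from the exported .csv); `flatten_redirects` is a hypothetical helper that points every source straight at its final destination and raises an error if it finds a loop.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every redirecting URL directly to its final destination."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Keep following hops until we reach a URL that no longer redirects.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


print(flatten_redirects({"/a": "/b", "/b": "/c"}))  # {'/a': '/c', '/b': '/c'}
```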

Minifying CSS & JavaScript Files

Many websites built with WordPress have bloated, unused or otherwise obstructive CSS and JavaScript code. Scripts and CSS code take time for a browser to parse and render, and each CSS or JS file you use requires at least one HTTP request from the browser. This means that blocks of bloated code can drag down your web page’s load time significantly.

Web development and technical SEO best practices normally suggest that each website should feature one CSS file and one JavaScript file, and that any extraneous files should be consolidated where possible.

Minification is a process wherein code such as HTML, CSS and JavaScript is reduced in size by stripping out characters the browser doesn’t need, such as whitespace and comments. It can noticeably improve your website’s page speed and reduce bandwidth usage, which directly translates into a better user experience.

Optimization plugins such as PageSpeed Ninja and Smush will automate the process of minifying your scripts and stylesheets so neither you nor your development team has to spend hours doing it by hand. Afterwards, your pages should start loading in 2-3 seconds or less.
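To see what minification actually does to a file, here is a deliberately naive CSS example. It is a sketch for illustration only and not what any of the plugins above run: it strips comments and extra whitespace with regular expressions, and it would mangle edge cases such as quoted strings that contain braces or colons.

```python
import re


def minify_css(css: str) -> str:
    """Naively shrink CSS by removing comments and unneeded whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                         # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)           # trim around punctuation
    css = css.replace(";}", "}")                           # last semicolon is optional
    return css.strip()


source = "/* page body */\nbody {\n  color: red;\n  margin: 0 auto;\n}\n"
print(minify_css(source))  # body{color:red;margin:0 auto}
```

For production use, a maintained minifier or one of the plugins mentioned above is the safer choice.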

Image Optimization

Image files such as JPEGs and PNGs take up a surprising amount of resources and bandwidth to load. The larger an image is, the more it drags down your website’s speed. This is particularly the case if your website uses a lot of images and videos – and it probably does. The accepted practice in modern web development holds that human beings process images faster than words, and so images should already be a central part of your marketing.

Images are resource-intensive, and decreasing the load they place on your web page can boost your website’s speed dramatically. You can often reduce an image file by as much as 75% without any noticeable loss in image quality.

Google PageSpeed Insights can tell you which of your images are dragging down your page speed. From there, you can choose whether to compress the images, or better yet to convert them to web-optimized formats such as WebP.

Tools like TinyPNG will compress or convert individual image files for you, while image optimizers like ShortPixel can bulk optimize every image on your website. For most websites with lots of pages and images, compressing or converting all of them will take about a day or two.
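Before you start compressing, it helps to know which files are the heaviest. The sketch below assumes you have a local copy of your site’s image folder; the function name, the list of file extensions and the 200 KB threshold are all illustrative assumptions.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif"}


def oversized_images(root: str, max_bytes: int = 200_000) -> list[tuple[str, int]]:
    """List image files under `root` larger than `max_bytes`, biggest first."""
    found = []
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            size = path.stat().st_size
            if size > max_bytes:
                found.append((path.name, size))
    return sorted(found, key=lambda item: item[1], reverse=True)


# Example: scan the current directory and print the largest offenders.
for name, size in oversized_images("."):
    print(f"{name}: {size / 1000:.0f} KB")
```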

Mobile Optimization and Testing

Google has been pushing website owners to optimize their websites for mobile devices for years, leading up to its switch to mobile-first indexing by default for new websites in 2019.

You can check to see whether your website is optimized for mobile devices with Google’s Mobile-Friendly Test.

What’s more, you can see how it looks across screen sizes yourself by using your browser’s Inspect tool, where you can test how your website looks on different devices and on different network speeds.

Sitemap and Robots.txt

At some point during your audit, you should sit down to do a site query.

When you put site:yourdomain.com into Google, it tells you roughly how many of your web pages are indexed on Google. Take note of this information and save it for later.

The next thing you’ll want to do is to check to see whether your website has an XML sitemap and a robots.txt file. You can think of them as a roadmap and a blueprint of your website respectively.

An XML sitemap helps search engines to understand your website’s structure, as well as what pages are on it and where they’re located. It’s the single best thing you can do to ensure your website gets correctly indexed.

Your robots.txt file tells web crawlers which pages they are allowed to crawl and which they should stay away from.

Making an XML sitemap is quick and easy, and takes all of 5 minutes with Screaming Frog. Just crawl your website, then click on “Sitemaps” > “Create XML Sitemap.”

Then, submit it to Google Search Console in the Sitemaps report. If done correctly, your website should be indexed within a few days.

It’s a good idea to check your XML sitemap for errors before submitting it. You can do so using XML validators like CodeBeautify.org and XMLValidation.com.
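To show what a sitemap contains and what a basic validity check looks like, here is a minimal sketch using Python’s standard library. It builds a bare-bones sitemap with only `<loc>` entries and then parses it back, which fails loudly if the XML is malformed. The function names and URLs are placeholders, and this is no substitute for a full validator.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Build a minimal XML sitemap containing one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def sitemap_locations(xml_text: str) -> list[str]:
    """Parse a sitemap and return its URLs; raises ET.ParseError if malformed."""
    root = ET.fromstring(xml_text)
    return [loc.text for loc in root.iter(f"{{{SITEMAP_NS}}}loc")]


sitemap = build_sitemap(["https://example.com/", "https://example.com/blog/"])
print(sitemap_locations(sitemap))
```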

You can check for the presence of your website’s robots.txt file by navigating to yourdomain.com/robots.txt. Any pages involved in conversion actions, such as shopping cart or checkout pages, should be blocked with a “Disallow:” rule followed by the page’s path, which begins with a forward slash “/”.
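You can test your rules before publishing them with the robots.txt parser in Python’s standard library. The rules and paths below are examples only; `is_crawlable` is a hypothetical wrapper around `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Example rules: block the cart and checkout pages for every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/
"""


def is_crawlable(robots_txt: str, url: str, agent: str = "*") -> bool:
    """Return True if the given robots.txt rules let `agent` crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


print(is_crawlable(ROBOTS_TXT, "https://example.com/cart/item-1"))  # False
print(is_crawlable(ROBOTS_TXT, "https://example.com/blog/"))        # True
```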

Wrapping Up

Your users expect your website to be technically well-optimized: to load quickly, display properly on any device or screen size, be laid out in a way that makes logical sense, be secure from cyberattacks etc.

The things to look out for in an SEO audit include:

- On-Page SEO

- Architecture checks

- Crawl errors

- Improperly formatted URLs

- SSL Certification

- Duplicate and thin content

- 301-redirect chains

- Minification of CSS & JavaScript Files

- Image optimization

- Mobile optimization

- Sitemap and Robots.txt

FAQs

What Tools Are Essential For A Technical SEO Audit?

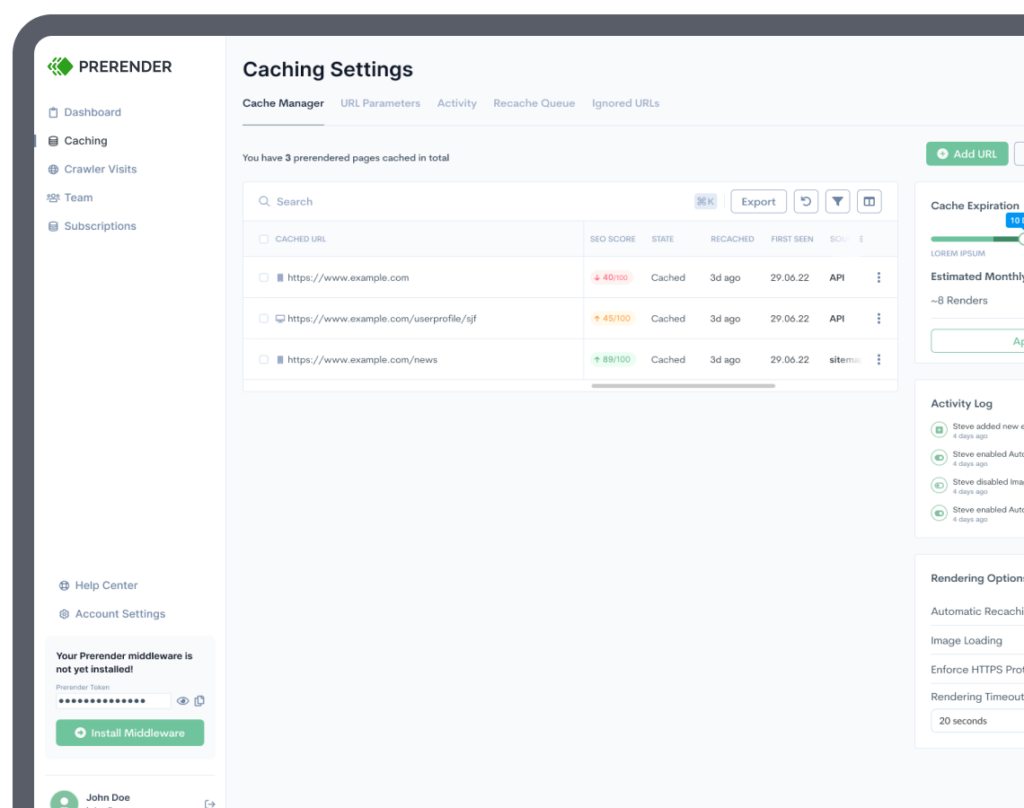

Essential tools include Google Search Console, Screaming Frog, PageSpeed Insights, Mobile-Friendly Test, and JavaScript rendering tools like Prerender.io. Each serves specific purposes in the audit process.

How Do I Prioritize Issues Found During An Audit?

Prioritize issues based on their impact on search engine crawling and indexing. Focus first on critical issues affecting site accessibility, then move to performance improvements and enhancement opportunities.

What Are The Most Critical Elements Of A Technical SEO Audit?

Key elements include: crawlability assessment, JavaScript rendering analysis, mobile optimization check, site speed evaluation, and indexation status. Focus on issues that directly impact search engine accessibility and user experience.

What Role Does JavaScript Play In Technical SEO Audits?

JavaScript rendering is crucial in modern technical SEO audits because it affects how search engines, LLMs, and social media crawlers see and understand your content. Many AI crawlers can’t render JavaScript, which makes JS rendering an important part of your SEO strategy. Use a solution like Prerender.io to help search engines, AI crawlers, and social media crawlers see your content. From there, your audience can find you.

Interested in seeing the impact that Prerender can have on your site? Try for free today.