Did you know that a seemingly small file, called robots.txt, can significantly impact your website’s SEO performance? This simple text file provides instructions to search engine crawlers, guiding them on which pages to crawl and which to avoid. This means that if you don’t configure your site’s robots.txt file correctly, it can have a serious negative effect on your website’s SEO.

In this technical SEO guide, we’ll delve into the intricacies of robots.txt files. You’ll learn what robots.txt is, how it affects your website’s SEO, how to create and submit it, and essential best practices for optimal robots.txt file configuration.

What is a Robots.txt File?

A robots.txt file is a simple text file placed at the root of your website that provides instructions to search engine crawlers, such as Googlebot. Using “Allow” and “Disallow” directives, you can control which pages they can access and crawl.

Here are some benefits of using a Robots.txt file for your overall web performance:

- Prioritizing bottom-line pages: by keeping crawlers away from low-value URLs, you help search engines focus on crawling the content that matters most to your business.

- Disallowing large files: prevent Googlebot from accessing large files that can negatively impact your web performance.

- Saving crawl budget: prevent search engines from wasting resources on less important content. This way, you can allocate more of their crawl budget to your priority pages.

Related: Need to refresh on what a crawl budget is? This crawl optimization guide can help.

How Does Robots.txt File Impact SEO Performance?

If a crawler encounters a URL that is blocked in your robots.txt file, it won’t fetch that page. Google Search Console lists such URLs as “Blocked by robots.txt”; they are not treated as soft 404 errors, and blocking pages on purpose does not hurt your rankings by itself.

The risk lies in blocking pages you do want in search results. Google can’t read their content, so they may never be indexed, or they may appear in results without a description.

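Robots.txt also affects your crawl budget: roughly, the number of URLs Google can and wants to crawl on your site within a given period. If crawlers spend that budget on large numbers of low-value URLs, such as filtered or parameterized pages, your important pages may be crawled less often. Blocking those URLs helps direct the budget to the pages that matter.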

Another reason why robots.txt files are important in SEO is that they give you more control over the way Googlebot crawls your website. If you have a website with a lot of pages, you might want to block certain sections from being crawled so they don’t overwhelm search engine crawlers or pull attention away from your key pages.

If you have a blog with hundreds of posts, you might want Google to focus its crawling on your most recent articles. If you have an eCommerce website with a lot of product pages, you might want Google to focus on your main category pages.

Configuring your robots.txt file correctly can help you control the way Googlebot crawls and indexes your website, which can eventually help improve your ranking.

Top tip: Discover 6 top technical SEO elements that can impact your SEO rankings.

When to Use a Robots.txt File?

A robots.txt file basically gives search engines a polite heads-up about which parts of your site they should or shouldn’t crawl. It’s generally used to keep crawlers away from staging pages, private sections, or duplicate content that you don’t want cluttering up search results. It’s also useful if you’re testing new pages or layouts and prefer to keep them under wraps until they’re ready to go live. Keep in mind that the file is publicly readable and is only a request, so it is not a security measure: truly private pages need password protection.

In general, you’ll use a robots.txt file whenever you need precise control over how search engine bots navigate your site, but you don’t need to hide pages from the public entirely.

Robots.txt Syntax: What Does a Robots.txt File Look Like?

Here’s a breakdown of the robots.txt syntax and what each directive typically means:

- User-agent: This directive specifies the search engine crawler(s) to which the rules apply, and you can use a specific user-agent name to target a crawler (e.g., User-agent: Googlebot) or use an asterisk (*) to target all bots (e.g., User-agent: *).

- Disallow: Lists the paths that a user-agent is not allowed to crawl. For example, Disallow: /private tells the crawler not to crawl any URL whose path starts with /private, which includes both /private/report and /private-offers.

- Allow: Allow is basically used to override the Disallow directive we just talked about, letting specific paths remain accessible. For instance, if you disallow /blog, but you want the crawler to still access /blog/public, you can use:

Disallow: /blog
Allow: /blog/public

- Sitemap (optional but recommended): The Sitemap directive points crawlers to your XML sitemap so they can discover your most important URLs. Use the full, absolute URL:

Sitemap: https://example.com/sitemap.xml

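Putting it all together, a classic robots.txt file with a sitemap reference looks like this:

User-agent: *
Disallow: /admin
Disallow: /tmp
Allow: /admin/public

User-agent: Googlebot
Disallow: /old-section

Sitemap: https://example.com/sitemap.xml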

Now, let’s break down the file and directives above to sum up the robots.txt syntax:

- User-agent: *: Rules for all crawlers.

- Disallow: /admin and Disallow: /tmp: Blocks these directories for every crawler that doesn’t have a group of its own.

- Allow: /admin/public: Creates an exception for /admin/public even though /admin is disallowed.

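- User-agent: Googlebot: Starts a separate group for Google’s crawler. Googlebot follows only the most specific group that matches it, so it ignores the User-agent: * rules; repeat any general rules (such as Disallow: /admin) here if Google should follow them too.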

- Disallow: /old-section: Prevents Googlebot from crawling anything in /old-section.

- Sitemap: …: Points crawlers to your sitemap.

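This robots.txt format is straightforward: each group starts with one or more User-agent lines, followed by any number of Allow or Disallow lines, and Sitemap lines can appear anywhere in the file. When writing your own robots.txt rules, remember that Google doesn’t simply apply them from top to bottom. A crawler obeys only the group whose User-agent most specifically matches it, so a specific group replaces the general one rather than adding to it. Within that group, the most specific (longest) matching path wins, and if an Allow and a Disallow rule are equally specific, Allow wins.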
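To make these matching rules concrete, here is a minimal Python sketch of how a Google-style crawler might evaluate the rules broken down above. It is a simplification under stated assumptions: it handles plain path prefixes only (no * or $ wildcards), matches user agents by exact, case-insensitive name, and the helper names parse_groups and is_allowed are hypothetical.

```python
# Simplified sketch of Google-style robots.txt evaluation.
# Assumptions: plain path prefixes only (no * or $ wildcards) and
# user-agent matching by exact, case-insensitive name.

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin
Disallow: /tmp
Allow: /admin/public

User-agent: Googlebot
Disallow: /old-section

Sitemap: https://example.com/sitemap.xml
"""


def parse_groups(text: str) -> dict[str, list[tuple[str, str]]]:
    """Map each user-agent name (lowercased) to its (rule, path) pairs."""
    groups: dict[str, list[tuple[str, str]]] = {}
    agents: list[str] = []
    previous_field = ""
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if previous_field != "user-agent":
                agents = []  # a new group starts; consecutive agents share it
            agents.append(value.lower())
            groups.setdefault(value.lower(), [])  # same agent twice = merged
        elif field in ("allow", "disallow") and value:
            for agent in agents:
                groups[agent].append((field, value))
        previous_field = field  # Sitemap lines belong to no group
    return groups


def is_allowed(text: str, user_agent: str, path: str) -> bool:
    groups = parse_groups(text)
    # A crawler obeys only the group that names it; "*" is the fallback.
    rules = groups.get(user_agent.lower(), groups.get("*", []))
    best_length, allowed = -1, True  # no matching rule means "allowed"
    for kind, rule_path in rules:
        if path.startswith(rule_path):
            # The longest (most specific) match wins; Allow wins a tie.
            longer = len(rule_path) > best_length
            tie_allow = len(rule_path) == best_length and kind == "allow"
            if longer or tie_allow:
                best_length, allowed = len(rule_path), kind == "allow"
    return allowed


print(is_allowed(ROBOTS_TXT, "Bingbot", "/admin/settings"))
print(is_allowed(ROBOTS_TXT, "Googlebot", "/admin/settings"))
```

Because Googlebot has its own group in this file, it ignores the User-agent: * rules, so /admin/settings is blocked for Bingbot but allowed for Googlebot.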

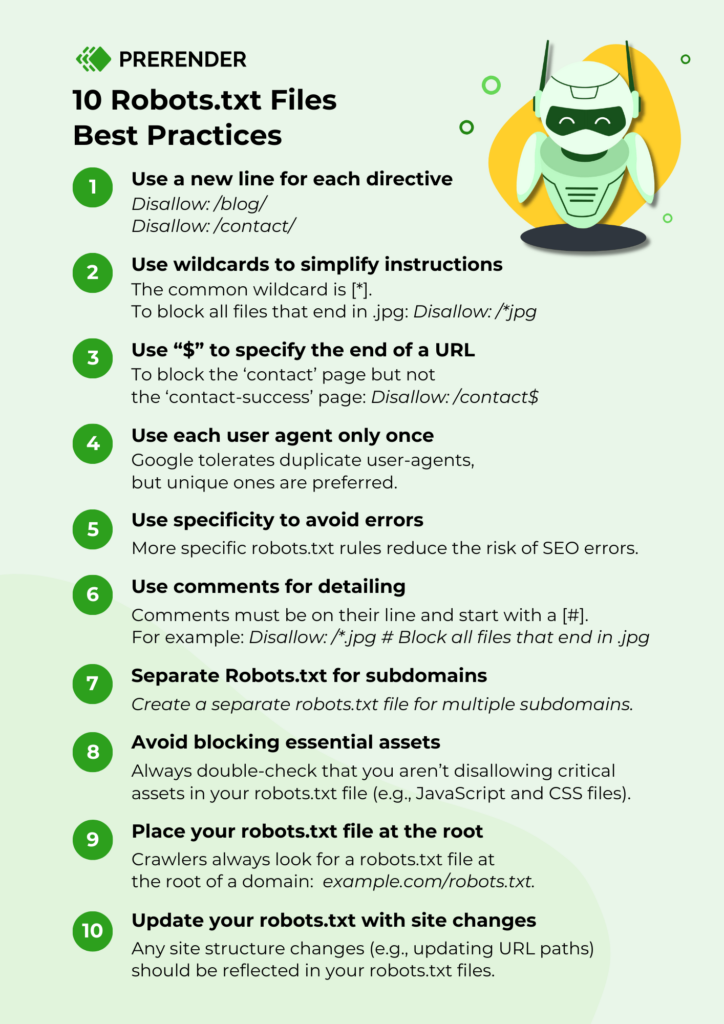

10 Robots.txt Best Practices to Boost SEO Performance

Now that you know the basics of robots.txt files, let’s go over some best practices. These are things you should do to make sure your file is effective and working properly.

1. Use a New Line for Each Directive

When you’re adding rules to your robots.txt file, it’s important to use a new line for each directive to avoid confusing search engine crawlers. This includes both Allow and Disallow rules.

For example, if you want to disallow web crawlers from crawling your blog and your contact page, you would add the following rules:

Disallow: /blog/

Disallow: /contact/

2. Use Wildcards To Simplify Instructions

If you have a lot of pages that you want to block, it can be time-consuming to add a rule for each one. Fortunately, you can use wildcards to simplify your instructions.

A wildcard is a character that can represent any sequence of characters, including none at all. The most common wildcard is the asterisk (*).

For example, if you want to block every URL that contains .jpg (such as your JPEG images), you would add the following rule:

Disallow: /*.jpg

3. Use “$” To Specify the End of a URL

The dollar sign ($) is another special character you can use to mark the end of a URL. This is helpful if you want to block one exact URL but not other URLs that merely start with the same path.

For example, if you want to block the contact page but not the contact-success page, you would add the following rule:

Disallow: /contact$
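The two special characters are easiest to understand side by side. This minimal Python sketch (rule_matches is a hypothetical helper) translates a robots.txt path pattern into a regular expression, following the matching rules Google documents: * matches any run of characters, a trailing $ anchors the end of the URL, and otherwise a rule only has to match the beginning of the URL path, including any query string. It ignores details such as percent-encoding.

```python
import re


def rule_matches(rule_path: str, url_path: str) -> bool:
    """Check a robots.txt path pattern against a URL path (plus query string).

    '*' matches any run of characters, including none; a '$' at the very
    end anchors the pattern to the end of the URL. Everything else is
    literal, and the pattern only has to match a prefix of the URL.
    """
    anchored = rule_path.endswith("$")
    core = rule_path[:-1] if anchored else rule_path
    regex = ".*".join(re.escape(piece) for piece in core.split("*"))
    if anchored:
        regex += r"\Z"
    # re.match anchors at the start only, which gives prefix-match semantics.
    return re.match(regex, url_path) is not None


for rule, path in [
    ("/*.jpg", "/images/photo.jpg"),
    ("/*.jpg", "/photo.jpg?size=large"),
    ("/*.jpg", "/blog/jpg-guide"),
    ("/contact$", "/contact"),
    ("/contact$", "/contact-success"),
    ("/contact$", "/contact/"),
]:
    print(f"{rule:<10} {path:<24} {rule_matches(rule, path)}")
```

Note that /*.jpg also matches the URL with a query string, while /contact$ blocks neither /contact-success nor /contact/ (with a trailing slash).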

4. Use Each User Agent Only Once

Google merges multiple groups that name the same User-agent into one, so repeating a user agent won’t break your file. However, it’s considered best practice to use each user agent only once, because a single group per crawler is much easier to read and maintain.

5. Use Specificity To Avoid Unintentional Errors

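When it comes to robots.txt files, specificity is key. The more specific you are with your rules, the less likely you are to make an error that could hurt your website’s SEO. Because rules match by path prefix, Disallow: /p blocks /products, /pricing, and /private all at once, while Disallow: /private/ blocks only the /private/ folder.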
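A quick way to see how far a rule reaches is to run it against a list of your own URL paths. This Python sketch assumes wildcard-free rules, where matching is a simple prefix check; the paths and the helper name blocked_by are hypothetical.

```python
# Hypothetical URL paths on a site.
PATHS = ["/products/shoes", "/pricing", "/private/report", "/private-offers", "/blog/post"]


def blocked_by(rule_path: str, paths: list[str]) -> list[str]:
    """Return the paths a wildcard-free Disallow rule would block.

    Without wildcards, a robots.txt rule matches every URL path that
    starts with it, so short rules reach further than they look.
    """
    return [path for path in paths if path.startswith(rule_path)]


for rule in ("/p", "/private", "/private/"):
    print(f"Disallow: {rule:<10} blocks {blocked_by(rule, PATHS)}")
```

Only the version with the trailing slash limits the rule to the /private/ folder; without it, /private-offers is caught too.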

6. Use Comments To Explain Your robots.txt File to Humans

Although robots.txt files are read by bots, humans still need to understand, maintain, and manage them. This is especially true if you have multiple people working on your website.

You can add comments to your robots.txt file to explain what certain rules do. A comment starts with # and runs to the end of the line, and crawlers ignore it. It can sit on its own line or after a rule.

For example, to explain a rule that blocks every URL containing .jpg, you could add the following comment:

Disallow: /*.jpg # Block every URL that contains .jpg

This would help anyone who needs to manage your robots.txt file understand what the rule is for and why it’s there.

7. Use a Separate robots.txt File for Each Subdomain

If you have a website with multiple subdomains, each one needs its own robots.txt file. Crawlers treat each subdomain as a separate host, so the rules at example.com/robots.txt don’t apply to blog.example.com. Separate files also keep things organized and make your rules easier for crawlers to interpret.

8. Avoid Blocking Essential Assets

Blocking JavaScript, CSS, or other resources that search engines need to render your pages can seriously harm your SEO. For instance, if Googlebot can’t render your page the way users see it, your rankings could drop. Always double-check that you aren’t disallowing critical assets in your robots.txt file.

9. Place Your Robots.txt File at the Root

Search engine crawlers only look for a robots.txt file at the root of a domain (e.g., example.com/robots.txt). If you place it in a subdirectory or give it a different name, crawlers won’t find it and will ignore your directives. Keeping your robots.txt file in the correct location ensures your SEO efforts aren’t wasted.

10. Update Your Robots.txt With Site Changes

Any time your site structure changes—like adding a new subfolder or updating URL paths—make sure you reflect those changes in your robots.txt file. Regular updates prevent crawlers from being misdirected, which helps keep your content visible and indexed appropriately.

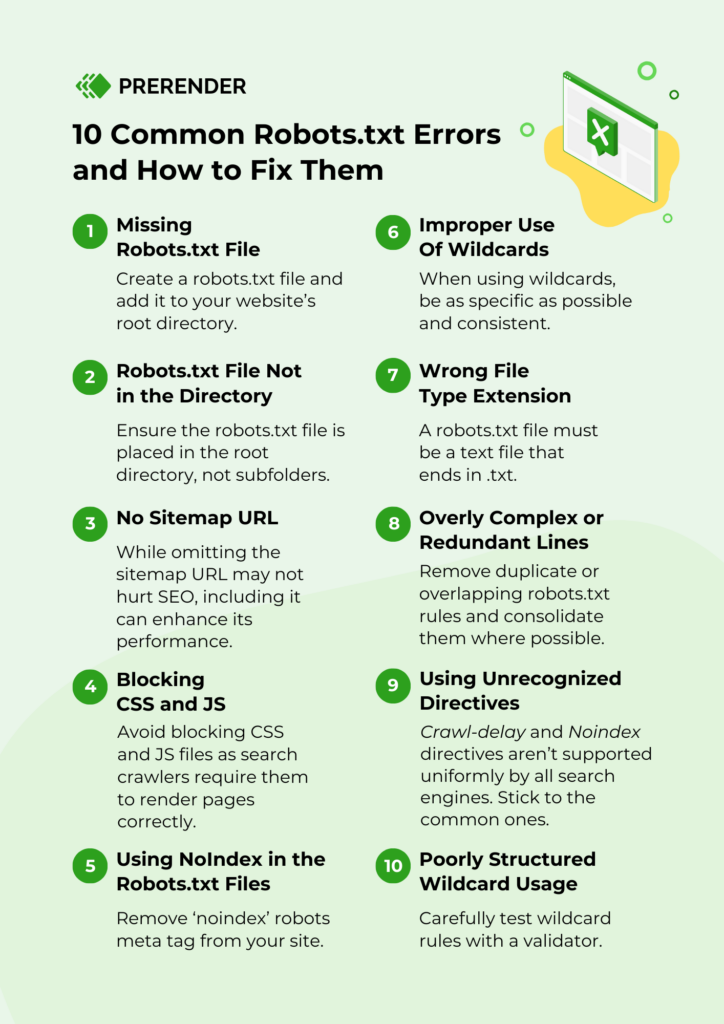

10 Common Robots.txt Errors and How to Fix Them

Understanding the most common mistakes people make with their robots.txt files can help you avoid making them yourself. Here are some of the most common mistakes and how to fix these technical SEO issues.

1. Missing Robots.txt File

The most common robots.txt file mistake is not having one at all. If you don’t have a robots.txt file, search engine crawlers will assume that they are allowed to crawl your entire website.

To fix this, you’ll need to create a robots.txt file and add it to your website’s root directory.

2. Robots.txt File Not in the Root Directory

If you don’t have a robots.txt file in your website’s root directory, search engine crawlers won’t be able to find it. As a result, they will assume that they are allowed to crawl your entire website.

There should be a single text file, named robots.txt, placed in the root directory rather than in a subfolder.

3. Omitting the Sitemap URL from Robots.txt

Your robots.txt file should always include a link to your website’s sitemap. This helps search engine crawlers find and index your pages.

Omitting the sitemap URL from your robots.txt file is a common mistake. It won’t hurt your website’s SEO directly, but adding it makes it easier for crawlers to discover your sitemap and the URLs listed in it.
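A quick way to confirm that your file actually declares a sitemap is to parse it. Python’s standard-library urllib.robotparser (Python 3.8+) exposes Sitemap lines through site_maps(), which returns None when there are none. The file contents below are a hypothetical example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents; in practice, read your own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
sitemaps = parser.site_maps()  # list of URLs, or None if no Sitemap line
if sitemaps:
    print("Sitemaps declared:", sitemaps)
else:
    print("No Sitemap line found; consider adding one.")
```

The parser collects Sitemap lines wherever they appear, independent of any User-agent group.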

4. Blocking CSS and JavaScript

According to John Mueller, you must avoid blocking CSS and JS files as Google search crawlers require them to render the page correctly.

If bots can’t render your pages properly, those pages may not be indexed or ranked as they should be.

5. Using NoIndex in the Robots.txt Files

Since September 1, 2019, Google no longer supports a noindex rule inside robots.txt files. A Noindex: line in robots.txt is simply ignored, so you should remove it.

If you need to keep a page out of search results, use the noindex robots meta tag or the X-Robots-Tag HTTP header instead, and make sure the page isn’t blocked in robots.txt, because Google has to crawl the page to see the tag.

Related: Discover the impact of noindex vs. nofollow tags on your site’s crawl budget.

6. Improper Use Of Wildcards

Using wildcards incorrectly can block files and directories that you never meant to restrict.

When using wildcards, be as specific as possible. This will help you avoid making any mistakes that could hurt your website’s SEO. Also, stick to the supported wildcards: the asterisk (*) and the dollar sign ($).

7. Wrong File Type Extension

As the name implies, a robots.txt file must be a plain text file named robots.txt. It cannot be an HTML file, image, or any other type of file, and it must be UTF-8 encoded. Useful introductory resources are Google’s robots.txt guide and Google’s robots.txt FAQ.

8. Overly Complex or Redundant Lines

Sometimes webmasters add a flurry of rules, such as multiple Disallow directives for the same paths or unnecessary wildcard usage. These can confuse both bots and human collaborators.

You can simplify your robots.txt file by removing duplicate or overlapping rules and consolidating them where possible. Remember that when implementing robots.txt files, using fewer lines means fewer chances of making mistakes.

9. Using Unrecognized Directives

Some directives (like Crawl-delay or Noindex) are not supported uniformly by all search engines. Relying on them could lead to inconsistent behavior across different crawlers.

It’s generally best to stick to the officially recognized robots.txt directives we talked about earlier (User-agent, Allow, Disallow, and Sitemap) for broad compatibility. For other controls, such as keeping pages out of the index, rely on meta robots tags or each search engine’s own tools.
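Several of the mistakes above, such as a file that isn’t valid UTF-8 (mistake 7), redundant lines (mistake 8), and unrecognized directives like Noindex (mistake 9), are easy to catch automatically. Below is a minimal linter sketch; lint_robots is a hypothetical helper, not a Google tool, and it only knows the four directives recommended above, so it will also flag Crawl-delay, which Google ignores but some other crawlers honor.

```python
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots(data: bytes) -> list[str]:
    """Return warnings for a robots.txt file, given its raw bytes."""
    try:
        # "utf-8-sig" also accepts (and strips) an optional byte-order mark.
        text = data.decode("utf-8-sig")
    except UnicodeDecodeError:
        return ["file is not valid UTF-8"]

    problems: list[str] = []
    seen_agent = False
    group_rules: set[tuple[str, str]] = set()
    previous_field = ""
    for number, raw_line in enumerate(text.splitlines(), start=1):
        line = raw_line.split("#", 1)[0].strip()  # comments are ignored
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append(f"line {number}: unrecognized directive '{field}'")
        elif field == "user-agent":
            if previous_field != "user-agent":
                group_rules = set()  # a new group starts
            seen_agent = True
        elif field in ("allow", "disallow"):
            if not seen_agent:
                problems.append(f"line {number}: rule appears before any User-agent line")
            elif (field, value) in group_rules:
                problems.append(f"line {number}: duplicate rule '{field}: {value}'")
            group_rules.add((field, value))
        previous_field = field
    return problems


sample = b"User-agent: *\nDisallow: /tmp\nDisallow: /tmp\nNoindex: /drafts\nCrawl-delay: 10\n"
for problem in lint_robots(sample):
    print(problem)
```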

10. Poorly Structured Wildcard Usage

Even though wildcards can simplify your rules, sloppy usage, such as placing them in the wrong part of a path, can inadvertently block or allow entire sections of your site. For example, Disallow: /images*.jpg blocks /images/photo.jpg but also /images-archive/old.jpg, because the * can stretch across the rest of the folder name.

Here’s how to fix it: Carefully test wildcard rules with a validator or in a staging environment. Ensure you fully understand how * (asterisk) and $ (end of URL) function to avoid accidentally blocking critical content.
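One way to test a wildcard rule before deploying it is a tiny harness: list URL paths you expect the rule to block and paths it must leave alone, then report any surprises. This sketch assumes Google-style * and $ matching; the helper names and the paths are hypothetical.

```python
import re


def rule_matches(rule_path: str, url_path: str) -> bool:
    """Google-style match: '*' = any characters, trailing '$' = end of URL."""
    anchored = rule_path.endswith("$")
    core = rule_path[:-1] if anchored else rule_path
    regex = ".*".join(re.escape(piece) for piece in core.split("*"))
    if anchored:
        regex += r"\Z"
    return re.match(regex, url_path) is not None  # prefix match from the start


def check_rule(rule_path: str, should_block: list[str], should_allow: list[str]):
    """Return (paths the rule misses, paths the rule blocks by accident)."""
    missed = [p for p in should_block if not rule_matches(rule_path, p)]
    overblocked = [p for p in should_allow if rule_matches(rule_path, p)]
    return missed, overblocked


# Hypothetical expectations for a rule meant to block JPEGs in /images/ only.
MUST_BLOCK = ["/images/photo.jpg"]
MUST_ALLOW = ["/images-archive/old.jpg", "/images/jpg-guide.html"]

for rule in ("/images*.jpg", "/images/*.jpg$"):
    missed, overblocked = check_rule(rule, MUST_BLOCK, MUST_ALLOW)
    print(f"{rule:<16} missed={missed} overblocked={overblocked}")
```

Here the loose rule /images*.jpg also catches /images-archive/old.jpg, while the tighter /images/*.jpg$ passes both checks.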

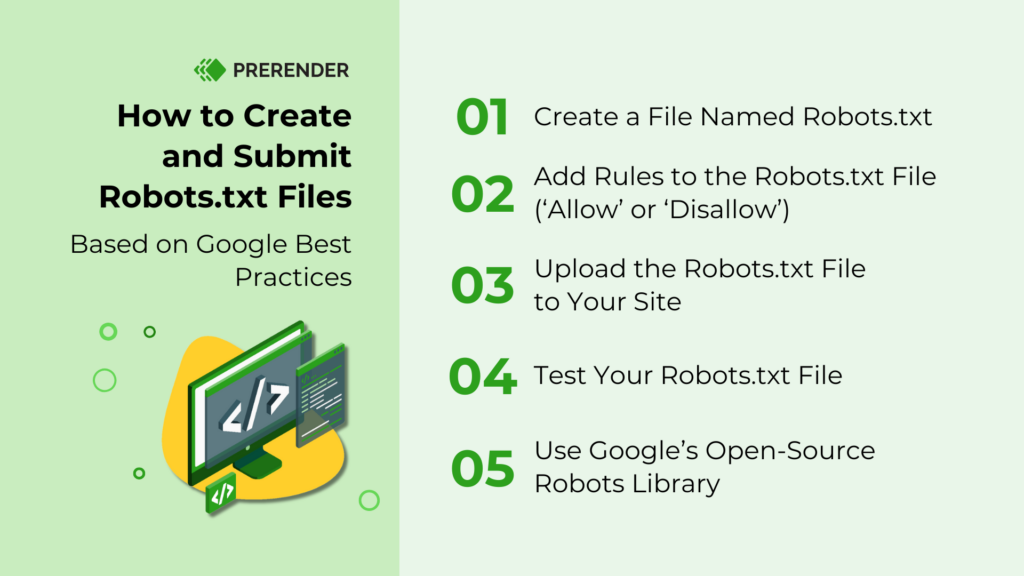

How to Create and Submit Robots.txt Files (Google’s Recommendations)

Now that we’ve gone over why robots.txt files are important in SEO, let’s discuss how to create and submit a robots.txt file for your website. This setup process follows Google’s recommendations. You can read Google’s robots.txt implementation tutorial here.

Step 1: Create a File Named Robots.txt

The first step is to create a file named robots.txt. This file needs to be placed in the root directory of your website – the highest-level directory that contains all other files and directories on your website.

Here’s an example of proper placement: on the apple.com site, the root directory is apple.com/, so the file lives at apple.com/robots.txt.

You can create a robots.txt file with any text editor, but many content management systems, such as WordPress, will create one for you automatically.

Step 2: Add Rules to the Robots.txt File

Once you’ve created the robots.txt file, the next step is to add rules. These rules will tell web crawlers which pages they can and cannot access.

The two main types of rules you can add are Allow and Disallow. Each group of rules must start with a User-agent line that says which crawler the rules apply to.

Allow rules will tell web crawlers that they are allowed to crawl a certain page.

Disallow rules will tell web crawlers that they are not allowed to crawl a certain page.

For example, if you want to explicitly allow web crawlers to crawl your entire site, starting from the homepage, you would add the following rule:

Allow: /

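If you want to stop web crawlers from crawling a certain subfolder, such as your blog, use its path:

Disallow: /blog/

Be careful: Disallow: / on its own blocks your entire site. To block a subdomain, add rules to the robots.txt file at the root of that subdomain.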

Step 3: Upload the Robots.txt File to Your Site

After you have added the rules to your robots.txt file, the next step is to upload it to your website. You can do this using an FTP client or your hosting control panel.

If you’re not sure how to upload the file, contact your web host and they should be able to help you.

Step 4: Test Your Robots.txt File

After you have uploaded the robots.txt file to your website, the next step is to test it to make sure it’s working correctly. Google Search Console’s robots.txt report, which replaced the standalone robots.txt Tester in late 2023, shows the robots.txt files Google found for your site, when they were last crawled, and any warnings or errors. It only covers robots.txt files located at the root of your site.

To check whether a specific URL is blocked, run it through the URL Inspection tool in Search Console, which reports whether crawling is allowed by your robots.txt file.

Step 5: Use Google’s Open-Source Robots Library

If you are a more experienced developer, Google has also open-sourced the robots.txt parser and matcher used in its production systems (a C++ library), which you can use to test your robots.txt rules locally on your computer.
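Google’s library is written in C++. If you work in Python, the standard library’s urllib.robotparser is a convenient stand-in for quick checks, but be aware that it applies the first matching rule in file order and does not expand * or $ wildcards, so its answers can differ from Google’s. This sketch, using hypothetical rules and URLs, shows the difference:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block /blog but carve out /blog/public.
DISALLOW_FIRST = """\
User-agent: *
Disallow: /blog
Allow: /blog/public
"""

ALLOW_FIRST = """\
User-agent: *
Allow: /blog/public
Disallow: /blog
"""


def can_fetch(robots_txt: str, url: str) -> bool:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


url = "https://example.com/blog/public/post"
print(can_fetch(DISALLOW_FIRST, url))  # False: the first matching rule wins here
print(can_fetch(ALLOW_FIRST, url))     # True
```

Under Google’s longest-match rule, both files allow /blog/public/post, so treat results from urllib.robotparser as a rough check rather than a substitute for Google’s own tools.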

Pro tip: Optimizing the robots.txt files for ecommerce has its own challenges. Find out what they are and how to solve them in our robots.txt best practices for ecommerce SEO guide.

Where Should I Put My Robots.txt File?

The best spot for your robots.txt file is right in your site’s root directory—so, for example, you’d have example.com/robots.txt. That’s where search engines know to look by default. If you drop it into a subfolder, like example.com/blog/robots.txt, there’s a good chance crawlers won’t see it at all.

It’s the same story for subdomains: if you’ve got blog.example.com or store.example.com, each one needs its own robots.txt file placed at the root of that subdomain. This setup keeps everything straightforward and helps ensure search engines follow the rules you’ve laid out.
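Because crawlers only look at the root of each host, you can always work out which robots.txt file governs a page from its URL alone. A minimal sketch using Python’s urllib.parse (the function name robots_txt_url is hypothetical):

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url.

    Crawlers look at the root of the page's own scheme, host, and port,
    never in a subfolder and never on a different subdomain.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


for page in (
    "https://example.com/blog/post-1?ref=nav",
    "https://blog.example.com/2024/hello",
    "http://store.example.com:8080/cart",
):
    print(page, "->", robots_txt_url(page))
```

A page on blog.example.com is governed by blog.example.com/robots.txt, never by the file on example.com.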

How Do I Test and Validate My Robots.txt File?

Testing and validating your robots.txt file is a must to ensure you’ve implemented the robots.txt files correctly.

To test and validate your robots.txt file, start with the robots.txt report in Google Search Console, which highlights any errors or warnings Google found when it fetched the file. The URL Inspection tool is also super handy for testing specific URLs, so you can see if you accidentally blocked pages you didn’t intend to.

Next, pay attention to your crawl data in Google Search Console’s Page indexing report (formerly the Coverage report). If you spot pages that are “Blocked by robots.txt” but shouldn’t be, go back and fine-tune your robots.txt file setup. Third-party SEO auditing tools, like Screaming Frog or SEMrush, can also simulate how different search engines read your robots.txt file configuration, helping you catch issues before they become real problems.

Finally, if you’re planning big changes, like blocking entire sections of your site, always test them in a staging environment. That way, you can see how crawlers actually behave before rolling out those robots.txt directives to your live site.

What Happens to Your Site’s SEO if a Robots.txt File Is Broken or Missing?

If your robots.txt file is broken or missing, search engine crawlers may crawl pages that you don’t want them to. Those pages can then end up ranking in Google, which is not ideal. Unrestricted crawling may also put extra load on your server as crawlers try to crawl everything on your website.

A broken robots.txt file can also cause search engine crawlers to miss important pages on your website. An overly broad rule, such as Disallow: /, can block pages you want indexed, and if requests for the file return a server error (5xx), Google may temporarily treat your whole site as blocked.

In short, it’s important to make sure your robots.txt file is working correctly and that it’s located in the root directory of your website. Rectify this problem by creating new rules or uploading the file to your root directory if it’s missing.

Achieve Better Indexing and SEO Rankings With Robots.txt Files

A robots.txt file is a powerful tool that can be used to improve your website’s SEO. However, it’s important to use it correctly.

When used properly, a robots.txt file can help you control which pages search engines crawl and improve your website’s crawlability. It can also help you avoid duplicate content issues.

On the other hand, if used incorrectly, a robots.txt file can do more harm than good. It’s important to avoid common mistakes and follow the best practices that will help you use your robots.txt file to its full potential and improve your website’s SEO.

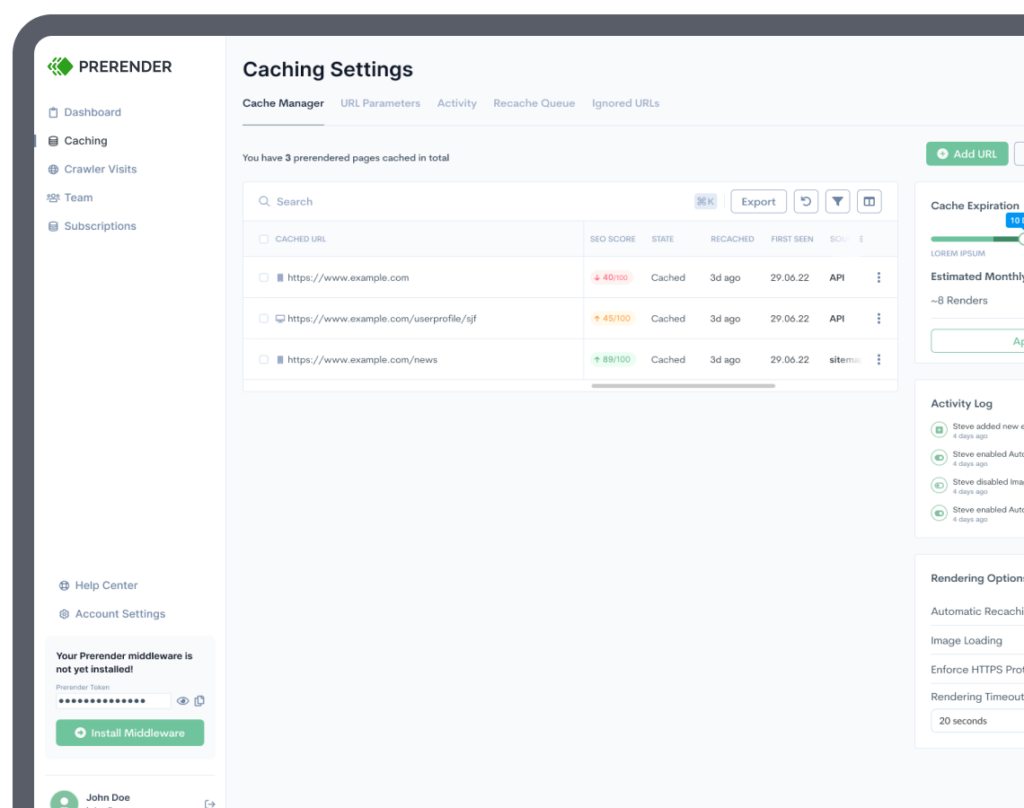

In addition to expertly navigating robots.txt files, pre-rendering with Prerender also lets you produce static HTML for complex JavaScript websites. Now you can allow faster indexation, faster response times, and an overall better user experience.

Watch the video below to see if it’s right for you or try it for free today.

FAQs – Robots.txt Files Best Practices

Here are answers to some common questions about robots.txt files, implementation tips, and their impact on SEO results.

1. Can Robots.txt Block My Site from Appearing in Google Search Results?

While robots.txt prevents crawling, it doesn’t guarantee that pages won’t appear in search results. Google may still index URLs blocked by robots.txt if they’re linked from other pages, though without understanding their content. If you want to prevent indexing for certain pages, use the noindex meta tag instead, and don’t block those pages in robots.txt, since Google must be able to crawl a page to see its noindex tag.

2. How Long Does It Take for Robots.txt Changes to Take Effect?

Google typically caches robots.txt files for up to 24 hours, so changes take effect the next time Google refreshes its copy of the file. If you make a critical change, you can request a recrawl of the file from the robots.txt report in Google Search Console.

3. Should I Block Images Using Robots.txt?

Generally, no. Blocking images can prevent them from appearing in Google Image search and reduce your visual search traffic. Only block images if they contain sensitive information or are not meant for public viewing.

4. What’s the Difference between robots.txt and Meta Robots Tags?

Robots.txt controls crawler access at the server level and prevents crawling, while meta robots tags control indexing at the page level. Meta robots tags are more precise for controlling individual page indexing.

5. Can I Use Robots.txt to Block Specific Search Engines?

Yes! You can specify different rules for different search engines using the User-agent directive. Legitimate search engines like Google, Bing, and Yahoo will respect these directives, but malicious bots may ignore them.

Looking for more technical SEO tips? Here are other articles that may interest you:

- 10 Best Screaming Frog Alternatives (Free and Paid)

- 10 Ways to Accelerate Web Page Indexing and Its SEO Effects

- Caching in JavaScript—How it Affects SEO Performance (for Developers)

- How to Find & Fix Broken Backlinks Before They Destroy SERP Rankings

- Is AJAX Bad for SEO? Not Necessarily. Here’s Why.