Google’s crawlers aren’t the only ones visiting your website anymore. AI crawlers such as OpenAI’s GPTBot, Anthropic’s ClaudeBot, and PerplexityBot are constantly scanning the web to gather fresh information for the models and AI search tools they power.

If your website isn’t optimized for these AI agents, it may never show up in AI-generated search results. Why? Because most AI crawlers don’t execute JavaScript, so any content your pages render client-side can go completely unseen.

That’s where AI crawler optimization comes in: the process of making your website fully discoverable, readable, and interpretable by AI-driven bots and large language models (LLMs), not just traditional search engines.

The good news? Prerender.io makes content optimization for AI crawlers effortless. With Prerender, you can make your JavaScript-powered pages instantly accessible to AI crawlers in just a few clicks. Let’s learn how Prerender.io can make a difference in your site’s AI visibility and AI optimization efforts.

TL;DR: How to Optimize Your Content for AI Crawlers and Search

- Ensure full JavaScript rendering using tools like Prerender.io so AI bots can read dynamic content.

- Improve site crawlability by allowing AI agents (like GPTBot and ClaudeBot) in your robots.txt file.

- Use structured data (Schema.org markup) to label and clarify your key content.

- Adopt progressive enhancement to make sure your content is readable even if scripts fail.

- Audit your site regularly to confirm AI crawlers can access the same content as human users.
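For the robots.txt step, here is a minimal sketch. The rules below are an assumed policy (example.com and /admin/ are placeholders) that explicitly allows GPTBot, ClaudeBot, and PerplexityBot, and the Python snippet uses the standard library’s urllib.robotparser to check how the rules are interpreted before you deploy them. Note that can_fetch expects the crawler’s short token (such as GPTBot), not the full User-Agent header.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt (assumed policy): let the named AI crawlers in everywhere
# except a hypothetical /admin/ area, and keep /admin/ closed to all other bots.
# Disallow comes before Allow because Python's parser applies the first matching
# rule, while Google applies the most specific (longest) match; this order
# gives the same answer under both.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: ClaudeBot
User-agent: PerplexityBot
Disallow: /admin/
Allow: /

User-agent: *
Disallow: /admin/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for bot in ("GPTBot", "ClaudeBot", "PerplexityBot"):
        for path in ("/blog/ai-crawlers", "/admin/settings"):
            print(bot, path, rp.can_fetch(bot, "https://www.example.com" + path))
```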

Understanding the Impact of AI Crawlers on AI Search Results

Understanding how AI crawlers interpret your site is key to improving AI visibility, so your brand can appear in AI-generated answers and summaries. Traditional search crawlers primarily parse HTML and follow links; AI crawlers collect content for large language models, which then understand and contextualize it.

Read more: Understanding Web Crawlers: Traditional vs. OpenAI’s Bots

This allows LLMs like ChatGPT, Claude, Perplexity, and more to grasp the meaning and relevance of content more like a human. They can understand natural language, interpret context, and even make inferences based on the content they encounter. Users get more accurate search results and content recommendations.

More and more users are turning to LLMs as their search engine of choice, and this is having a significant impact on the SEO world: a recent study of Vercel’s network found that AI crawler requests now amount to roughly 28% of Googlebot’s request volume, a share that is only expected to rise.

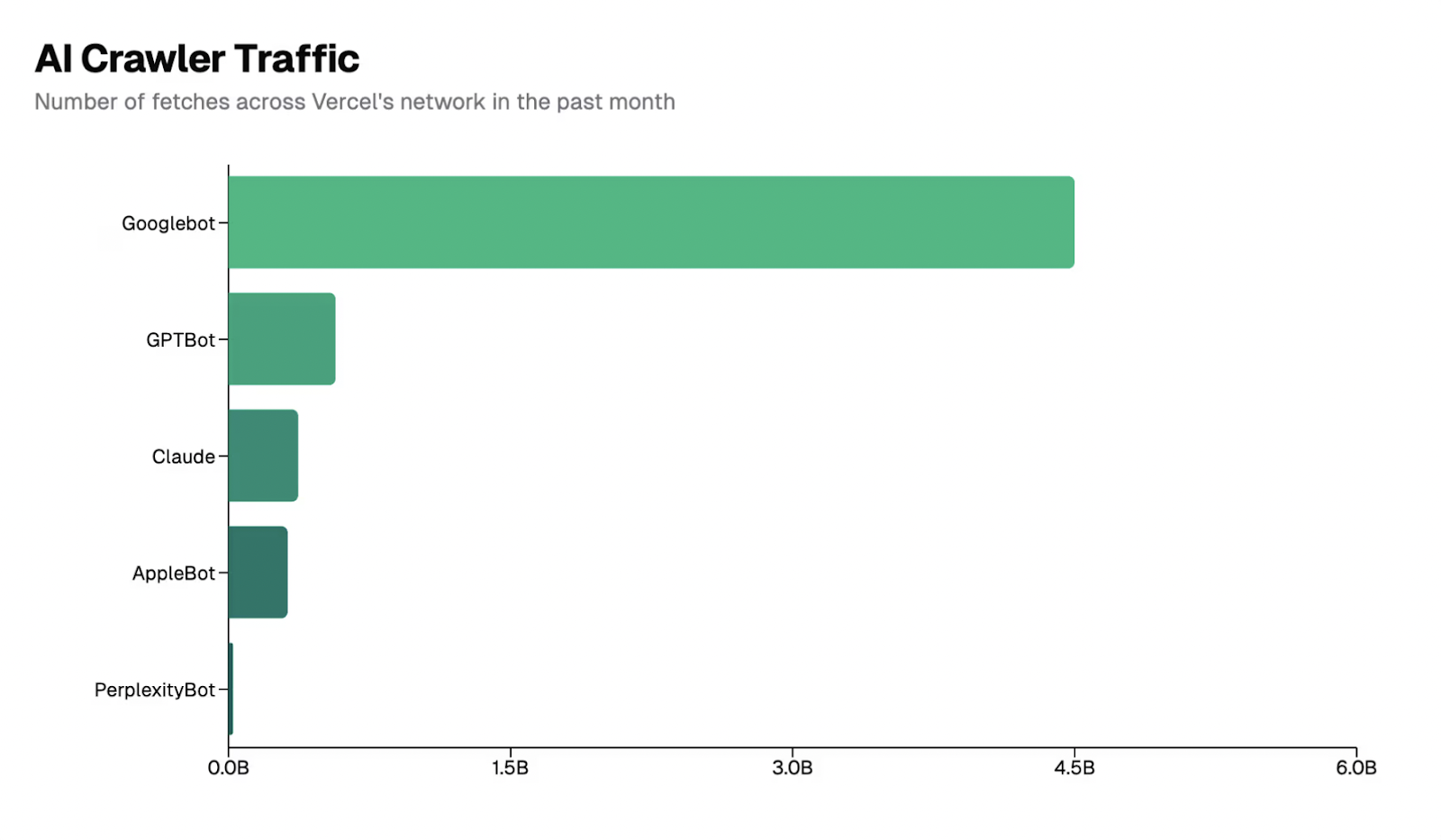

Search Engine Journal cited the study: “OpenAI’s GPTBot and Anthropic’s Claude generate nearly 1 billion requests monthly across Vercel’s network… GPTBot made 569 million requests in the past month, while Claude accounted for 370 million. Additionally, PerplexityBot contributed 24.4 million fetches, and AppleBot added 314 million requests.”

As AI crawlers gain visibility and traffic share, the benefits of preparing your site go far beyond technical compliance.

Why Optimizing for AI Crawlers Matters

Optimizing for AI crawlers ensures your content is seen, enhances your brand authority, strengthens your SEO foundation, and keeps your strategy future-ready. Here’s what you stand to gain:

- Stronger brand authority. When AI tools reliably incorporate your content into their answers, you’re seen as a credible source in your niche. Consistent inclusion in AI responses can bolster brand trust and authority, leading to more traffic, leads, and conversions in the long run.

- Boost to conventional SEO. Many steps you take to accommodate AI bots, such as dynamic rendering, clean HTML content, and structured data, also benefit conventional search engines like Google. This dual-purpose approach can improve your rankings in AI-driven and traditional search results.

- Improved accuracy of AI summaries. AI crawlers don’t just index your page; they interpret and summarize it. By presenting your content in a well-structured, easily parsable format, you help AI tools extract and represent your brand’s information accurately, reducing the chance of misinterpretation or incomplete snippets.

- Future-proofing your content strategy. The rapid rise of AI suggests this technology will play an increasingly significant role in search and content discovery. Preemptive optimization ensures your site remains relevant as AI platforms evolve and become more mainstream.

Related: How to Get Your Website Indexed by ChatGPT and Other AI Search Engines

How to Optimize Your Website for AI Crawlers and AI Agents

1. Adopt Prerender.io – The Best Tool to Optimize Content for AI Crawlers

Prerender.io is a dynamic rendering solution that helps AI crawlers easily access and understand your website content. By ensuring your pages are optimized and fully visible to AI systems, Prerender improves how your site is represented in AI search results—boosting your visibility, accuracy, and chances of being recommended by tools like ChatGPT, Claude, and Perplexity.

To get the most out of Prerender.io, it’s crucial to configure the right AI user agents. A user agent is the name a crawler sends in the User-Agent header of each request to identify itself (for example, GPTBot). By adding AI-related user agents to Prerender, you’re telling it which AI crawlers should receive fully rendered versions of your site. This ensures they can read and index all your content accurately, rather than missing dynamic elements loaded by JavaScript.

Why is this important? Without proper user agent configuration, AI crawlers may only see a blank or partially rendered page, leading to poor visibility in AI-driven search results.
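Conceptually, user-agent matching works like the sketch below: the request’s User-Agent header is checked, case-insensitively, against a list of crawler tokens. The token list and the is_known_crawler helper are illustrative assumptions, not Prerender.io’s actual configuration or code.

```python
from typing import Optional

# Illustrative lowercase user-agent tokens. This is NOT Prerender.io's actual
# list; check each vendor's documentation and your dashboard for the real one.
CRAWLER_TOKENS = (
    "gptbot", "chatgpt-user", "oai-searchbot",  # OpenAI
    "claudebot",                                # Anthropic
    "perplexitybot",                            # Perplexity
    "googlebot", "bingbot", "duckduckbot",      # search engines
    "linkedinbot", "twitterbot",                # social link previews
)


def is_known_crawler(user_agent: Optional[str]) -> bool:
    """Return True if the User-Agent header contains one of the crawler tokens."""
    if not user_agent:
        return False
    ua = user_agent.lower()
    return any(token in ua for token in CRAWLER_TOKENS)


if __name__ == "__main__":
    gptbot_ua = ("Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
                 "GPTBot/1.1; +https://openai.com/gptbot)")
    print(is_known_crawler(gptbot_ua))  # True
```

Because User-Agent headers are easy to spoof, production setups often also verify requests against the IP ranges that crawler operators publish.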

Prerender.io currently supports major AI and search crawlers, including:

- GPTBot (OpenAI, the company behind ChatGPT)

- ClaudeBot (Anthropic’s crawler for Claude)

- PerplexityBot (Perplexity AI search)

- Googlebot

- Bingbot

- DuckDuckBot

- LinkedInBot, Twitterbot, and other social media crawlers

See the complete list of AI user agents/AI crawlers that Prerender.io supports here.

You can easily add or manage these user agents in your Prerender.io dashboard. Once configured, Prerender automatically serves fully rendered pages to each bot, making sure every AI system sees your content exactly as a human visitor would.

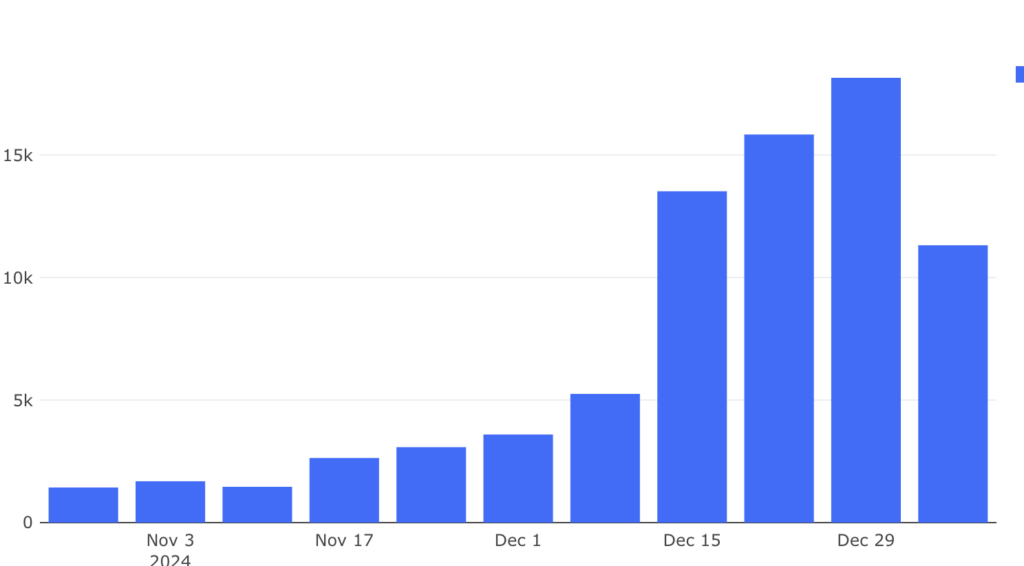

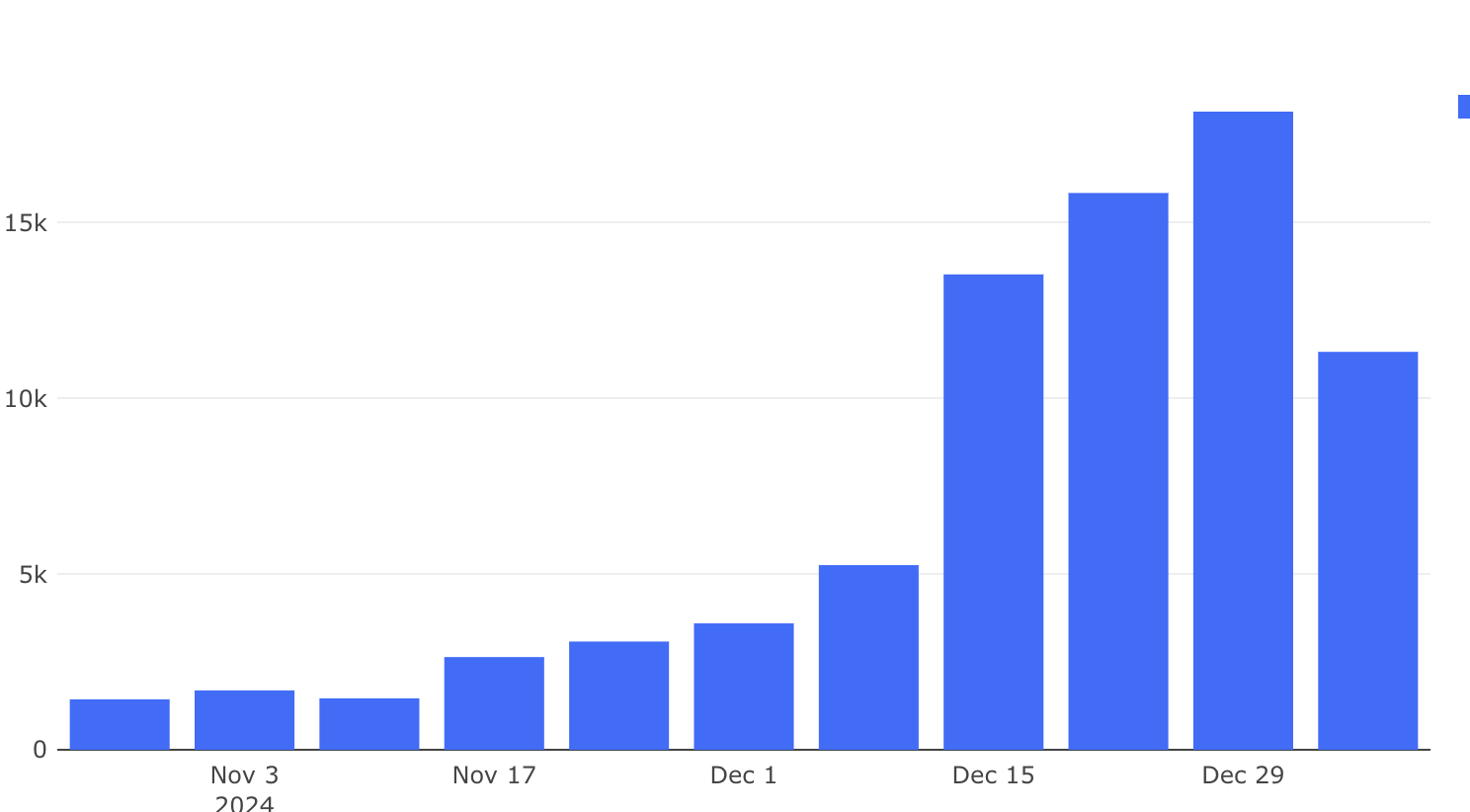

For example, after one customer added the ChatGPT user agent to their Prerender setup, they saw a clear uptick in traffic from AI-driven crawlers.

How Does Prerender.io Work?

Put simply, we convert your dynamic JavaScript pages into static HTML for crawlers to read quickly. Here’s an overview:

- When a crawler visits your site, Prerender intercepts the request.

- It serves a pre-rendered, static HTML version of your page to the crawler.

- This static version contains all the content that would normally be loaded dynamically via JavaScript.
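To make these three steps concrete, here is a minimal sketch of the same pattern as Python WSGI middleware. It is not Prerender.io’s actual integration code: the PrerenderMiddleware class, the token list, and fetch_prerendered (a stand-in for a lookup against a prerender cache or service) are all hypothetical.

```python
from typing import Callable, Iterable, Optional

# Illustrative tokens; a real setup would use the list configured in your dashboard.
CRAWLER_TOKENS = ("gptbot", "chatgpt-user", "claudebot", "perplexitybot", "googlebot", "bingbot")


class PrerenderMiddleware:
    """Hypothetical WSGI middleware: crawlers get static HTML, humans get the JS app."""

    def __init__(self, app, fetch_prerendered: Callable[[str], Optional[str]]):
        self.app = app                              # the normal JavaScript-powered site
        self.fetch_prerendered = fetch_prerendered  # returns cached HTML or None

    def __call__(self, environ, start_response) -> Iterable[bytes]:
        user_agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in user_agent for token in CRAWLER_TOKENS):
            # Step 1: intercept the crawler's request and look up its URL.
            url = environ.get("PATH_INFO", "/")
            if environ.get("QUERY_STRING"):
                url += "?" + environ["QUERY_STRING"]
            html = self.fetch_prerendered(url)
            if html is not None:
                # Steps 2-3: serve static HTML that already contains the JS-loaded content.
                body = html.encode("utf-8")
                start_response("200 OK", [
                    ("Content-Type", "text/html; charset=utf-8"),
                    ("Content-Length", str(len(body))),
                ])
                return [body]
        # Human visitors (and cache misses) get the regular app unchanged.
        return self.app(environ, start_response)


# Usage example with stand-ins for the real app and the prerender cache.
def spa_shell(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b'<div id="root"></div><script src="/app.js"></script>']


PRERENDERED = {"/pricing": "<h1>Pricing</h1>"}
app = PrerenderMiddleware(spa_shell, PRERENDERED.get)
```

Passing the lookup in as a function keeps the routing logic separate from wherever the static HTML is stored, and falling through to the normal app on a cache miss means a missing snapshot degrades to the usual page instead of an error.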

You can also add other user agents to your account, such as ChatGPT-User, as shown in the screenshots above. Here’s a list of some of Prerender’s user agents and how to add them to your account.

How Prerender.io Optimizes Your Website Content for AI Search Visibility

Once Prerender.io is set up, you should see:

- Faster rendering times. Crawlers receive instantly loadable HTML, dramatically reducing rendering time.

- Improved crawl efficiency. More of your pages can be crawled within your allocated crawl budget.

- Better indexing rates. Your content, including dynamically loaded content, becomes visible to every bot you’ve configured.

- Fewer drops in traffic. Poor JS rendering is a lesser-known cause of traffic drops. Clients often come to us after traffic drops caused by site migrations or algorithm updates, and see positive results.

These improvements may sound technical, but they lay the groundwork for AI bots to see all your content and its SEO signals. This way, your content is no longer hiding behind JS, but is easily read and understood by AI crawlers and has a higher chance of being recommended on AI search results. This is why Prerender.io is one of the best tools to optimize content for AI crawlers.

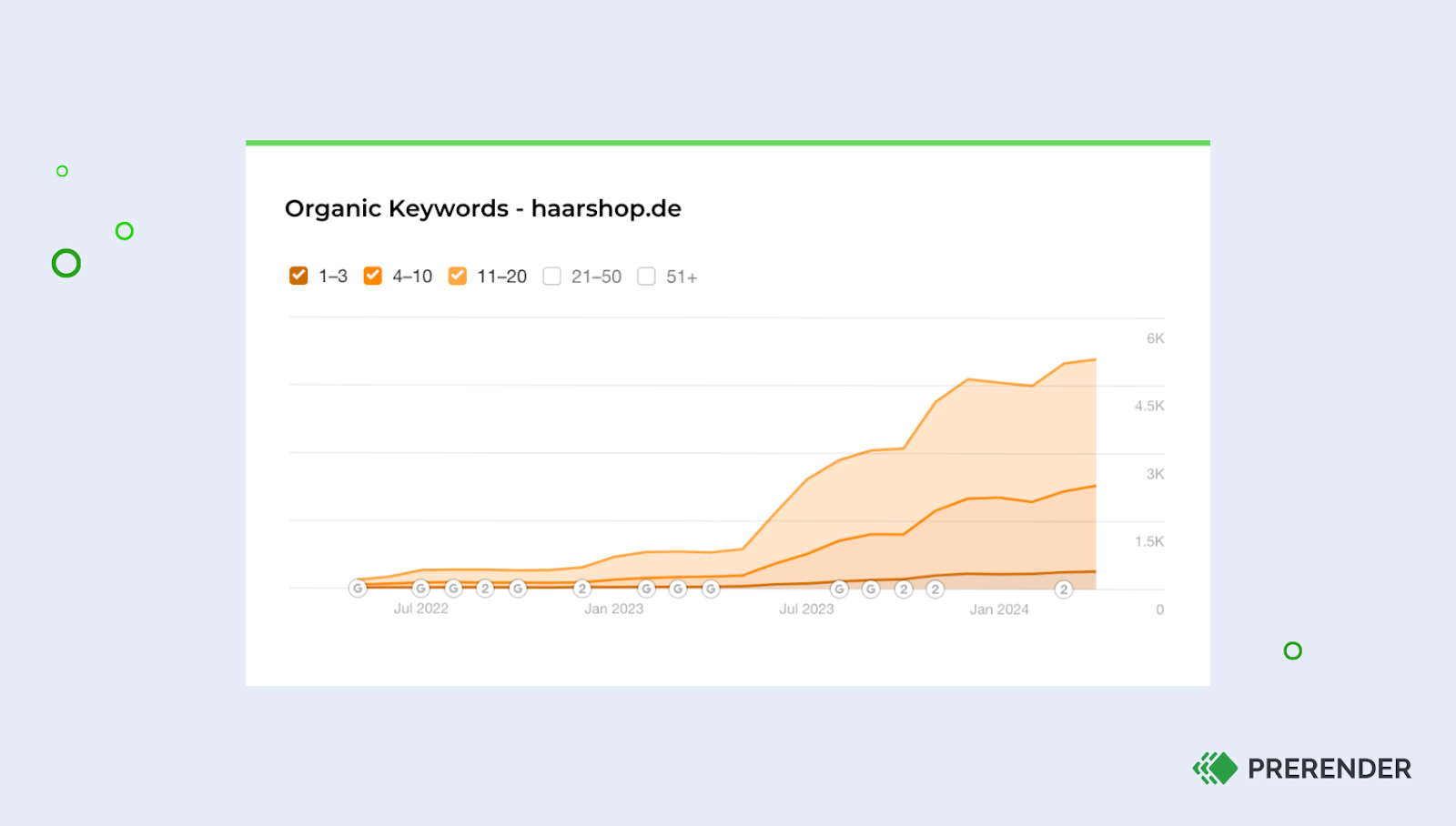

For example, here are the organic traffic levels for the Dutch webshop Haarshop after installing Prerender. Read the case study to learn more.

What Sites Can Benefit From Prerender.io’s AI Optimization Solution?

There are a few types of websites that can benefit most from Prerender.

- Large sites (1M+ unique pages) with content that changes moderately often, such as once per week. An example might be an enterprise ecommerce store.

- Medium to large sites (10,000+ unique pages) with rapidly changing content, i.e., content that changes on a daily basis. Examples include: job boards, ecommerce stores, gaming and betting platforms, and more.

- Sites with indexing challenges. This is especially true if Google Search Console (GSC) classifies a large portion of your URLs as “Discovered – currently not indexed.” Learn more about how to fix “Discovered – currently not indexed” errors here.

- Sites with slow rendering problems. Google dislikes pages that take longer than 20 to 30 seconds to render. If your average rendering time is between 30 seconds and one minute, Prerender.io is a fantastic option to improve your speeds.

In addition to adopting Prerender.io, the following AI SEO techniques can help your content get featured in AI search results.

2. Check What AI Crawlers Can Actually See on Your Site

Conduct a thorough technical SEO and AI SEO audit to confirm that both human users and AI crawlers can easily find and read the content you publish.

Start by examining which parts of your site’s text and images are presented in plain HTML versus those served exclusively through JavaScript. Remember, many AI-based tools and AI search engines aren’t able to fully load or interpret JavaScript. Consequently, some of the key pieces of your content could remain hidden from AI crawlers and bots.

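If you’re unsure about how your site appears to crawlers, Google Search Console’s URL Inspection tool is a good starting point. Keep in mind, though, that Googlebot renders JavaScript while many AI crawlers don’t, so content Google can see may still be hidden from AI crawlers. Compare the rendered page with the raw HTML source to spot the gap.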

You can also temporarily disable JavaScript in your browser or install a plugin that blocks it, then navigate through your site’s pages. Whatever disappears when JavaScript is off is likely invisible to AI crawlers as well, giving you a clear checklist of what needs fixing.
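If you’d rather automate the JavaScript-off check, the sketch below approximates it with Python’s standard library: it reads a page’s HTML as served, ignores script, style, and template contents (which a crawler that doesn’t execute JavaScript never turns into visible text), and reports which key phrases are missing. The function names and sample pages are hypothetical, and the result only approximates what any specific AI crawler sees.

```python
from html.parser import HTMLParser
from typing import Iterable, List
from urllib.request import Request, urlopen


class RawTextExtractor(HTMLParser):
    """Collects text present in raw HTML, skipping code that only matters with JavaScript."""

    SKIPPED_TAGS = {"script", "style", "template"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_phrases(raw_html: str, phrases: Iterable[str]) -> List[str]:
    """Return the phrases that do NOT appear in the page's raw (unrendered) text."""
    extractor = RawTextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    text = " ".join(extractor.chunks).lower()
    return [p for p in phrases if p.lower() not in text]


def fetch_raw_html(url: str, user_agent: str = "Mozilla/5.0 (compatible; raw-html-audit)") -> str:
    """Download a page's HTML as served, without executing any JavaScript."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

For a live page, you might call `missing_phrases(fetch_raw_html("https://www.example.com/products/blue-widget"), ["Blue Widget", "Add to cart"])`, where the URL and phrases are placeholders for your own pages and key content.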

Want clear insights into your site’s performance? Try this free site audit tool for results in minutes.

3. Use Progressive Enhancement to Keep Content Visible to AI

Progressive enhancement is all about building your website in layers to ensure it remains fully functional, even if JavaScript doesn’t load. You start by serving up the most important content and navigation in plain HTML and CSS, so visitors and AI crawlers alike can instantly see what matters most.

Only after you’ve established that solid foundation do you add JavaScript for advanced interactions and visuals. This layered approach ensures that if a visitor’s browser hits a script error, or an AI crawler doesn’t run JavaScript at all, your essential information won’t disappear. Ultimately, you end up with a more reliable, accessible, and future-proof site.
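Here is a minimal sketch of that layering for a server-rendered product page, assuming Python on the server. The product name, description, price, and a working add-to-cart form are all in the initial HTML, and the deferred script only adds enhancements on top. The function, its fields, the /cart endpoint, and /static/enhance.js are hypothetical placeholders.

```python
from html import escape


def render_product_page(sku: str, name: str, description: str, price: str) -> str:
    """Server-render the essentials as plain HTML; JavaScript is only an add-on layer."""
    return f"""<!DOCTYPE html>
<html lang="en">
<head><title>{escape(name)}</title></head>
<body>
  <main>
    <!-- Base layer: readable by any visitor or crawler, with or without JavaScript. -->
    <h1>{escape(name)}</h1>
    <p>{escape(description)}</p>
    <p class="price">{escape(price)}</p>
    <form action="/cart" method="post">
      <input type="hidden" name="sku" value="{escape(sku)}">
      <button type="submit">Add to cart</button>
    </form>
  </main>
  <!-- Enhancement layer: if this fails or never runs, the content above still works. -->
  <script src="/static/enhance.js" defer></script>
</body>
</html>"""
```

Because the form submits with a normal POST, adding to the cart still works if the script never loads; the script can intercept the form for a smoother experience when it does.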

4. Add Structured Data to Help AI Crawlers Understand Your Content

Think of structured data as a cheat sheet that helps AI search engine crawlers quickly grasp the main points of your website. When you add Schema.org markup, such as tags for products, reviews, or events, it’s like placing helpful signs around your content to show bots exactly what’s what.

This not only boosts your odds of showing up in those sleek “rich results” but also helps AI-driven platforms like ChatGPT and Perplexity correctly interpret and present your information.

Even though structured data was originally designed with search engines in mind, it’s rapidly becoming a must-have for AI crawlers, too. AI crawlers scan pages looking for clear, well-labeled details that they can weave into answers or summaries. By embedding structured data right in your HTML, you make it far less likely that your key points get lost in the noise, and you give your site the best shot at standing out in this new age of AI-powered search.
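A common way to add structured data is a JSON-LD script in the page’s HTML, rendered on the server so crawlers that don’t run JavaScript still receive it. The sketch below builds a minimal Schema.org Product with an Offer. The helper name and sample values are assumptions, and a real product page would usually include more properties, such as image and brand.

```python
import json
from typing import Optional


def product_json_ld(name: str, description: str, price: str,
                    currency: str = "USD", url: Optional[str] = None) -> str:
    """Build a Schema.org Product (with an Offer) as a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    if url:
        data["url"] = url
    # Escape "</" so a value like "</script>" can't close the tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(product_json_ld("Blue Widget", "A sturdy widget.", "19.99"))
```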

Why Do AI Crawlers Struggle to Render JavaScript?

Even though AI crawlers feed advanced language models, the crawlers themselves face technical limitations when it comes to executing and rendering JavaScript, which can leave your content partially or entirely invisible.

“AI crawlers’ technology is not as advanced as search engine bots crawlers yet. If you want to show up in AI chatbots/ LLMS, it’s important that your JavaScript can be seen in the plain text (HTML source) of a page. Otherwise, your content may not be seen,” says Peter Rota, an industry-leading SEO consultant.

These rendering challenges make AI crawler optimization a vital part of your technical SEO strategy. Here are a few reasons why and how they struggle to render JS:

1. Static vs. Dynamic Content

JavaScript often generates content dynamically on the client-side, which occurs after the initial HTML has loaded. This means that when an AI crawler reads the page, it may only see the initial HTML structure without the rich, dynamic content that JavaScript would typically load for a human user. Depending on your website, this can lead to a major discrepancy in what content AI is pulling from your site versus what human users see.

Related: Traditional Search vs. AI-Powered Search Explained

2. Execution Environment

AI crawlers typically don’t have a full browser environment to execute JavaScript. They lack the JavaScript engine and DOM (Document Object Model) that browsers use to render pages. Without these components, the crawler can’t process and render JavaScript in the same way a web browser would. This limitation is particularly problematic for websites that rely heavily on JavaScript frameworks like React, Angular, or Vue.js, where much of the content and functionality is generated through JavaScript. As a result, dynamic content may remain hidden from AI crawlers.

3. Asynchronous Loading

Many JavaScript applications load content asynchronously, which can be problematic for crawlers operating on a set timeframe for each page. Crawlers might move on to the next page before all asynchronous content has loaded, missing critical information. This is particularly challenging if your website uses infinite scrolling or lazy loading techniques.
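A widely used mitigation for infinite scroll and lazy-loaded lists (a general pattern, not something specific to Prerender.io) is to back the scrolling list with ordinary paginated URLs linked through plain `<a href>` tags, so crawlers that move on early or never run JavaScript can still reach every item. A minimal sketch; the helper, the ?page= parameter, and the page size of 20 are hypothetical.

```python
from html import escape
from math import ceil
from urllib.parse import urlencode


def pagination_links(base_path: str, page: int, total_items: int, per_page: int = 20) -> str:
    """Plain <a href> links to the neighboring pages of a list.

    Infinite scroll can still run for human visitors; these links give crawlers
    that never execute JavaScript a path to every page of items.
    """
    last_page = max(1, ceil(total_items / per_page))
    links = []
    if page > 1:
        links.append(f'<a href="{escape(base_path)}?{urlencode({"page": page - 1})}">Previous</a>')
    if page < last_page:
        links.append(f'<a href="{escape(base_path)}?{urlencode({"page": page + 1})}">Next</a>')
    return '<nav aria-label="Pagination">' + " ".join(links) + "</nav>"
```

For this to help, each ?page= URL must return its items in the server-rendered HTML rather than loading them with JavaScript.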

4. Single Page Applications (SPAs)

AI crawlers may struggle with the URL structure of SPAs, where content changes without corresponding URL changes. In SPAs, navigation often occurs without page reloads, using JavaScript to update the content. This can confuse crawlers, which traditionally rely on distinct URLs to identify different pages and content. As a result, much of an SPA’s content might be invisible to AI crawlers, appearing as a single page with limited content.
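A common way to address this is to give each SPA view its own path-based URL (History API routing rather than #fragment URLs, since fragments are never sent to the server) and list those URLs in an XML sitemap so crawlers can find them. A minimal sketch using Python’s standard library; the domain and routes are placeholders.

```python
from typing import Iterable
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(base_url: str, routes: Iterable[str]) -> str:
    """List each SPA view under its own crawlable URL in an XML sitemap."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for route in routes:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = base_url.rstrip("/") + route
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical routes; each must also return its view when requested directly.
    print(build_sitemap("https://www.example.com", ["/", "/products/blue-widget", "/about"]))
```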

Related: How to Optimize Single-Page Applications (SPAs) for SEO

5. Security Limitations

For security reasons, AI crawlers are often restricted in their ability to execute external scripts, which is a common feature in many JavaScript-heavy sites. This limitation is designed to protect against potential security threats, but it also means that content loaded from external sources or generated by third-party scripts may not be visible to the crawler. This can be particularly problematic for sites that rely on external APIs or services to generate dynamic content.

Related: How to Manage Dynamic Content

The Hidden Cost of Poor AI Crawlability to Your Traffic

While many search engines already struggle to render JavaScript, AI crawlers’ limitations may ultimately have a bigger impact: any content loaded dynamically through JavaScript may be completely invisible to them.

This can have a hard-hitting effect on your SEO and your bottom line. For example, Ahrefs stated that they’ve gained 14,000+ customers from ChatGPT alone. If your content isn’t being featured in LLMs, this could wreak havoc on your site’s discoverability.

Some websites are hit harder than others, particularly those that struggle with an inefficient crawl budget. Factors include:

- Site size. Larger sites can face crawling inefficiencies as more resources are required to crawl them.

- Sites with frequently changing content. If your content is updated on a daily—or even weekly—basis, you’ll burn through your crawl budget more quickly and your pages may not be crawled as quickly as you’d like.

- Sites with slow rendering speeds. Slow or error-prone sites may see reduced crawling by certain bots, and therefore, could be impacted by poor JS rendering from AI crawlers, too.
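To gauge how often each crawler actually reaches your pages, you can count crawler requests in your server access logs. A minimal sketch, assuming logs in the common Apache/Nginx “combined” format; the token list is illustrative, and because User-Agent strings can be spoofed, treat the counts as approximate.

```python
import re
from collections import Counter
from typing import Iterable

# Illustrative crawler tokens; extend with the crawlers you care about.
BOT_TOKENS = ("GPTBot", "ChatGPT-User", "ClaudeBot", "PerplexityBot", "Googlebot", "bingbot")

# Request line, status, size, referrer, then the quoted user agent of a "combined" log line.
COMBINED_LOG = re.compile(r'"(?:GET|HEAD|POST) \S+ [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"')


def crawler_hits(log_lines: Iterable[str]) -> Counter:
    """Count requests per crawler token in access-log lines."""
    counts: Counter = Counter()
    for line in log_lines:
        match = COMBINED_LOG.search(line)
        if not match:
            continue  # skip malformed lines and other log formats
        ua = match.group("ua").lower()
        for token in BOT_TOKENS:
            if token.lower() in ua:
                counts[token] += 1
                break
    return counts
```

To run it on a real log, open the file (for example, a hypothetical /var/log/nginx/access.log) and pass the file object to crawler_hits, then call most_common() on the result.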

You can overcome all these limitations with a dynamic rendering solution like Prerender.io: the easiest way to make your JavaScript content visible to both Google and AI crawlers.

Use Prerender.io to Optimize Your Content for AI Crawlers

Ready to see if Prerender.io is right for you? With a 4.7-star Capterra rating and a 100,000+ user base, Prerender helps JavaScript sites around the world improve their online visibility. Create your free account today.

FAQs: AI Crawler Optimization Best Practices

Is Prerender.io Easy To Integrate?

Yes! Integration is simple, and you should be fully integrated within a few hours. Learn more.

Can I Use Prerender.io With Other SEO Techniques?

Absolutely, and you should! Prerender works best when your SEO is in check. Continue optimizing your SEO strategy while using Prerender on the side. Prerender addresses the technical aspect of making your content visible to crawlers. In tandem with effective SEO strategies, you should see strong SEO improvements.

How Does Prerender.io Affect My Site’s User Experience?

Prerender only serves pre-rendered pages to crawlers and bots, not users. Regular users will continue to see and interact with your dynamic, JavaScript-powered site as usual, but behind the scenes, Prerender improves your site’s visibility to search engines and AI crawlers.

How Does Prerender.io Impact My Website’s Loading Speed?

For crawlers, it improves the loading speed significantly by serving pre-rendered HTML content. This can indirectly benefit your SEO, as page speed is a crucial ranking factor for many search engines.

Does Prerender.io Benefit All Websites?

While Prerender helps most JavaScript websites, it’s particularly helpful for:

- Single Page Applications (SPAs)

- Websites with quickly-changing or dynamic content

- E-commerce sites with frequently changing inventory

- News sites or blogs with regular updates

It may not be necessary for simple static websites or those already using server-side rendering. However, even these sites might benefit if they’re looking to optimize for AI crawlers specifically.

What Is the Best Tool to Optimize Content for AI Crawlers?

The best tool to optimize content for AI crawlers is Prerender.io. Prerender makes your JavaScript-heavy pages fully accessible to AI crawlers like ChatGPT, Perplexity, and Claude by serving pre-rendered versions of your content. This ensures AI systems can read, index, and recommend your site in AI-driven search results. Learn more about how Prerender.io improves your AI visibility.