You can pour your heart and soul into crafting compelling content, but if Google doesn’t index your pages, that content won’t show up on SERPs. One common SEO issue that can cause this is the ‘discovered – currently not indexed’ Google indexing status.

While the ‘discovered – currently not indexed’ status is not necessarily an error, it prevents your pages from reaching their intended audience and can hurt your site’s performance in search engines.

In this Google indexing guide, we’ll dive deep into what ‘discovered – currently not indexed’ means, why it happens, whether it’s bad for SEO and your site’s visibility, and the actionable steps you can take to fix (and avoid) this crawling and indexing hurdle.

What is ‘Discovered – Currently Not Indexed’?

Before we jump into the steps for solving this Google indexing error, let’s break down what ‘discovered – currently not indexed’ means.

The ‘discovered – currently not indexed’ status doesn’t necessarily mean something is broken. It simply signifies that Google has found your page’s URL but hasn’t crawled it yet. For various reasons (for example, Google expected crawling to overload your server, or it deprioritized the URL), Google has postponed crawling, and therefore indexing, that page for now.

So, should you be worried about the ‘discovered but not indexed’ status? Essentially, it’s Google saying “we’ll get back to you.” In the short term, it generally won’t hurt your SEO and traffic. That said, you shouldn’t ignore it when:

- Key pages and content are not being indexed. This can lead to a decrease in traffic and sales.

- The number of ‘discovered but not indexed’ pages in Google Search Console keeps growing. This suggests an underlying problem with your site’s crawlability.

- The status has persisted for a long time. This may indicate that Google has trouble accessing your content.

You can check whether your URLs have ‘discovered – currently not indexed’ indexing errors through the Google Search Console coverage report. We’ll discuss how to find this Google indexing error later.

Related: Learn 10 smart ways to accelerate page indexing.

‘Discovered – Currently Not Indexed’ vs. ‘Crawled – Currently Not Indexed’: What’s the Difference?

Another frequent challenge in search engine indexing is ‘crawled – currently not indexed.’ Let’s break down how this differs from the ‘discovered – currently not indexed’ error.

The ‘discovered – currently not indexed’ status signifies that Google has found your page but hasn’t visited it to analyze its content. On the other hand, ‘crawled – currently not indexed’ means that Google has crawled your page: its crawlers have seen and evaluated the content. However, for some reason, Google has chosen not to include it in its search index yet, and it may never index it at all.

Interestingly, Google Search Console’s help documentation states that there’s no need to resubmit URLs with a ‘crawled – currently not indexed’ status for crawling. In contrast, once you’ve fixed the underlying cause of a ‘discovered – currently not indexed’ status, it’s worth resubmitting the affected URLs for indexing.

This blog tells you more about the ‘crawled – currently not indexed’ indexing issue and how to solve it.

How to Solve ‘Discovered – Currently Not Indexed’ Google Indexing Errors

Now, let’s look at a combination of technical SEO fixes and content optimization strategies you can use to keep Google happy and ensure your content gets indexed quickly and accurately.

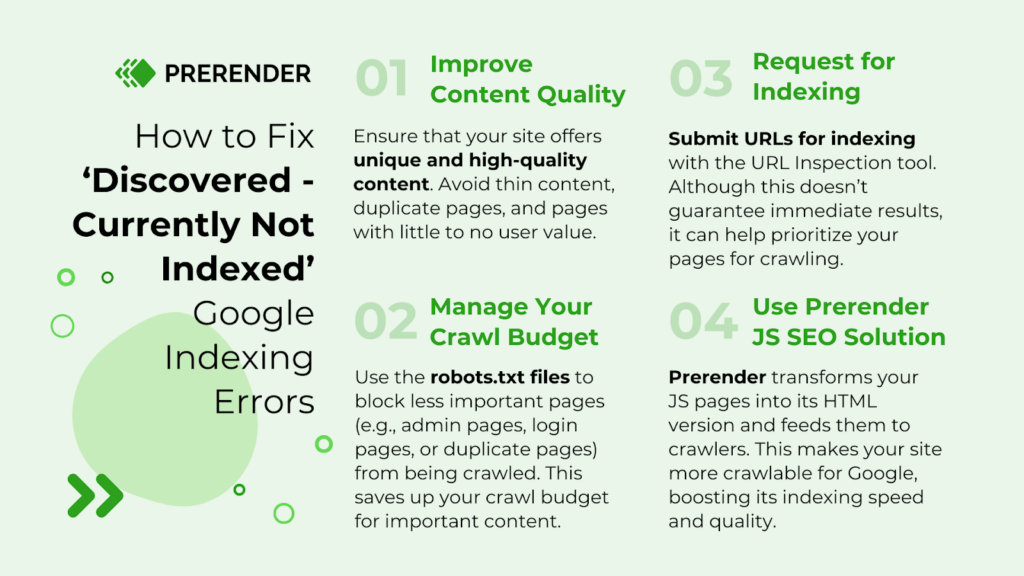

1. Improve Content Quality

First, you must ensure your content meets Google’s standards. Invest in good content so that all pages on your site offer valuable, unique, and high-quality content. You should also avoid thin content, duplicate pages, and pages with little to no user value. Here is a guide to understanding how Google defines quality content worth indexing.

If your site already contains a lot of content, conduct regular content audits to identify and improve low-performing pages. Screaming Frog or a similar tool can help you perform thorough SEO audits.

After you find low-quality content, consider merging or deleting those pages, and apply redirects where appropriate so that you don’t lose link equity and traffic. Visit this extensive guide to learn how to find and fix duplicate content properly.
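If you export page text from a crawler such as Screaming Frog, a quick first pass for exact duplicates is to fingerprint each page’s normalized text. The sketch below is a minimal illustration, assuming you already have a mapping of URL to extracted body text; the function names and sample URLs are hypothetical, and it only catches pages that are identical after case and whitespace normalization, not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """SHA-256 of the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized body text is identical.

    `pages` maps URL -> extracted body text (for example, from a crawler export).
    Only groups containing two or more URLs are returned.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical example: a filtered URL that repeats a product page's text.
pages = {
    "https://example.com/shoes?color=red": "Red running shoes. Lightweight and durable.",
    "https://example.com/shoes/red": "Red running   shoes. lightweight and durable.",
    "https://example.com/about": "About our store.",
}
print(find_exact_duplicates(pages))
# [['https://example.com/shoes/red', 'https://example.com/shoes?color=red']]
```

Each group is a candidate for merging, with a redirect (or a canonical tag) pointing the extra URLs at the version you keep. Catching near-duplicates needs a fuzzier technique, such as shingling or MinHash, rather than exact hashing.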

2. Manage Your Crawl Budget

Managing your crawl budget efficiently helps ensure that your most important pages are crawled and indexed. Use Google Search Console (for example, the Crawl stats report) to track which pages are being crawled and spot any alarming patterns. This data will help you pinpoint where your crawl budget is being wasted so you can redirect it to the content that matters most to your bottom line.
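Search Console’s Crawl stats report shows aggregate trends, while your server access logs can show exactly where Googlebot spends its requests. Below is a rough sketch, assuming logs in the common Apache/Nginx “combined” format; the regex and the googlebot_hits_by_section name are illustrative, not part of any tool. It matches on the user-agent string only, which can be spoofed, so verify important findings with a reverse DNS lookup, as Google recommends.

```python
import re
from collections import Counter
from typing import Iterable
from urllib.parse import urlsplit

# Combined log format: IP, identity, user, [time], "request", status, bytes, "referer", "user agent"
COMBINED_LOG = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] '
    r'"(?P<method>\S+) (?P<target>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_by_section(lines: Iterable[str]) -> Counter:
    """Count requests whose user agent mentions Googlebot, grouped by first path segment."""
    hits: Counter = Counter()
    for line in lines:
        match = COMBINED_LOG.match(line)
        # Skip malformed lines and anything that isn't (claiming to be) Googlebot.
        if not match or "googlebot" not in match.group("agent").lower():
            continue
        # Drop the query string so /products/x?color=red counts toward /products.
        path = urlsplit(match.group("target")).path
        first_segment = path.strip("/").split("/")[0]
        hits["/" + first_segment if first_segment else "/"] += 1
    return hits
```

You might call it as `googlebot_hits_by_section(open("access.log")).most_common(10)`. If a low-value section (say, faceted filter URLs) dominates the counts while your key pages barely appear, your crawl budget is likely being spent in the wrong places.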

You can also use the robots.txt file to block less important pages (such as admin pages, login pages, or duplicate pages) from being crawled, freeing up more of your crawl budget for important content.
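Before deploying robots.txt changes, it’s worth checking that your rules block what you intend and nothing more. Here’s a minimal sketch using Python’s standard-library urllib.robotparser; the rules, URLs, and helper names are hypothetical examples. Note that this parser does plain prefix matching and doesn’t implement Google’s `*` and `$` wildcard extensions, so test wildcard rules with a parser that follows Google’s matching rules, such as Google’s open-source robots.txt library.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of admin, login, and cart pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login
Disallow: /cart
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def check_urls(parser: RobotFileParser, urls: list[str], agent: str = "Googlebot") -> dict[str, bool]:
    """Map each URL to True if `agent` may crawl it under the parsed rules."""
    return {url: parser.can_fetch(agent, url) for url in urls}


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for url, allowed in check_urls(rules, [
        "https://example.com/products/red-shoes",
        "https://example.com/admin/settings",
        "https://example.com/login-help",
    ]).items():
        print("allowed" if allowed else "blocked", url)
```

The last URL comes back blocked because `Disallow: /login` matches any path that starts with `/login`, a reminder that a short rule can block more than you expect.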

This ‘Robots.txt Best Practices for Ecommerce SEO’ guide will walk you through how to properly set up robots.txt files for your online store.

3. Request Indexing

If you’ve addressed the underlying issues and want to expedite the indexing process, you can manually request indexing through Google Search Console. Use the URL Inspection tool to submit your URLs for indexing.

While this doesn’t guarantee immediate indexing, it can help prioritize your pages for crawling.

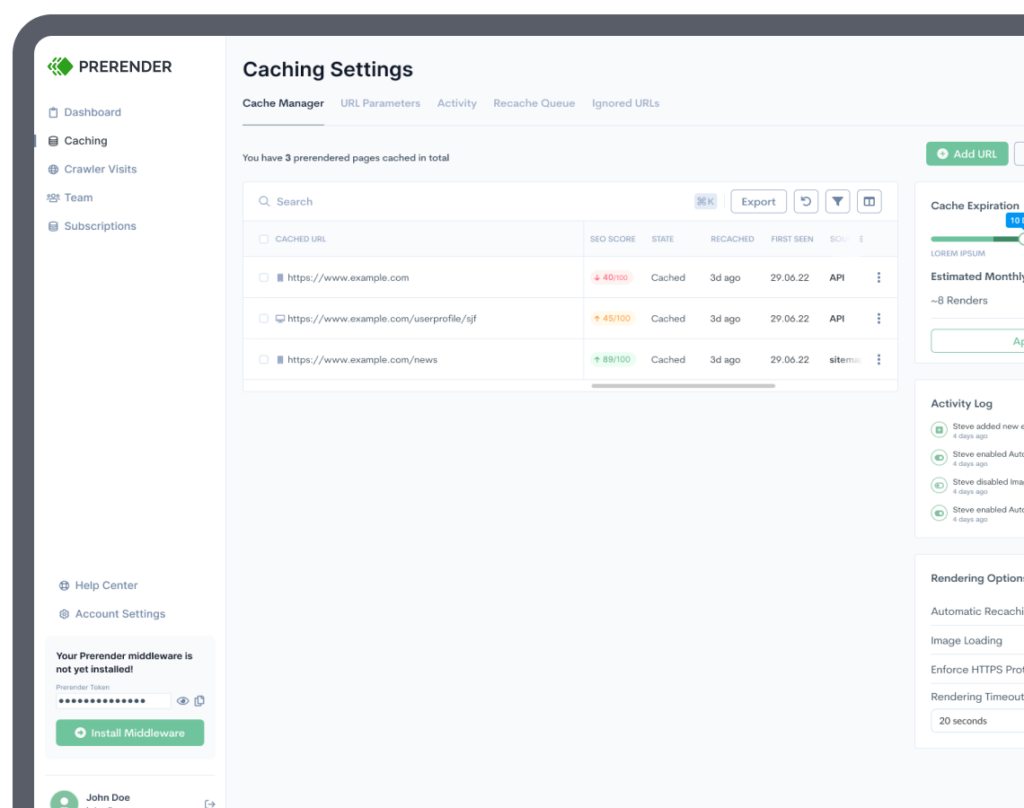

4. Use Prerender.io to Optimize SEO for JavaScript Content

JavaScript-heavy pages can be a double-edged sword for SEO, so you need to take proactive steps. Techniques like minifying CSS and reducing JavaScript file size can help, but they often require ongoing manual effort. Another option is server-side rendering (SSR), which can be resource-intensive and costly, putting it out of reach for many websites. A more effective way to solve JavaScript indexing problems is to pre-render JavaScript with a dynamic rendering tool like Prerender.io.

Prerender.io generates static HTML snapshots of your JavaScript-heavy pages. These snapshots are then served to search engines instead of the original JavaScript versions, giving you the following SEO and web performance benefits:

- Optimized crawl budget: Prerender.io eliminates the need for Googlebot to spend time and resources rendering complex JS content, saving you plenty of crawl budget.

- Reduced indexing errors or timeouts: Prerender.io alleviates the burden on Googlebot, allowing it to crawl and index your JS website more efficiently.

- Improved crawling and indexing performance: Prerender.io can help your JavaScript site achieve 300x better crawling and 260% faster indexing, leading to 80% more traffic.

- 100% indexed content: Prerender.io ensures all the dynamically loaded JS content is readily available for Googlebot, minimizing partial-crawl or partial-index problems.

In summary, Prerender.io addresses several factors contributing to the ‘discovered – currently not indexed’ Google indexing error by making your website more accessible and crawlable for search engines, regardless of the complexity of your JavaScript elements.
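To make the idea concrete, here’s a minimal sketch of the routing decision behind dynamic rendering: requests from known crawlers get a pre-rendered HTML snapshot, while human visitors get the regular JavaScript app. This is not Prerender.io’s actual middleware (Prerender.io provides its own integrations); the bot token list, the static-file extensions, and the render_snapshot / serve_app callables are hypothetical placeholders. Whatever you serve to bots should contain the same content users see, otherwise it risks being treated as cloaking.

```python
import posixpath
from typing import Callable
from urllib.parse import urlsplit

# Hypothetical crawler tokens (matched case-insensitively against the User-Agent header).
BOT_TOKENS = ("googlebot", "bingbot", "gptbot", "claudebot", "perplexitybot")

# Static assets never need a pre-rendered snapshot.
STATIC_EXTENSIONS = {
    ".js", ".css", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp",
    ".ico", ".woff", ".woff2", ".json", ".xml", ".txt",
}


def is_bot(user_agent: str) -> bool:
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def should_serve_snapshot(method: str, url: str, user_agent: str) -> bool:
    """Serve a snapshot only for GET requests from crawlers to HTML pages."""
    if method.upper() != "GET":
        return False
    extension = posixpath.splitext(urlsplit(url).path)[1].lower()
    if extension in STATIC_EXTENSIONS:
        return False
    return is_bot(user_agent)


def handle_request(
    method: str,
    url: str,
    user_agent: str,
    render_snapshot: Callable[[str], str],
    serve_app: Callable[[str], str],
) -> str:
    """Route crawlers to pre-rendered HTML and everyone else to the JavaScript app."""
    if should_serve_snapshot(method, url, user_agent):
        return render_snapshot(url)
    return serve_app(url)
```

In a real setup, render_snapshot would return cached HTML from your pre-rendering service and serve_app would return your usual app shell; keeping the decision in one small function makes it easy to test which requests get which version.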

The Impact of ‘Discovered – Currently Not Indexed’ on Your Site’s SEO and Visibility

While the ‘discovered – currently not indexed’ content might be indexed soon, waiting for it to happen can negatively impact your website’s SEO and brand performance in the following ways:

- Reduced organic traffic

The primary goal of SEO is to get organic traffic from search engines. When your pages aren’t indexed, they simply don’t appear in search results. This can translate to a significant drop in website visitors and potential leads.

- Fewer conversions

Reduced traffic means a smaller pool of potential customers interacting with your content. As a result, the number of conversions you achieve (e.g., sales and signups) also drops.

- Brand awareness lag

If your target audience can’t find your content organically, it hinders brand awareness. You miss out on the opportunity to establish yourself as a thought leader in your niche.

Leaving pages in the ‘discovered – currently not indexed’ status for a long time can affect your site’s overall authority and ability to rank for competitive keywords. Additionally, if many pages on your site are stuck in this status, it often points to underlying technical issues, such as poor site structure, server performance problems, or content quality issues.

Why is My Page ‘Discovered but Not Indexed’?

Several factors can contribute to Google deciding not to index your pages despite discovering them. If you’re struggling with this Google indexing delay, here are some common causes.

- Crawl budget issues

Google assigns a crawl budget to every website. This budget determines the number of pages Google crawls and attempts to index within a certain timeframe. If your website has many pages, especially dynamically generated content, Google might prioritize crawling more established pages and postpone indexing the newer ones.

Need a refresher about crawl budget and how to optimize it for your JavaScript website? Download our free crawl budget guide here.

- Content quality concerns

Google prioritizes high-quality content that provides value to users. If your content is thin, poorly written, or lacks originality, Google may not deem it worth indexing.

- JavaScript (JS) rendering issues

Many modern websites rely heavily on JavaScript to create interactive, dynamic content. However, Google’s crawlers often struggle to fully render and understand JS-generated content, resulting in ‘missing content.’ This happens when content is not crawled or indexed at all (or is only partially crawled or indexed) because of resource limitations or pages that load only partially.

- Slow server response times (SRT)

Google aims to crawl without degrading your site’s performance for real users. If your website takes too long to respond to Google’s crawl requests, Google may treat it as overloaded and reschedule crawling and indexing to avoid straining your server, leaving your pages in the ‘discovered – currently not indexed’ status.

- Technical SEO issues

Technical SEO problems such as incorrect use of robots.txt, meta robots tags, or canonical tags can prevent Google from indexing your pages properly. If Googlebot encounters a ‘noindex’ directive, the page won’t be indexed; if a page is blocked by robots.txt, Googlebot can’t crawl it to read its content.
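A quick way to spot several of these causes is to look at the raw HTML your server returns, before any JavaScript runs. The sketch below uses Python’s built-in html.parser to report a noindex directive (from a meta robots or googlebot tag, or an X-Robots-Tag header), the canonical URL, and how much text exists outside script and style tags. A near-zero word count on a JS-heavy page suggests its content only appears after rendering. The class, function, and field names are hypothetical, and the sketch neither fetches pages nor executes JavaScript.

```python
from dataclasses import dataclass
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class _RawHTMLScanner(HTMLParser):
    """Collects robots directives, the canonical link, and text outside <script>/<style>."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []
        self.canonical: Optional[str] = None
        self.text: list[str] = []
        self._in_code = 0  # nesting depth inside <script>/<style>

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag in ("script", "style"):
            self._in_code += 1
        elif tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            self.directives += [d.strip().lower() for d in a.get("content", "").split(",")]
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href") or None

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._in_code:
            self._in_code -= 1

    def handle_data(self, data):
        if not self._in_code:
            self.text.append(data)


@dataclass
class RawHTMLReport:
    noindex: bool
    canonical: Optional[str]
    canonical_points_elsewhere: bool
    text_words: int


def audit_raw_html(html: str, headers: Optional[dict[str, str]] = None,
                   page_url: Optional[str] = None) -> RawHTMLReport:
    scanner = _RawHTMLScanner()
    scanner.feed(html)
    scanner.close()

    directives = set(scanner.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            # Values may name a bot, e.g. "googlebot: noindex". This sketch ignores
            # which bot is named, so it errs on the side of flagging noindex.
            for token in value.split(","):
                directives.add(token.split(":", 1)[-1].strip().lower())

    canonical = scanner.canonical
    if canonical and page_url:
        canonical = urljoin(page_url, canonical)  # resolve relative canonicals
    points_elsewhere = bool(canonical and page_url
                            and canonical.rstrip("/") != page_url.rstrip("/"))

    return RawHTMLReport(
        noindex=bool(directives & {"noindex", "none"}),
        canonical=canonical,
        canonical_points_elsewhere=points_elsewhere,
        text_words=len(" ".join(scanner.text).split()),
    )
```

For example, an empty single-page-app shell with a stray `noindex` meta tag would report `noindex=True` and `text_words=0`, two separate reasons that page may never make it into the index.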

Related: Dive deeper into the difference between ‘noindex’ and ‘nofollow’ tags and their impact on SEO and indexing performance.

How to Know if Google Has Discovered but Not Indexed My Site

To see if Google has discovered but not indexed your pages (or if you’re experiencing other Google indexing issues), use the Page indexing report in Google Search Console (formerly the Coverage report).

Navigate to the ‘Pages’ tab, located under ‘Indexing.’ Then, scroll down to the ‘Why pages aren’t indexed’ section. If you see “Discovered – currently not indexed” listed here, it means your site is experiencing this specific crawling and indexing problem. If it’s not on the list, your site is free from this particular Google indexing error.

Clicking on “Discovered – currently not indexed” will show you the number of affected pages. After you’ve applied the recommended fixes for the “discovered but not indexed” issue, you can click ‘Validate Fix’ to request that Google recrawl and re-evaluate those URLs for indexing.

Prerender.io Prevents Your JS Pages From Experiencing ‘Discovered – Currently Not Indexed’ Indexing Errors

For JavaScript-heavy websites, the ‘discovered – currently not indexed’ status in Google Search Console can be a real pain point. It signifies that Google has found your pages but hasn’t yet added them to its searchable index. This can significantly hinder your website’s SEO potential, as valuable content remains hidden from searchers.

Fortunately, there are proactive SEO steps you can take to significantly reduce the chances of your JS pages falling into this indexing limbo. You can start by improving your content quality, managing your crawl budget better, and adopting Prerender.io’s JavaScript SEO solution.

By pre-rendering your JavaScript pages into HTML, you help Google read and understand them easily. Consequently, Google is more likely to index your JS pages faster, without leaving any content elements behind.

Boost your JavaScript crawling and indexing performance today with Prerender.io. Create an account and get 1,000 free renders.

FAQs – Best Practices to Fix ‘Discovered – Currently Not Indexed’

How Do You Fix ‘Discovered – Currently Not Indexed’ In Google Search Console?

There are a few things you can do to solve the ‘discovered – currently not indexed’ Google indexing issue:

- Improve internal linking structure

- Submit XML sitemaps

- Enhance content quality

- Optimize Core Web Vitals

- Implement proper JavaScript rendering with Prerender.io
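Of these, submitting an XML sitemap is the easiest to automate. Here’s a minimal sketch that builds a sitemap following the sitemaps.org protocol with Python’s standard xml.etree.ElementTree; the build_sitemap name and the sample URLs are hypothetical. ElementTree escapes characters such as `&` in URLs for you, which the protocol requires.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: Iterable[tuple[str, Optional[str]]]) -> str:
    """Return sitemap XML for (loc, lastmod) pairs; lastmod is a W3C date like "2024-05-01" or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/products/red-shoes", None),
]))
```

A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed, so larger sites split their URLs across several files listed in a sitemap index. You can submit the sitemap under Sitemaps in Search Console or reference it with a `Sitemap:` line in robots.txt.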

What Are the Reasons for ‘Discovered but Not Indexed’ Indexing Delays?

Some reasons why Google may discover your pages but not index them include low content quality, crawl budget limitations, JavaScript rendering issues, and other technical issues.

How Long Until Google Indexes Discovered Pages?

Google often indexes discovered pages within days to weeks, but there’s no guaranteed timeline. It depends on:

- Site authority

- Content quality

- Technical setup

- JavaScript rendering efficiency

A prerendering solution can significantly speed up this process by making content immediately accessible to search engines.

How Can I Get My Website Indexed By AI Search Engines?

There are a few ways to help your website show up in AI search results: write naturally, the way people actually speak and ask questions, since these systems rely on natural language processing (NLP); implement a logical site structure; and optimize your JavaScript. This helps your site appear in both traditional and AI-powered search results.

Will ChatGPT And AI Tools See My JavaScript Website?

In most cases, no. ChatGPT and other AI tools like Claude or Perplexity typically can’t see JavaScript-rendered content without help. While traditional search engines like Google can eventually process JavaScript, AI crawlers often miss this content completely. Prerender.io helps by converting your JavaScript content into static HTML that all crawlers, including AI crawlers, can easily read and understand.