Seeing your pages get de-indexed from SERPs sounds like a story out of a horror film.

Not only does it affect organic visibility, but it directly impacts your bottom line. It can cost you leads and sales, and if not fixed in time, you can get replaced in the SERPs.

If you leave this issue unattended for too long, your competitors will take advantage and make it harder for your pages to return to their usual positions. This will potentially hurt your SEO performance long term.

To help you recover as fast as possible, let’s explore the most common reasons websites get de-indexed and how you can recover from it.

Why Did Google De-index My Website?

|

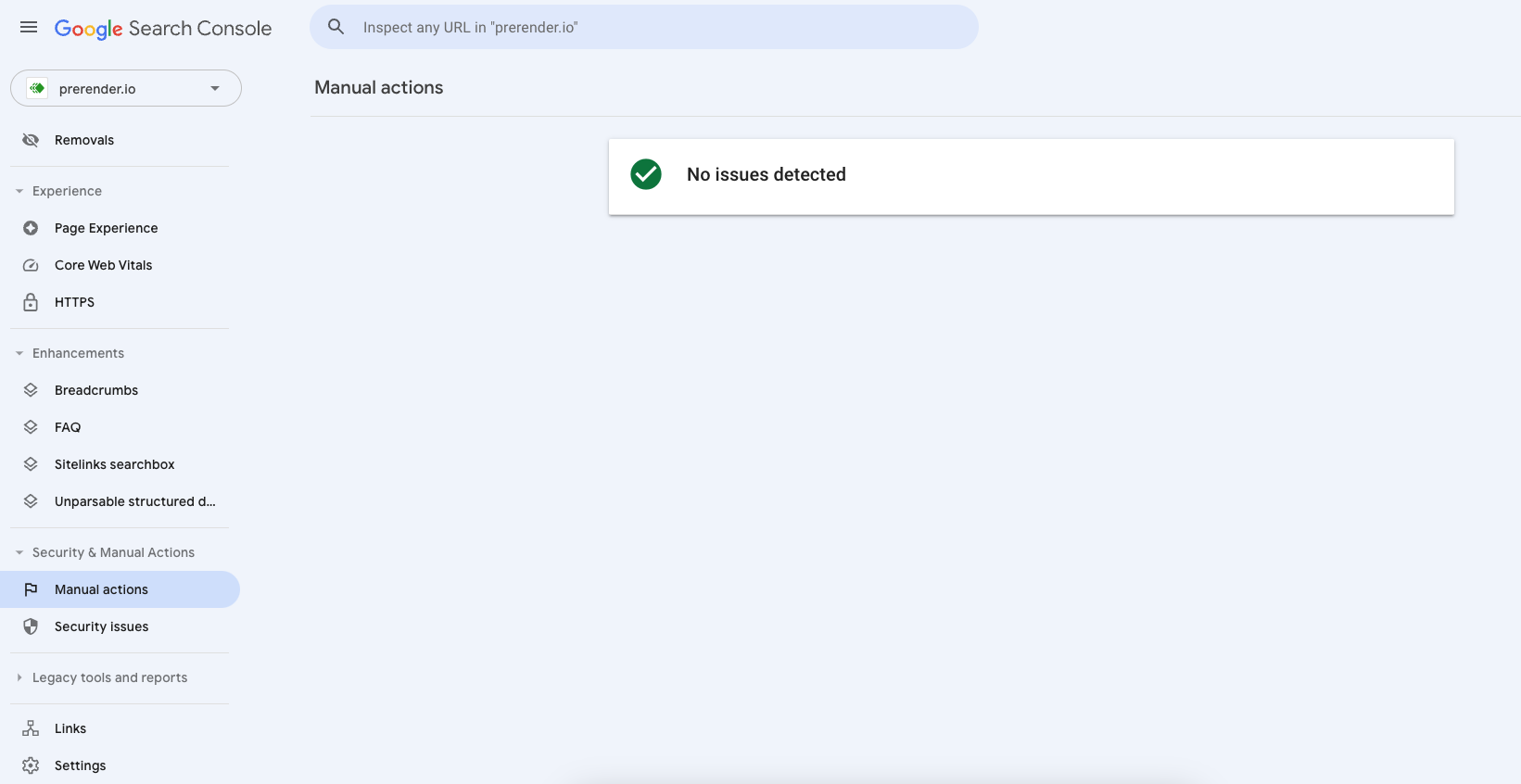

Google can decide to de-index a website when they consider the site is breaking their guidelines or they are obligated by law (like detecting fraudulent activities). You’ll receive a notification inside Google Search Console under the “Security and Manual Actions” tab for any manual actions taken. It will give you more details on why this is happening. Once you have identified a manual action, the following steps are to correct the issue and submit a reconsideration request. Here’s a list of Google’s manual actions. However, Google can also de-index your pages for other reasons, too. |

Common Reasons Why Your Pages Might Be De-Indexed

Content Quality

If your content is thin, spammy, plagiarized, ridden with duplicates, and generally poor quality, you’ll be at higher risk of de-indexing.

“If the number of [de-indexed] URLs is very high, that could hint at a general quality issues,” Gary Illyes from Google recently said.

Ultimately, Google frequently reiterates that content should be “helpful, reliable, and people-first.” If you’re doing the opposite, over time, there’s a good chance you’ll be flagged.

Black Hat SEO Techniques

Avoid following any sort of black hat SEO tactics. This is a sure-fire way for Google to flag your site and potentially de-index your content.

Risky behavior might include:

- Link exchanges

- Sketchy guest posting

- Cloaking

- Link farms

- Too many inbound links in a short timeframe (Google might think you bought them)

- Keyword stuffing

Technical Issues

Beyond content and site maintenance, your content may also be de-indexed because of various technical reasons.

Some of these technical issues include:

- The accidental or improper use of noindex tags or directives

- Robots.txt file blocking search engines

- Server errors or prolonged downtime

- Hacked content on the site

- General JavaScript rendering issues

User Experience (UX) Problems

User experience is highly important to Google. While less common than other issues, poor UX can also get your pages flagged for de-indexing.

Some UX problems to be aware of:

- Intrusive interstitials or pop-ups, such as obscuring the entire page with interstitials or failing to include the legally mandated interstitials in your region

- Deceptive page layouts

- Overly slow loading times or poor mobile experience

Poor Site Maintenance

Haven’t been paying attention to your site? De-indexing might happen if your website hasn’t been well-maintained or updated in a long (read: long) time.

Make sure to frequently check in on your website, update it, and if necessary, delete certain content pages if they’re no longer relevant.

Geolocation Issues

Some content is restricted in certain regions due to legal requirements. Make sure you follow the mandatory legal requirements in your location, especially in stricter regions like the European Union.

Now that we know why Google can de-index a website, here are 6 recovery steps to prepare your website to get indexed again.

6 Steps to Recover After Google De-Indexed Your Website

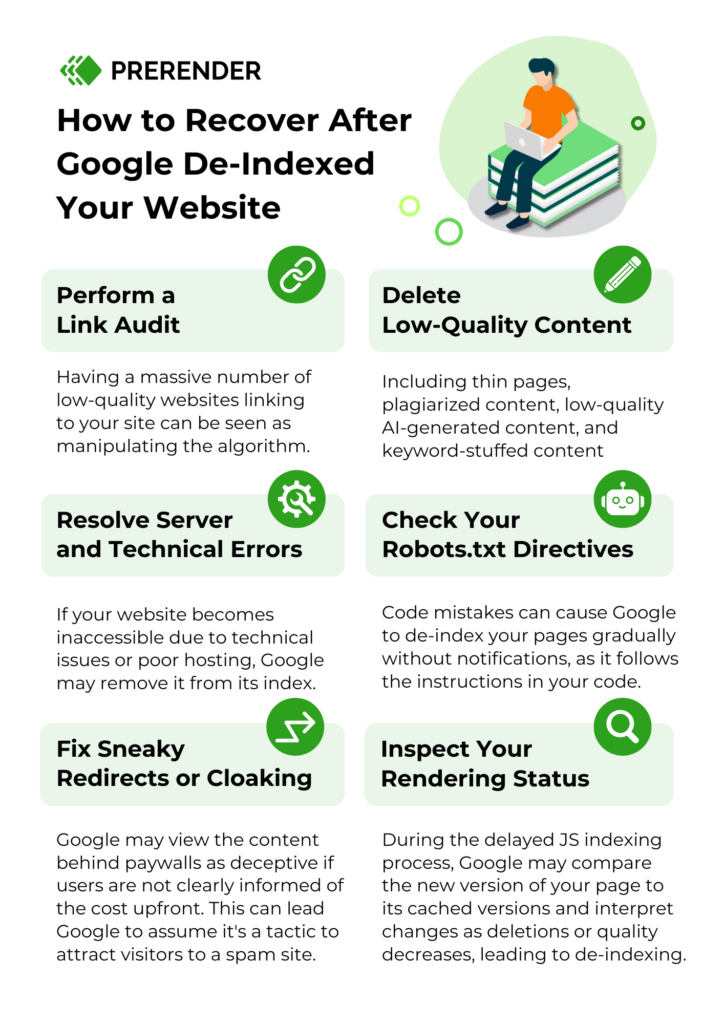

1. Perform a Link Audit

In Google’s spam policies, link spam is defined as “any links that are intended to manipulate rankings in Google Search results (…) This includes any behavior that manipulates links to your site or outgoing links from your site.” Because links are such an essential part of Google’s ranking algorithm, it’s easy to understand why they want to be strict about it. Unnatural links are a common reason for websites to get de-indexed, so ensuring you’re not infringing any linking policy is a crucial step toward recovery.

In many cases, link audits are performed to eliminate low-quality links. You can’t control what websites link to your resources, but manual actions are taken when there’s a pattern of misleading links.

For example, when a massive number of low-quality websites start linking to your site in a short period of time, it can be seen as you trying to manipulate the algorithm, even if you didn’t have anything to do with it. This could be a black hat attack from a competitor. Focus your link audit on these steps:

- Identify all low-quality, irrelevant, and spammy links

- Create a disavow list with these links

- Submit the disavow file through https://search.google.com/search-console/disavow-links

- File a reconsideration request
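The disavow list itself is just a plain text file with one entry per line: either a full URL, or a `domain:` entry that covers every link from that domain, with `#` marking comments. As a rough sketch of how you might assemble it from your audit results, here is a small helper. The function name `build_disavow_file` and the example domains are made up for illustration.

```python
from urllib.parse import urlparse


def build_disavow_file(bad_links, whole_domains=()):
    """Return disavow-file text: one URL or `domain:` entry per line."""
    blocked = {d.lower() for d in whole_domains}
    lines = ["# Disavow list generated from link audit"]

    # A "domain:" entry disavows every link coming from that domain.
    for domain in sorted(blocked):
        lines.append(f"domain:{domain}")

    # Individual URLs are listed only if their domain is not already covered.
    for url in sorted(set(bad_links)):
        host = (urlparse(url).hostname or "").lower()
        if host in blocked:
            continue
        lines.append(url)

    return "\n".join(lines) + "\n"


# Hypothetical audit results, for illustration only.
disavow_text = build_disavow_file(
    ["http://spam.example/page1.html", "http://links.example/a"],
    whole_domains=["links.example"],
)
```

Save the returned text as a `.txt` file and upload it through the disavow tool linked above. Review the list by hand first, since disavowing good links can hurt more than it helps.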

To avoid this issue in the future, check your backlinks at least once a month. Disavow anything that’s not useful.

For a more constant overview, you can use a tool like SEMrush, which assigns a toxicity score to every link, making it easy to spot potential threats. A healthy link profile will keep you out of trouble.

Is Your Site Being Used as Part of a PBN?

Besides backlinks, the links you create on your site can also cause de-indexation. If Google identifies your site as part of a private blog network (PBN) sending unnatural links to other sites, your website will get penalized, and returning its content to Google’s index will be very hard. If you’re getting involved in link exchanges or selling link spots on your content, you could be linking to spammy sites or getting involved with other PBNs, which will drag your site out of Google’s index with them.

Solution: audit your outbound links and ensure you only link to trusted sources. Replace or delete all spammy outbound links you find, file a reconsideration request, and submit a new sitemap to Search Console so Google can eventually start crawling your site and see you’ve taken action.

2. Eliminate Spammy, Duplicate Content From Your Site

Showing trustworthy, helpful content is at the core of Google’s priorities, and all ranking factors are designed to put the best result possible at the top of the SERPs. But this also means your pages must meet Google’s quality standards to get indexed. (After all, Google has to allocate resources to crawl, render, and index your pages, as well as keep a cached version stored and pick up on any updates.)

Related: Learn the 6 most important Google ranking factors for 2024.

With millions of new pages getting published daily, they can’t just waste resources on low-value pages or, worse, indexing dangerous/misleading content. When your site is filled with spammy content (like plagiarized pages), low-quality AI-generated content, or stuffed with keywords, Google will take action.

And by action, we mean take your site out of the index to avoid spending more resources crawling it.

The best course of action is to delete all the URLs that contain this type of content and follow SEO best practices to optimize and build quality pages. (Of course, if most of your site was created this way, you might want to consider rewriting the content before submitting it for reconsideration.)

Fixing Duplicate Content Issues

Sometimes, Google can flag your pages as duplicate content even if you’ve done everything manually and tried to write the best pages possible. This usually happens because of technical issues or conflicting SEO strategies. If that’s your case, we recommend you follow our duplicate content guide to identify the origin of the issue and fix it from the root. It will also teach you how to prevent this problem in the future. Once the issue is resolved, proceed to file the reconsideration request.

3. Fix Server Errors

Things are bound to break, and your servers are not the exception. If your site goes down without you realizing it, Google could take matters into its own hands and de-index your site.

Check your site’s health and contact your hosting provider as soon as possible to bring your site back online. Once your pages return a 200 (success) status code, request reconsideration.
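If you want a quick way to spot-check status codes yourself, a few lines of Python using only the standard library will do. This is a minimal sketch, not a monitoring tool: the helper names `classify_status` and `fetch_status` and the labels they return are our own, and some servers answer `HEAD` requests differently from `GET`.

```python
import urllib.error
import urllib.request


def classify_status(code):
    """Map an HTTP status code to a rough indexing-risk label."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if code in (404, 410):
        return "gone"
    if 400 <= code < 500:
        return "client_error"
    if code >= 500:
        return "server_error"
    return "other"


def fetch_status(url, timeout=10):
    """Return the final HTTP status for url, or None if the host is unreachable.

    urllib follows redirects automatically, so this is the status of the
    final destination rather than of the first hop.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses are raised as exceptions
    except OSError:
        return None  # DNS failure, refused connection, timeout, expired domain
```

Calling `classify_status(fetch_status(url))` on your most important URLs (when `fetch_status` does not return `None`) gives a fast first read before you dig into server logs.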

Another less common technical difficulty with your hosting is when a general manual action is done to the service. As Google states: “if a significant fraction of the pages on a given web hosting service are spammy, we may take manual action on the whole service.”

If so, you must migrate your site to a different hosting service. Still, the best way to prevent this is to use a reputable hosting provider and do your own research before committing to less-known services.

(Note: It can also be a problem with your domain, like it expiring without you knowing. In this case, the URL is no longer available for Google to crawl, and thus your site gets de-indexed.)

4. Check Your Robots Directives

Code mistakes happen constantly, and they’re even more common during active development, when your website is undergoing constant changes and updates.

A simple code mistake that can make your site drop out of Google’s index is changing a robots directive to noindex, as this directive directly tells Google you don’t want specific pages, directories, or your entire site to be indexed.

When the tag is in place, URLs will be dropped from Google’s index gradually, but there won’t be any notifications or manual action warnings because it is following your instructions.

To check if that’s your case, look for noindex in the robots meta tag (or the X-Robots-Tag HTTP header) on a page level, and check the robots.txt file for Disallow rules, which can block crawling of entire directories or the site as a whole.

Once identified, remove the blocking rule from the robots.txt file or set the rogue robots tag to index, follow.
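Both checks can be scripted. The sketch below uses Python’s standard library to test a robots.txt body against a URL for the Googlebot user agent and to look for a noindex robots meta tag in a page’s HTML. The helper names are our own, and Python’s `robotparser` does not resolve conflicting Allow/Disallow rules exactly the way Google does, so treat the result as a first pass rather than a verdict.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def googlebot_can_crawl(robots_txt, url):
    """Check a robots.txt body (as text) against a URL for Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


class _RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html):
    """Return True if the page's robots meta tags ask not to be indexed."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in finder.directives or "none" in finder.directives
```

For example, `googlebot_can_crawl("User-agent: *\nDisallow: /\n", "https://example.com/")` returns `False`, which is the classic leftover from a staging environment.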

If you don’t have experience configuring your site’s robots directive, read our guide on robots.txt file optimization. It will tell you how to configure your file to avoid index issues and ensure your pages are discovered by Google.

5. Fix Any Sneaky Redirects or Cloaking

If the content your users receive is different than the content Google receives when getting to your page, you’re using cloaking, and it is a direct violation of Google’s guidelines.

What Google is trying to prevent is ranking a page for a specific keyword and then having the page display a completely different thing for the end user. This is often used to deceive visitors, so it wouldn’t reflect well on your site, even if it were by mistake.

It’s important to mention that cloaking penalties can also occur because of poor implementations or unknowingly making a mistake.

For example, you could have content behind paywalls, preventing Google from accessing your main content. Because users won’t expect this non-content on your page, Google will assume you’re using deceptive tactics to attract visitors to a spam site.

Note: There are clear instructions to add structured data for paywalls and subscriptions and avoid penalties.
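As an illustration of what that structured data looks like, here is a small sketch that builds the JSON-LD for a paywalled article. The `isAccessibleForFree`, `hasPart`, and `cssSelector` properties come from schema.org. The helper name `paywall_json_ld`, the `NewsArticle` type, and the `.paywall` class are assumptions for the example, so swap in whatever matches your own markup.

```python
import json


def paywall_json_ld(headline, css_selector):
    """Build a JSON-LD script tag that marks part of a page as paywalled."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "isAccessibleForFree": False,
        "hasPart": {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,
            # CSS selector of the element that wraps the paywalled section.
            "cssSelector": css_selector,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">' + body + "</script>"


# Hypothetical headline and class name, for illustration only.
snippet = paywall_json_ld("Example headline", ".paywall")
```

The selector must point at the element that actually contains the gated content, otherwise the markup will not describe what visitors see.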

Another scenario is when an interstitial popup redirects users to another page. Again, this can be seen as a spam tactic.

However, a widespread occurrence is hackers using your site for cloaking. After getting access to your site, hackers would cloak specific pages—usually pages ranking somewhat high in search results—to funnel visitors to scams.

Because the hack affects only certain pages, it’s hard to notice until it is too late. So setting up security monitoring systems is advised.

Take care of every vulnerability, remove malware, fix any redirection that could be interpreted as cloaking, and then submit your reconsideration request to Google.
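One rough way to look for accidental or hacked cloaking is to request the same URL with a Googlebot user agent and with a regular browser user agent, then compare what comes back. The sketch below does that with the standard library. The helper names and the 0.8 threshold are arbitrary choices for illustration, and because sophisticated cloaking checks Googlebot’s IP address rather than its user agent, a clean result here does not prove the page is clean.

```python
import difflib
import urllib.request

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def fetch_as(url, user_agent, timeout=10):
    """Download a page while sending the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def similarity(html_a, html_b):
    """Return a 0..1 similarity ratio based on whitespace-separated tokens."""
    return difflib.SequenceMatcher(None, html_a.split(), html_b.split()).ratio()


def looks_cloaked(html_for_bot, html_for_user, threshold=0.8):
    """Flag a page when the two responses differ more than the threshold allows."""
    return similarity(html_for_bot, html_for_user) < threshold
```

In practice you would call `looks_cloaked(fetch_as(url, GOOGLEBOT_UA), fetch_as(url, BROWSER_UA))` on your top-ranking pages, since those are the ones attackers tend to target. Pages with rotating ads or personalized blocks may need a lower threshold.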

6. Ask Yourself If Google Can Render Your Content Properly

As your site grows, it needs to integrate new systems and functionalities, becoming more dynamic to accommodate the demand from your customers.

However, handling JavaScript is a difficult task for search engines, as they have to allocate more resources to download the necessary files, execute the code and finally render the page before they can access the content.

This process can take anywhere from a couple of weeks to several months—sometimes up to 18 months—until your pages are fully rendered.

During this time, Google will compare your new pages to their cache version, which will indicate your content has been deleted or at least that the site’s quality has dropped significantly, causing your site to be de-indexed by Googlebot as it is no longer relevant.

The most common reason for this issue is not setting up any system to handle the rendering process. If you’re using a framework like React, Vue, or Angular, these technologies default to client-side rendering, which can leave your content invisible to search engines until the JavaScript has been executed. Consider a solution like Prerender to improve this; read on for details.
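A simple way to see whether a page depends on client-side rendering is to count how much readable text exists in the raw HTML before any JavaScript runs. The sketch below does this with Python’s built-in HTML parser. The helper names and the 20-word cutoff are our own assumptions, so tune the threshold to your pages.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collects words from the HTML, ignoring script, style, and template content."""

    SKIP = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words.extend(data.split())


def raw_html_word_count(html):
    """Count the words present in the HTML without executing any JavaScript."""
    parser = _VisibleText()
    parser.feed(html)
    return len(parser.words)


def needs_rendering(html, min_words=20):
    """Return True when the raw HTML is nearly empty, as with a typical app shell."""
    return raw_html_word_count(html) < min_words
```

An app shell such as `<div id="root"></div>` followed by a script bundle will score close to zero, which is a strong hint that crawlers have to render the page before they can read it.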

Prevent De-indexing and Maintain Strong SEO With Prerender

Although you can recover from penalties and de-indexation issues, you will definitely lose rankings and revenue, so the best practice is to try and prevent the problem from happening.

Manual actions are rarely a sudden occurrence. In most cases, there will be a gradual drop in your indexation rate. Monitoring your index status every month will help you spot trends before they become a widespread issue.
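If you log your indexed page count each month (for example, by noting the figure from Search Console’s page indexing report), a short script can flag worrying drops for you. This is a minimal sketch: the function name, the 10% threshold, and the sample numbers are all made up for illustration.

```python
def indexation_drops(monthly_counts, max_drop=0.10):
    """Return (month, previous, current) for each month-over-month drop above max_drop.

    monthly_counts is a chronological list of (month_label, indexed_pages) pairs.
    """
    alerts = []
    for (_, previous), (month, current) in zip(monthly_counts, monthly_counts[1:]):
        if previous > 0 and (previous - current) / previous > max_drop:
            alerts.append((month, previous, current))
    return alerts


# Hypothetical figures, for illustration only.
history = [("2024-01", 1000), ("2024-02", 980), ("2024-03", 800)]
alerts = indexation_drops(history)
```

With the sample data above, only March is flagged, because the fall from 980 to 800 indexed pages exceeds the 10% threshold while the small dip in February does not.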

Notice that you’re struggling with crawling, indexing, and JavaScript rendering problems? Prerender is a technical SEO solution that you can install to prevent de-indexing from happening. The console includes a caching process that will identify potential technical SEO issues and list them in your dashboard in order of priority.

Watch the video below for a short explainer on how it works.

Additional Indexing Tips

To help you in your ongoing indexing optimization journey, here are additional recommendations to go along with the list above:

- Follow indexing best practices. Here’s a 10-step checklist to help Google index your website.

- Read Google’s guidelines.

- Keep an eye on Google’s algorithm updates. Changes in their guidelines can impact your site if it’s now in violation.

- Install Prerender.io. Trusted by 65,000+ businesses worldwide, this pre-built rendering solution improves indexing in a fraction of the time. Get 1000 free renders per month.

Ensuring fast crawling and flawless indexation is necessary to strengthen your online presence and avoid losing all your hard work because of simple mistakes. We hope you find this article helpful and you can return to the SERPs where you belong!

FAQs

How Long Does It Typically Take To Recover From Being De-Indexed?

Recovery time can vary depending on the reason for de-indexing and how quickly you address the issues. Generally, it can range from a few days to several weeks or even months. For manual actions, recovery might take 2-4 weeks after submitting a reconsideration request. For technical issues, recovery can be faster once the problems are fixed and Google re-crawls your site.

Can I Still Get Organic Traffic While My Webpages Are De-Indexed?

While your site is de-indexed, you won’t receive organic traffic from Google search results. But you can still get traffic from other sources, including direct visits, social media, and other search engines that haven’t de-indexed your site.

How Do I Prevent My Content From Being De-indexed In The Future?

There’s no guarantee against future de-indexing, but you can significantly reduce the risk by following best practices. These include: regularly monitoring your site’s health, conducting technical SEO audits, ensuring your content can be easily read by search engines, keeping your content high quality and up-to-date, avoiding black hat SEO tactics, and installing a solution like Prerender.io.