Search engine optimization (SEO) is an investment that, when done right, can create a sustainable source of income for your business. However, the road to SEO success is full of challenges you’ll need to overcome to get the most out of your search campaigns. One significant challenge that can trip up even the most seasoned SEO professional is JavaScript.

JavaScript (JS) adds a layer of interactivity and dynamism to websites, enhancing user experience. But for search engines like Google, it can create a roadblock to understanding and indexing your content, which can lead to critical SEO issues for JavaScript-heavy websites.

However, Google understands the importance of JavaScript in modern web development. They’ve developed methods to process and index JS-rich pages, though it requires some additional considerations on your part.

This article will delve into Google’s approach to indexing JavaScript content, common issues you might encounter, and how to optimize your website for successful SEO despite its reliance on JavaScript.

How Does Google Index JavaScript Pages?

In general, Google’s indexing process looks something like this:

1) Google’s crawler (or bot) sends a request to the server

2) The bot will then generate a paint from the returned HTML and add all the links it finds to the crawl queue

3) This first paint of the page gets indexed

4) The bot will also send a request to download CSS, JavaScript and any other files needed to render the page correctly

It seems simple enough, but here’s where things get more complicated.

Crawling an HTML file is relatively easy, as all the content is already structured and doesn’t require additional computing power. Dynamic pages, however, need a more complex rendering process:

- After all the files are downloaded and cached, the page gets sent to the renderer

- The renderer will execute all JavaScript, grab new links for crawling and compare the rendered version against the first paint version, and index the additional content
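To make the two waves concrete, here is a toy Python model of the process described above. It is a simplification under our own assumptions, not Google’s implementation: each hypothetical `Page` separates what is in the raw HTML from what only appears after JavaScript runs, the first wave indexes the raw HTML and queues its links, and the renderer later adds the JavaScript-only content and links.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Page:
    """Toy page: content in the raw HTML vs. content that appears only after JS runs."""
    html_text: str
    html_links: List[str] = field(default_factory=list)
    js_text: str = ""
    js_links: List[str] = field(default_factory=list)


def crawl_and_render(site: Dict[str, Page], start: str) -> Dict[str, str]:
    """Toy two-wave pipeline: index the raw HTML first, then render and index JS content."""
    crawl_queue = deque([start])
    render_queue: deque = deque()
    seen = {start}
    index: Dict[str, str] = {}

    def enqueue(links: List[str]) -> None:
        # Only follow links to pages that exist in this toy site, once each.
        for link in links:
            if link in site and link not in seen:
                seen.add(link)
                crawl_queue.append(link)

    while crawl_queue or render_queue:
        # Wave 1: fetch raw HTML, index the first paint, queue the links it contains.
        while crawl_queue:
            url = crawl_queue.popleft()
            index[url] = site[url].html_text
            enqueue(site[url].html_links)
            render_queue.append(url)  # the page now waits for the renderer
        # Wave 2: render one page, index the extra content, queue JS-only links.
        url = render_queue.popleft()
        page = site[url]
        index[url] = f"{page.html_text} {page.js_text}".strip()
        enqueue(page.js_links)
    return index
```

In this model, a link that exists only in JavaScript-generated markup isn’t discovered until its parent page reaches the renderer, which is one reason JS-heavy pages take longer to get fully indexed.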

Note: For a more detailed description of the process, visit How Prerender Crawls and Renders JavaScript Websites.

For Google to “see” the entirety of your content, it must rely on the renderer component. This renderer uses an up-to-date Google Chrome instance controlled via Puppeteer, allowing Google to render your pages as your browser does. And that’s where the problems start.

This rendering process is time- and resource-intensive. Google has to repeat it for millions of pages every day, which leads to a lot of delays and, in some cases, complete failure to index your pages. It’s also important to note that Google processes every request one by one, so if your JavaScript doesn’t load, it’ll wait until it does before fetching the next file.

This creates a bottleneck that eats into your crawl budget and can leave a half-baked version of your page in Google’s index, if it gets indexed at all.

The 3 Main JavaScript Indexing Issues

There are several challenges and problems with JavaScript SEO to consider when working with dynamic sites. Each affects your website differently, and all of them can hurt your SEO. But to keep this article focused, let’s dive into the first thing your SEO campaign needs to nail: getting your pages indexed by Google.

Regarding indexation, three primary problems can emerge:

1. JS Files Are Blocked by robots.txt

One of the most common, and easiest to fix, JavaScript issues is resources getting blocked by the robots.txt file.

Many developers and misinformed SEOs want to block crawlers from accessing anything but content. If you think about it, it makes sense at some level. After all, if all your content is in the HTML file, why would you waste crawl budget on fetching resources that add no SEO value?

However, as we know by now, Google uses these resources to generate a paint of your page. That includes your CSS to understand the layout of your page and the JS file to render dynamic content.

With these files (and other resources like images) blocked, search engines can’t generate an accurate representation of your page, resulting in indexing issues like missing content, low rankings, or errors.
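A quick way to check whether your robots.txt blocks the JS and CSS files your pages need is Python’s standard-library `urllib.robotparser`. The `/assets/` paths and rules below are hypothetical. One caveat: `urllib.robotparser` applies rules in file order and doesn’t understand Google’s `*` and `$` wildcards, while Google picks the most specific matching rule, so the example lists its `Allow` lines first so that both interpretations agree.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks everything under /assets/, including JS and CSS.
ROBOTS_BLOCKING = """\
User-agent: *
Disallow: /assets/
"""

# Same intent, but explicitly allows the rendering resources. Allow lines come first
# because urllib.robotparser uses the first matching rule (Google uses the most specific).
ROBOTS_FIXED = """\
User-agent: *
Allow: /assets/js/
Allow: /assets/css/
Disallow: /assets/
"""

# Hypothetical resources a page needs in order to render.
RESOURCES = [
    "https://www.example.com/assets/js/app.js",
    "https://www.example.com/assets/css/main.css",
]


def blocked_resources(robots_txt: str, urls: List[str], user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_resources(ROBOTS_BLOCKING, RESOURCES))  # both URLs are blocked
print(blocked_resources(ROBOTS_FIXED, RESOURCES))     # []
```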

Resource: Robot.txt SEO: Best Practices, Common Problems & Solutions

Solution

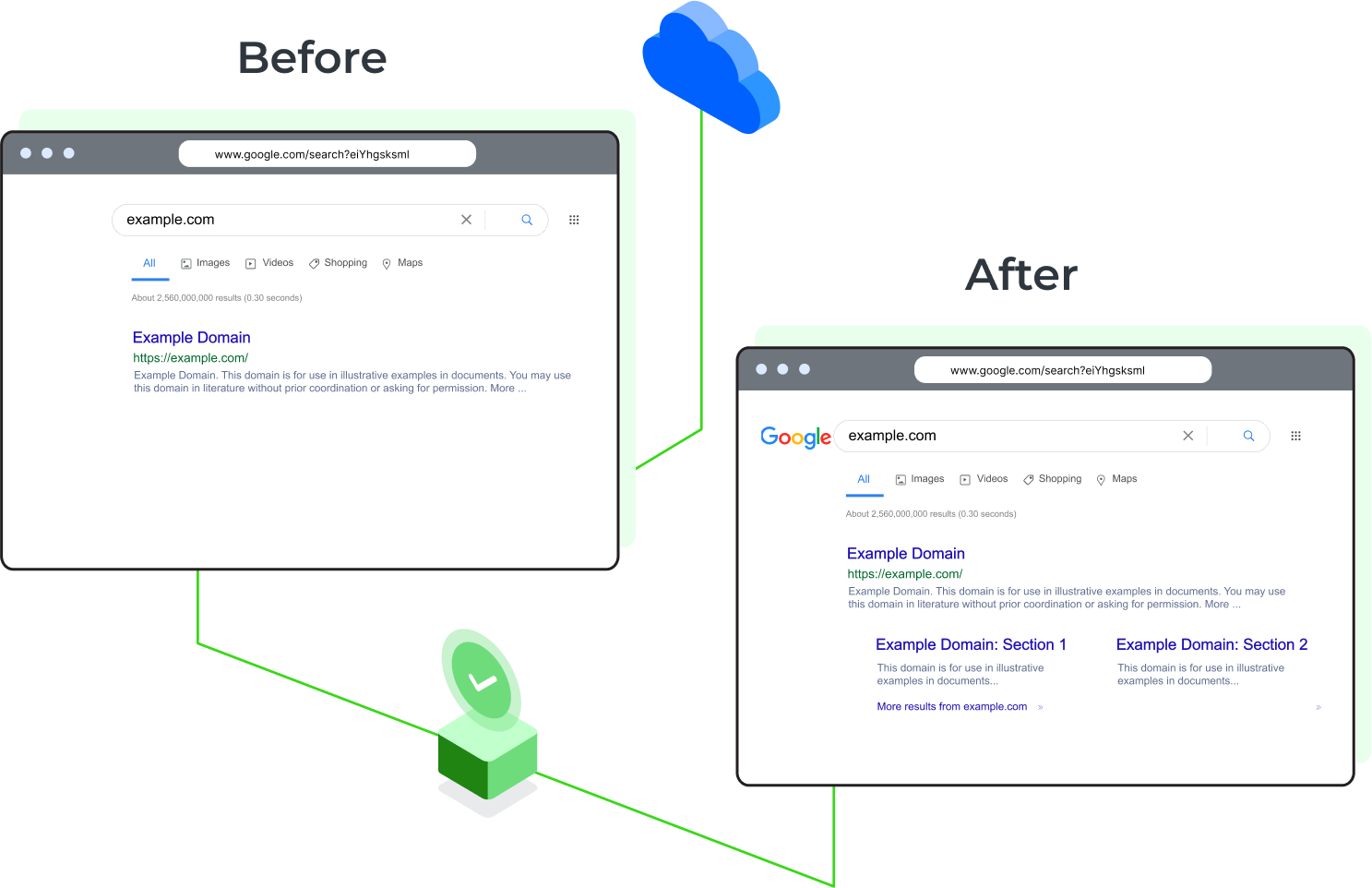

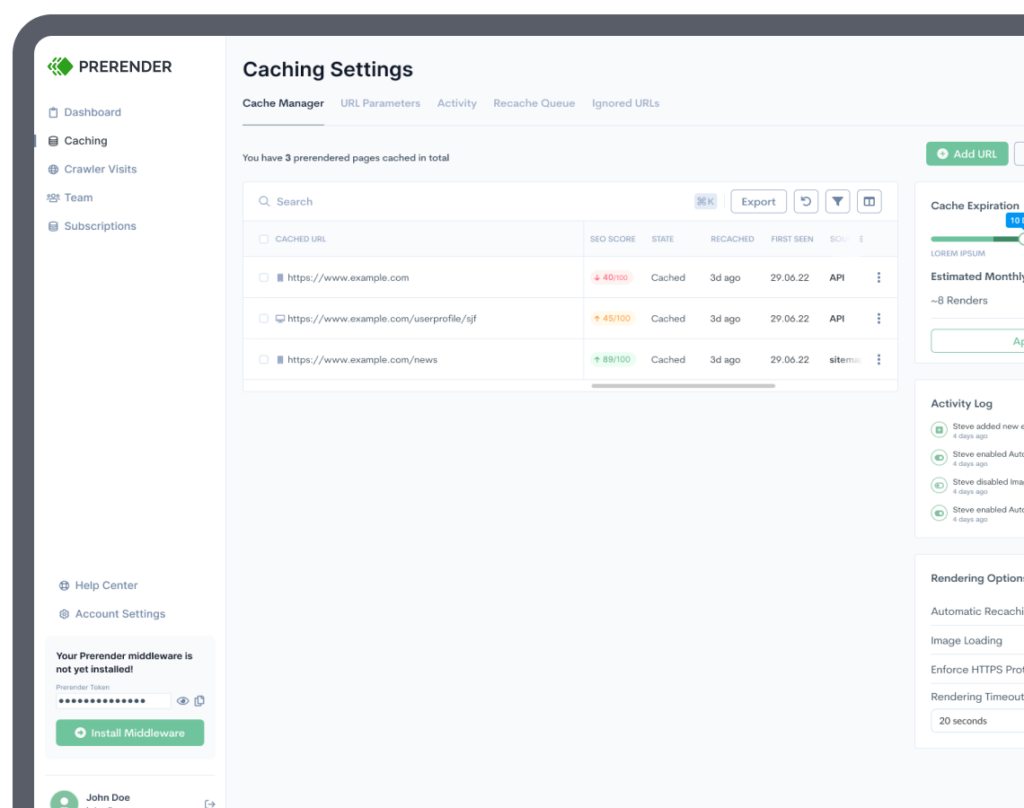

Prerender cuts these issues off at the root by generating and storing a static version of every page on your website and serving it to crawlers. All you need to do is install our middleware on your server.

2. Google is Using Old Files

When Googlebot downloads the files it needs to render your page, it caches them to lower the burden on its systems when it crawls the page again. Because of this caching, if you update the content of your CSS, JS, or other files without changing their file names, Google could ignore the changes and use the cached files instead.

If the changes are significant enough (like changing element names or behaviours), the page won’t render as intended, and it can get deindexed for thin or low-quality content or because it appears to be broken.

Even if your pages stay indexed, your rankings can suffer. Google can perceive poorly rendered elements as a signal of bad user experience and rank you below competitors whose pages render fully.
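Google’s own JavaScript SEO documentation suggests content fingerprinting for this problem: put a hash of the file’s content in its name (for example `main.2bb85551.js`), so any change produces a new URL that can’t be served from a stale cache. This is a complementary technique, not the Prerender-based solution below. Here is a minimal Python sketch; the paths and the 8-character hash length are arbitrary assumptions, and in practice most bundlers can do this for you.

```python
import hashlib
from pathlib import PurePosixPath
from typing import Dict


def fingerprinted_name(path: str, content: bytes, length: int = 8) -> str:
    """Return e.g. 'static/app.<hash>.js'; the name changes whenever the content changes."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


def rewrite_references(html: str, renames: Dict[str, str]) -> str:
    """Point quoted, root-relative references (e.g. src="/static/app.js") at the new names."""
    for old, new in renames.items():
        html = html.replace(f'"/{old}"', f'"/{new}"')
    return html
```

Because the URL itself changes with every content change, the renderer has to fetch the new file instead of reusing the cached copy.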

Solution

Use Prerender.io. When a crawler requests your URL, the middleware will identify the user-agent as a bot and send a request to Prerender.io, which will return the fully rendered version of your page to the bot through your server.

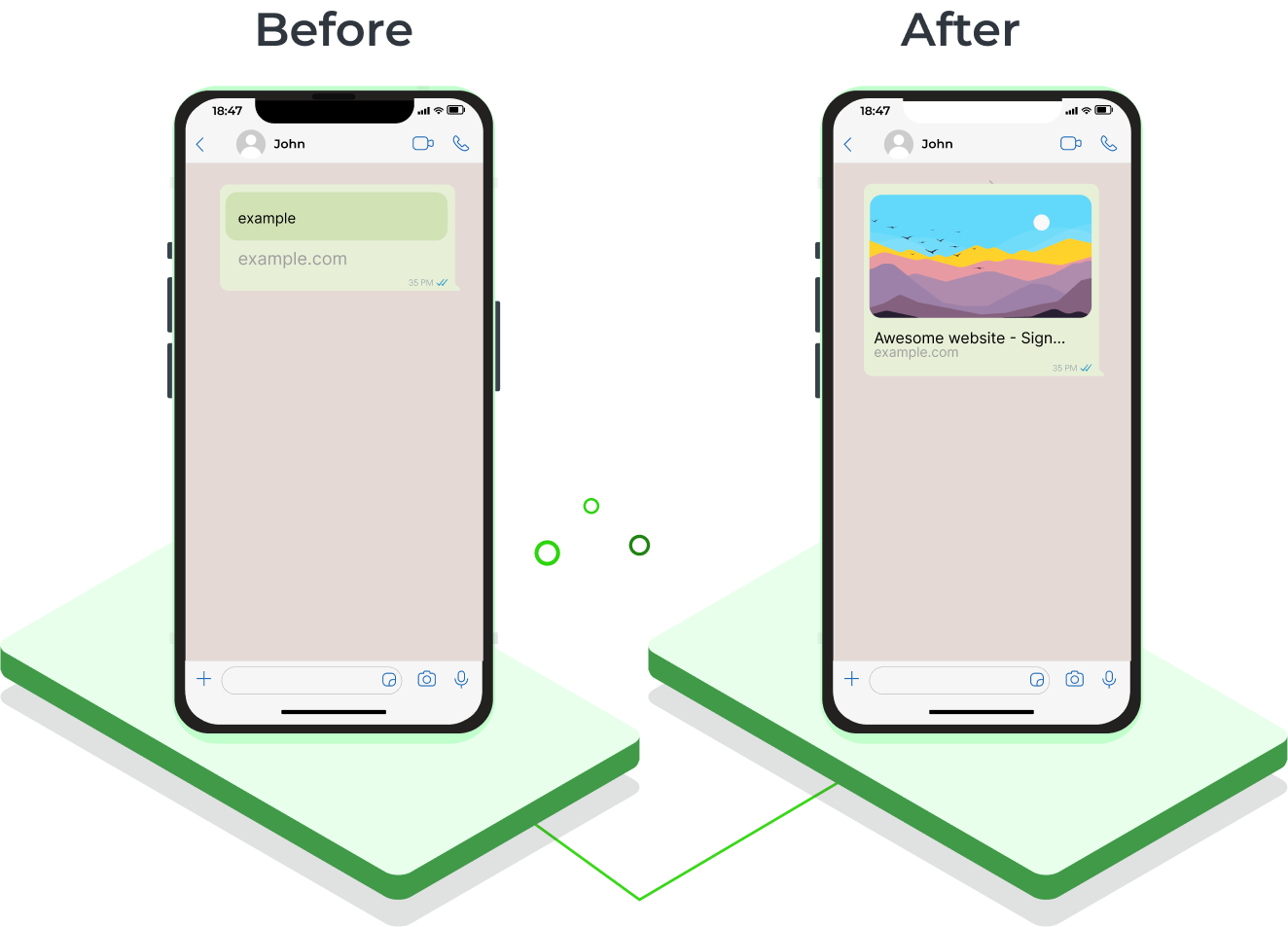

Because all your content is already rendered and Google receives a static HTML page, the page doesn’t need to go through Google’s renderer. This eliminates the problems associated with the dynamic nature of your site, such as broken link previews.
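The routing idea behind the middleware can be sketched as a small Python WSGI middleware. This is a hypothetical illustration, not Prerender.io’s actual middleware or API: the bot list is deliberately short, the static-file extensions are our own choice, and `get_snapshot` stands in for the request your server would make to the prerendering service.

```python
from typing import Callable, Iterable, List, Optional, Tuple

# Hypothetical, deliberately short list of crawler user-agent substrings.
BOT_MARKERS = ("googlebot", "bingbot", "yandex", "duckduckbot",
               "twitterbot", "facebookexternalhit", "linkedinbot")
# Requests for these files always go to the normal app, even from bots.
STATIC_EXTENSIONS = (".js", ".css", ".png", ".jpg", ".svg", ".ico", ".woff2")

StartResponse = Callable[[str, List[Tuple[str, str]]], None]


def is_crawler(user_agent: str) -> bool:
    ua = user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)


class PrerenderMiddleware:
    """WSGI middleware: serve a stored static snapshot to crawlers, the live app to everyone else."""

    def __init__(self, app, get_snapshot: Callable[[str], Optional[str]]):
        self.app = app
        # Hypothetical hook standing in for the call to a prerendering service or cache.
        self.get_snapshot = get_snapshot

    def __call__(self, environ: dict, start_response: StartResponse) -> Iterable[bytes]:
        path = environ.get("PATH_INFO", "/")
        wants_snapshot = (
            environ.get("REQUEST_METHOD", "GET") == "GET"
            and is_crawler(environ.get("HTTP_USER_AGENT", ""))
            and not path.lower().endswith(STATIC_EXTENSIONS)
        )
        if wants_snapshot:
            html = self.get_snapshot(path)
            if html is not None:
                body = html.encode("utf-8")
                start_response("200 OK", [
                    ("Content-Type", "text/html; charset=utf-8"),
                    ("Content-Length", str(len(body))),
                ])
                return [body]
        # Humans, non-GET requests, static files, and pages without a snapshot fall through.
        return self.app(environ, start_response)
```

Static files and pages without a stored snapshot fall through to the normal app, so a missing snapshot degrades to the regular client-side page rather than an error.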

3. Google Can’t Render or Partially Renders Your Pages

Even if Google downloads all your files and has access to every resource it needs, there are still many instances where the renderer won’t be able to get all the content on your page.

There are many reasons for this, but a key one is that Google’s renderer works under time constraints. When Google is rendering your pages, it will time out once the allocated crawl budget is used up, interrupting the rendering process.

In a new crawl session, it will go through the same process, and if it still can’t render the entire page, it will index the page as it is, even if it isn’t the complete paint. After all, how can Google know what’s missing?

The problem with these partially rendered pages is that Google can miss crucial information, such as extra data injected through AJAX. More about SEO and AJAX here.
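A toy model helps show why timeouts produce partial pages. Each hypothetical `Fragment` becomes visible at some point after the page starts loading (for example, once an AJAX call returns), and a renderer that stops at `budget_ms` only captures what was ready by then. The 5,000 ms budget used here is arbitrary and isn’t a figure Google publishes.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Fragment:
    name: str
    ready_at_ms: int  # hypothetical: when JS/AJAX has finished injecting this content


def render_snapshot(fragments: List[Fragment], budget_ms: int) -> Tuple[List[str], List[str]]:
    """Return (captured, missed) for a renderer that stops after budget_ms."""
    ordered = sorted(fragments, key=lambda f: f.ready_at_ms)
    captured = [f.name for f in ordered if f.ready_at_ms <= budget_ms]
    missed = [f.name for f in ordered if f.ready_at_ms > budget_ms]
    return captured, missed


page = [
    Fragment("header", 0),
    Fragment("product description", 1200),
    Fragment("reviews (loaded via AJAX)", 6500),
]
captured, missed = render_snapshot(page, budget_ms=5000)  # arbitrary budget
print(captured)  # ['header', 'product description']
print(missed)    # ['reviews (loaded via AJAX)']
```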

Remember, too, that Google compares your source page to the rendered page. If there are inconsistencies between them (like layout shifts), Google may not index your page, because it can be considered spammy.

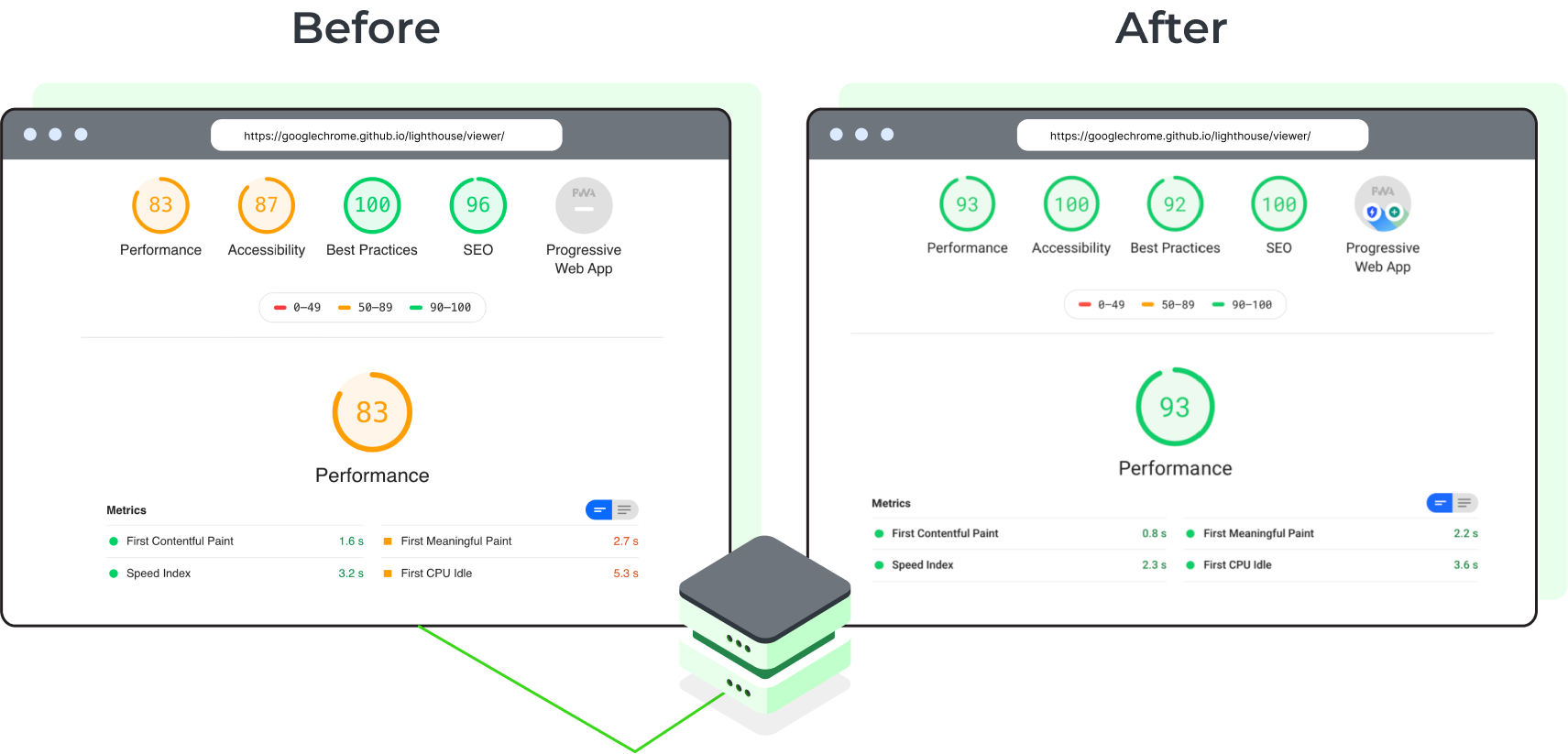
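You can spot content that only exists after rendering by comparing the visible text of the raw HTML with the rendered HTML (for example, the rendered HTML shown by Search Console’s URL Inspection tool, or a snapshot saved from a headless browser). The Python sketch below uses the standard-library `html.parser`; it’s a rough text-level diff under our own assumptions, not how Google compares pages. Anything it reports is content Google can only see if rendering succeeds.

```python
from html.parser import HTMLParser
from typing import List


class VisibleTextParser(HTMLParser):
    """Collects text nodes, skipping <script>, <style>, and <noscript> contents."""

    SKIPPED_TAGS = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = " ".join(data.split())  # normalize whitespace
        if text and not self.skip_depth:
            self.chunks.append(text)


def visible_text(html: str) -> List[str]:
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    return parser.chunks


def render_only_text(raw_html: str, rendered_html: str) -> List[str]:
    """Text chunks that appear only after JavaScript runs (the content most at risk)."""
    raw = set(visible_text(raw_html))
    return [chunk for chunk in visible_text(rendered_html) if chunk not in raw]
```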

Although it’s not directly related to indexation, it’s worth mentioning that rendering will impact page speed. Google will take into consideration the time it takes to render the page when measuring things like Core Web Vitals. A low score can harm your rankings.

If your pages rely on frameworks like Angular, React, or Vue to serve content, you’ll need to work extra hard to ensure search engines can pick up all the data they need. If you want to take this route, we’ve created a few tutorials to help you get started.

However, most workarounds can cost thousands of dollars and countless development hours to get working (not to mention the added server costs of solutions like server-side rendering).

Solution

By using Prerender.io, you can save money on these efforts and still get your desired indexation results.

In other words, Prerender.io will:

- Ensure search engines can read and index the entire content of your pages

- Improve PageSpeed scores – Prerender.io will do the heavy lifting for search engines

- Free crawl budget, allowing more pages to be crawled and indexed

- Prevent any Google caching issues by providing an up-to-date version of your page

- Keep content, layout, and user experience consistent

All of this without changing your backend technology, spending hundreds of dollars on processing and maintenance fees, or pulling dev time away from your product.

Curious to see just how much Prerender.io can impact your site’s potential? Try our free site audit tool to measure where your site stands now and where it can go once you fix your technical issues.

FAQs for JavaScript Indexation

1. What are the types of rendering processes for JavaScript?

There are three main types of rendering processes.

- Client-Side Rendering (CSR): In CSR, the user’s browser downloads the initial HTML from the server. This HTML contains minimal content and references to JavaScript files. Once downloaded, the browser executes the JavaScript code, which then fetches additional data and dynamically generates the final content you see on the webpage.

- Server-Side Rendering (SSR): With SSR, the web server itself is responsible for rendering the entire webpage, including the content generated by JavaScript. The server takes care of executing the JavaScript code and generates a fully formed HTML document. This HTML is then sent directly to the user’s browser.

- Static Site Generation (SSG): SSG pre-renders the HTML content of your website at build time. This means the website content is already generated and stored as static HTML files. When a user requests a webpage, the server simply delivers the pre-rendered HTML file, making it very fast for users.
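The difference between the three approaches comes down to what the first HTML response contains. In this Python sketch, the `PRODUCTS` data, the `/bundle.js` path, and the function names are hypothetical stand-ins, not any framework’s API: CSR returns an empty shell, SSR builds the full markup per request, and SSG builds the same markup once at build time.

```python
from html import escape
from typing import Dict

# Hypothetical catalog standing in for an API or database.
PRODUCTS: Dict[str, str] = {"trail-runner": "Trail Runner", "city-walker": "City Walker"}
APP_SCRIPT = '<script src="/bundle.js"></script>'


def csr_response(slug: str) -> str:
    """CSR: an empty shell; the browser's JavaScript later fetches the product and fills #app."""
    # slug is unused on purpose: the shell is identical for every product.
    return f'<div id="app"></div>{APP_SCRIPT}'


def ssr_response(slug: str) -> str:
    """SSR: the server builds the full markup on every request."""
    return f'<div id="app"><h1>{escape(PRODUCTS[slug])}</h1></div>{APP_SCRIPT}'


def ssg_build() -> Dict[str, str]:
    """SSG: render every page once at build time; the server later returns these files as-is."""
    return {f"/products/{slug}.html": ssr_response(slug) for slug in PRODUCTS}
```

Only the SSR and SSG responses contain the product name before any JavaScript runs, which is what a crawler sees without rendering.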

2. What are examples of JavaScript SEO tools and techniques?

- Prerender: This service helps pre-render your website’s JavaScript content, creating a static HTML version that search engines can easily crawl and index.

- Server-side Rendering (SSR): This technique renders your website’s content on the server before sending it to the browser, ensuring search engines can see the content even if they don’t execute JavaScript fully.

- Screaming Frog SEO Spider: This tool crawls your website and identifies potential SEO issues, including problems with JavaScript rendering.

- Google Search Console: This free tool from Google provides insights into how search engines see your website, including any JavaScript-related errors.

In conclusion, you now know that Prerender is your prime solution for fixing JavaScript indexation issues. Simply choose a pricing plan that fits the size of your website and follow our installation instructions.

Don’t worry, we’ll be here to help you through the process!