Career sites and job boards, such as Indeed and Glassdoor, often face unique SEO challenges due to their dynamic content and the sheer number of pages they host. Limited crawl budgets make things harder still, because it becomes more difficult to get new or updated job listings crawled and indexed before they go out of date.

In this crawl budget guide, we’ll explore crawl budget optimization strategies designed specifically to improve SEO for career websites and job boards. They will help you solve JS indexing issues, maximize your site’s visibility, and make sure the job listings users see in SERPs reflect your most up-to-date vacancies.

Why is Managing Crawl Budget a Challenge for Job Board Websites?

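Crawl budget refers to the number of pages Googlebot can and wants to crawl on your site within a given timeframe. It depends on two things: crawl capacity (how many requests your server can handle without slowing down) and crawl demand (how popular your pages are and how often their content changes). In practice, your site’s size, your server’s speed and capacity, and the frequency of content updates all play a role. Note that crawling comes first: a crawled page still has to be indexed before it can appear in search results.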

Related: Need a refresher about the role of crawl budget in SEO? Download our FREE crawl budget whitepaper now.

As mentioned, the challenge of managing your crawl budget for job marketplaces or career websites is amplified by two main factors:

- The sheer volume of pages, including job listings, employer profiles, categories, and more.

- Fast-expiring content: job postings are added, changed, or removed within short periods of time.

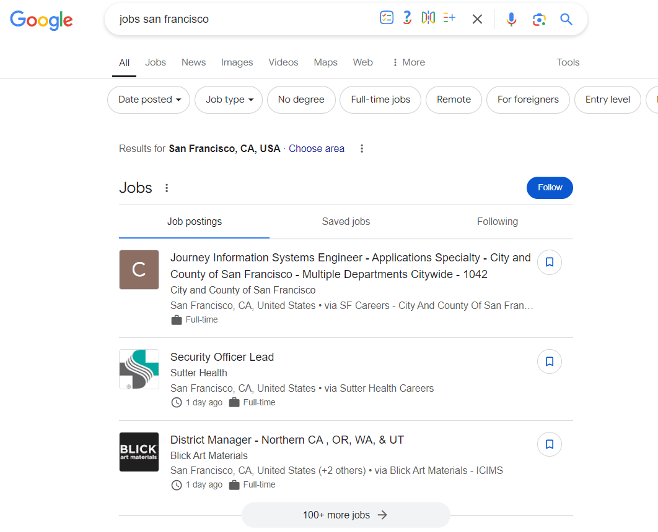

This combination demands efficient crawling and indexing so that users always see up-to-date job postings. Outdated or expired listings hurt the user experience and the job board’s relevance in search results. Then there’s Google for Jobs (GFJ), which aggregates job postings into a prominent section of the SERPs that often appears above traditional organic results.

To reach job seekers effectively, then, job and career sites must optimize for Google for Jobs as well as for traditional SERPs, and that requires careful management of crawl budget. If Googlebot spends its crawl budget on low-value URLs before it reaches your most important content, your job SEO suffers and fewer job seekers see your listings.

6 Strategies to Optimize Crawl Budget for Career Sites

Optimizing your crawl budget here means ensuring that Google prioritizes crawling high-value pages (job listings) over less important content. Here are a few tips on how to make the most out of your limited crawl budget.

1. Reduce JavaScript Rendering Delays

Many job boards and career sites are built with JavaScript to add dynamic interactions. While this is great for UX, it can significantly delay crawling and indexing, because Googlebot has to execute the JavaScript and render the content before it can evaluate what to index.

Some websites turn to server-side rendering (SSR) or techniques like lazy loading to work around this, but these come with significant drawbacks in cost and efficiency. For instance, SSR can cost $120,000 for building the framework alone, not counting maintenance and scaling costs. The best way to fix this issue is to use a prerendering tool like Prerender.io.

Top tip: Learn the financial and engineering benefits you’ll get when you adopt Prerender.io compared to building an SSR.

Prerender.io is a crawl budget optimization cheat code for anyone who wants to hack SEO for career websites. It acts as a bridge between your website and search engines: when a search engine bot, like Googlebot, requests a page, Prerender.io intercepts the request and delivers a pre-rendered, static HTML version.

This pre-rendered HTML means Googlebot doesn’t have to spend time processing your career website’s JavaScript. As a result, your job postings are crawled and indexed faster, increasing their visibility before the listings expire. Importantly, human users still get the full functionality of your dynamic, JavaScript-powered website, so you improve SEO without sacrificing user experience.
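To make the mechanism concrete, here is a minimal TypeScript sketch of the routing decision a prerendering middleware makes: crawler requests for pages go to a cache of static HTML, while everyone else gets the normal app. This is not Prerender.io’s code or API; the bot list, the `PRERENDER_BASE` cache URL, and `routeRequest` are hypothetical names used for illustration.

```typescript
// Sketch of prerender-style routing. All names and URLs here are
// hypothetical; this is not Prerender.io's actual configuration or API.

// Illustrative crawler user-agent substrings (lowercased).
const BOT_UA_PATTERNS: readonly string[] = [
  "googlebot",
  "bingbot",
  "duckduckbot",
  "yandexbot",
  "baiduspider",
];

// Static files (scripts, styles, images, fonts) are never prerendered.
const STATIC_ASSET = /\.(?:js|css|png|jpe?g|gif|svg|ico|woff2?)$/i;

// Hypothetical base URL of a prerender cache service.
const PRERENDER_BASE = "https://prerender.example.com/render?url=";

function isBot(userAgent: string): boolean {
  const ua = userAgent.toLowerCase();
  return BOT_UA_PATTERNS.some((pattern) => ua.includes(pattern));
}

type RenderDecision =
  | { kind: "prerendered"; fetchFrom: string } // serve cached static HTML
  | { kind: "normal" };                        // serve the regular JS app/file

function routeRequest(userAgent: string, pageUrl: string): RenderDecision {
  // Strip query string and fragment before checking the file extension.
  const path = pageUrl.split(/[?#]/)[0];
  if (isBot(userAgent) && !STATIC_ASSET.test(path)) {
    return { kind: "prerendered", fetchFrom: PRERENDER_BASE + encodeURIComponent(pageUrl) };
  }
  // Human visitors (and asset requests) get the normal JavaScript-powered site.
  return { kind: "normal" };
}
```

In a real deployment this decision lives in your web server, CDN, or framework middleware, and the crawler list needs ongoing maintenance; Prerender.io ships its own integrations for this rather than expecting you to hand-roll it.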

Learn more about how Prerender works and the benefits.

2. Implement Structured Data Correctly

Google for Jobs uses JobPosting schema markup to pull job listings into its interface. Proper implementation of structured data is crucial, as it ensures that Google correctly interprets your job postings and includes them in GFJ results.

Make sure your JobPosting schema includes the essential properties: title, description, datePosted, hiringOrganization, and jobLocation (Google’s required properties for most listings), plus validThrough (the expiration date) and directApply (whether candidates can apply directly on your page). Complete markup reduces confusion for Googlebot and supports more efficient crawling and indexing. Follow our JobPosting schema markup tutorial to implement it correctly.

Errors in your structured data can also waste crawl budget, since Googlebot may spend time on pages with faulty or incomplete markup. Validate your schema regularly with tools like Google’s Rich Results Test, and monitor Google Search Console for any issues.
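As an illustration, the TypeScript sketch below builds JobPosting JSON-LD from a hypothetical internal `Job` record and flags missing required properties before a page is published. The `Job` interface and helper names are assumptions; treat it as a pre-publish sanity check, not a substitute for the Rich Results Test.

```typescript
// Sketch: build JobPosting JSON-LD and pre-check required properties.
// The Job interface is a hypothetical internal model.

interface Job {
  title: string;
  descriptionHtml: string;
  datePosted: string;    // ISO 8601 date, e.g. "2024-05-01"
  validThrough?: string; // ISO 8601 date-time when the listing expires
  companyName: string;
  companyUrl?: string;
  city?: string;
  region?: string;
  country?: string;
  directApply?: boolean;
}

function toJobPostingJsonLd(job: Job): Record<string, unknown> {
  const ld: Record<string, unknown> = {
    "@context": "https://schema.org/",
    "@type": "JobPosting",
    title: job.title,
    description: job.descriptionHtml,
    datePosted: job.datePosted,
    hiringOrganization: {
      "@type": "Organization",
      name: job.companyName,
      ...(job.companyUrl ? { sameAs: job.companyUrl } : {}),
    },
  };
  if (job.validThrough) ld.validThrough = job.validThrough;
  if (job.directApply !== undefined) ld.directApply = job.directApply;
  if (job.city || job.region || job.country) {
    ld.jobLocation = {
      "@type": "Place",
      address: {
        "@type": "PostalAddress",
        ...(job.city ? { addressLocality: job.city } : {}),
        ...(job.region ? { addressRegion: job.region } : {}),
        ...(job.country ? { addressCountry: job.country } : {}),
      },
    };
  }
  return ld;
}

// Names of required properties that are missing or empty.
// (Fully remote jobs can use jobLocationType/applicantLocationRequirements
// instead of jobLocation; this sketch does not handle that case.)
function missingRequired(ld: Record<string, unknown>): string[] {
  const required = ["title", "description", "datePosted", "hiringOrganization", "jobLocation"];
  return required.filter((key) => ld[key] === undefined || ld[key] === "");
}

// Serialize as a <script> tag. "<" is escaped so a description containing
// "</script>" cannot break out of the tag; JSON parsers read \u003c as "<".
function toScriptTag(ld: Record<string, unknown>): string {
  const json = JSON.stringify(ld).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}
```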

3. Optimize Pagination with rel="next" and rel="prev"

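To help paginated job listings get crawled efficiently, implement rel="next" and rel="prev" tags on your paginated series. These tags describe the relationship between pages in the series. Note, however, that Google announced in 2019 that it no longer uses them as an indexing signal, although other search engines, such as Bing, may still use them. For Googlebot, what matters most is that each page in the series has its own URL and links to the previous and next pages with regular, crawlable <a href> links, so all relevant listings can be discovered without wasting resources.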
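Here is a minimal TypeScript sketch of the head and navigation markup for one page in a paginated job list. It assumes a hypothetical `?page=N` URL scheme; each page gets a self-referencing canonical, optional rel="prev"/"next" hints, and plain crawlable links.

```typescript
// Sketch: pagination markup for one page of a job list.
// The "?page=N" URL scheme is a hypothetical example.

interface PaginationMarkup {
  headLinks: string[]; // tags for the page <head>
  navLinks: string[];  // visible, crawlable links for the page body
}

// Page 1 lives at the clean base URL; later pages use ?page=N.
function pageUrl(baseUrl: string, page: number): string {
  return page === 1 ? baseUrl : `${baseUrl}?page=${page}`;
}

function paginationMarkup(baseUrl: string, page: number, totalPages: number): PaginationMarkup {
  // Each page canonicalizes to itself (not to page 1), so its listings stay discoverable.
  const headLinks: string[] = [`<link rel="canonical" href="${pageUrl(baseUrl, page)}">`];
  const navLinks: string[] = [];
  if (page > 1) {
    const prev = pageUrl(baseUrl, page - 1);
    headLinks.push(`<link rel="prev" href="${prev}">`); // hint for engines that use it
    navLinks.push(`<a href="${prev}">Previous</a>`);     // regular link crawlers can follow
  }
  if (page < totalPages) {
    const next = pageUrl(baseUrl, page + 1);
    headLinks.push(`<link rel="next" href="${next}">`);
    navLinks.push(`<a href="${next}">Next</a>`);
  }
  return { headLinks, navLinks };
}
```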

Also, consider grouping listings by date or category to reduce the number of paginated pages Google needs to crawl. For example, you can show all job listings for a specific week on one page instead of spreading them across several pages.

4. Monitor Crawl Errors and Fix Them Promptly

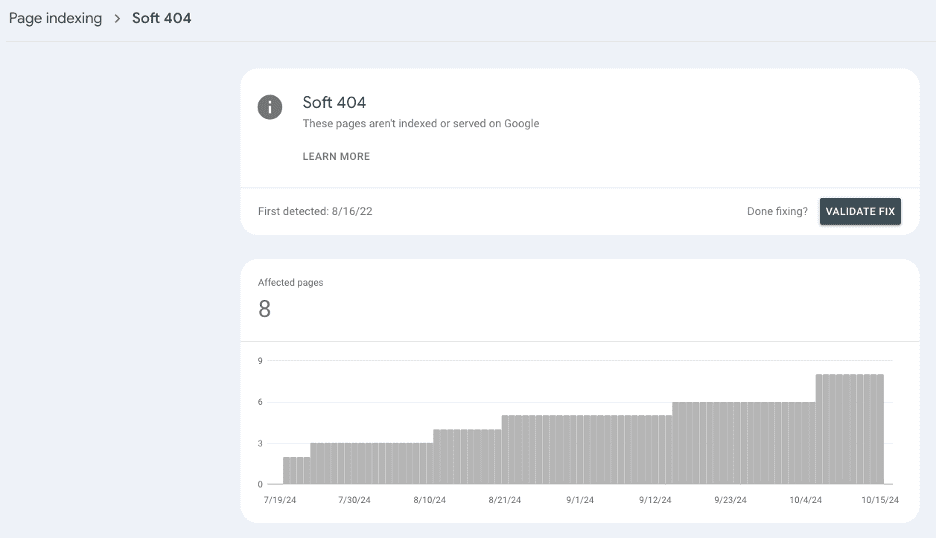

Google Search Console provides insights into crawl errors that may be affecting your site’s performance. Regularly monitor your crawl stats to identify issues such as 404 errors. If expired jobs are returning 404 errors, redirect them to relevant pages, such as a similar listing or category page. This preserves link equity and helps Googlebot avoid wasting crawl budget on dead-end pages. Learn how to fix 404 errors here.
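The redirect-or-remove logic for expired listings could look like the TypeScript sketch below. The `ExpiredJob` shape, the URL patterns, and the preference order (similar open job first, then category page) are assumptions; when no relevant page exists, it returns 410 Gone instead of redirecting.

```typescript
// Sketch: choose a response for an expired job URL.
// Types, URL patterns, and lookup rules are hypothetical.

interface ExpiredJob {
  id: string;
  categorySlug?: string;
  similarOpenJobId?: string; // e.g. same title and city, still open
}

type HttpResponse =
  | { status: 301; location: string }
  | { status: 410 };

function respondToExpiredJob(job: ExpiredJob): HttpResponse {
  // Prefer the most closely related live page.
  if (job.similarOpenJobId) {
    return { status: 301, location: `/jobs/${job.similarOpenJobId}` };
  }
  if (job.categorySlug) {
    return { status: 301, location: `/jobs/category/${job.categorySlug}` };
  }
  // 410 Gone: the listing is no longer available and won't come back.
  return { status: 410 };
}
```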

You can also use GSC to monitor soft 404 errors (pages that return a 200 status but have no meaningful content) and infinite redirect loops. Both can trap Googlebot in crawling unnecessary pages and waste your crawl budget.
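To catch loops and long chains before Googlebot hits them, you can audit your redirect rules offline. This TypeScript sketch assumes your redirects can be exported as a map of source path to target path; `auditRedirects` and the two-hop threshold are illustrative choices, not a Search Console feature.

```typescript
// Sketch: find redirect loops and long chains in a redirect map
// (source path -> target path). Map format and threshold are assumptions.

interface RedirectIssue {
  kind: "loop" | "chain";
  start: string;
  path: string[]; // every URL visited, starting with `start`
}

function auditRedirects(redirects: Map<string, string>, maxHops = 2): RedirectIssue[] {
  const issues: RedirectIssue[] = [];
  for (const start of Array.from(redirects.keys())) {
    const path: string[] = [start];
    const seen = new Set<string>([start]);
    let current = start;
    let isLoop = false;
    // Follow redirects until a URL that doesn't redirect, or a repeat visit.
    while (redirects.has(current)) {
      const next = redirects.get(current) as string;
      path.push(next);
      if (seen.has(next)) {
        isLoop = true;
        break;
      }
      seen.add(next);
      current = next;
    }
    const hops = path.length - 1;
    if (isLoop) {
      issues.push({ kind: "loop", start, path });
    } else if (hops > maxHops) {
      issues.push({ kind: "chain", start, path });
    }
  }
  return issues;
}
```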

5. Adjust Crawl Rate Settings

For large career sites and job boards, managing crawl rate settings is critical to balancing server performance with efficient crawling by Googlebot. Since job boards often feature thousands or millions of job listings, search engines must crawl pages quickly and frequently to capture the most up-to-date listings before expiration.

However, allowing Google to crawl too much at once can overload your server, impacting both your site’s performance and user experience.

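One effective strategy for large career sites is to plan around the time of day. During peak hours, when user traffic is high, heavy crawling could slow down the site, while during off-peak hours, such as overnight or early morning, your server may have spare capacity. Keep in mind that Googlebot can’t be scheduled directly: Google retired the crawl rate limiter tool in Search Console in early 2024, and Googlebot adjusts its crawl rate automatically based on how your server responds. Fast, healthy responses let it crawl more, while slow responses or 500, 503, and 429 errors make it back off. In practice, you shape crawl activity by keeping response times fast, especially off-peak, and shedding crawler load only briefly when the server is genuinely overloaded.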
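As a sketch of load shedding, the TypeScript function below returns 503 with a `Retry-After` header to crawler requests only while server utilization is above a threshold. The threshold, the `LoadSnapshot` metric, and the one-hour `Retry-After` value are hypothetical. Google documents 500, 503, and 429 responses as a way to slow Googlebot temporarily, so treat this as a short-term safety valve rather than a permanent setting.

```typescript
// Sketch: shed crawler load only while the server is genuinely busy.
// Threshold, load metric, and Retry-After value are hypothetical choices.

interface LoadSnapshot {
  activeRequests: number;
  maxCapacity: number;
}

interface ShedDecision {
  status: 200 | 503;
  headers: Record<string, string>;
}

const SHED_THRESHOLD = 0.85;      // shed crawler traffic at or above 85% utilization
const RETRY_AFTER_SECONDS = 3600; // suggest retrying in one hour

function decideForRequest(isCrawler: boolean, load: LoadSnapshot): ShedDecision {
  const utilization = load.activeRequests / load.maxCapacity;
  if (isCrawler && utilization >= SHED_THRESHOLD) {
    // Retry-After is standard HTTP; crawlers are not guaranteed to honor it.
    return { status: 503, headers: { "Retry-After": String(RETRY_AFTER_SECONDS) } };
  }
  // Human visitors, and crawlers when there is spare capacity, are served normally.
  return { status: 200, headers: {} };
}
```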

6. Optimize for Mobile User Job Seekers

Optimizing job board sites for mobile devices can improve crawl budget efficiency, as mobile-optimized pages tend to load faster and have fewer elements for Google to process. A mobile-first design also tends to encourage a shallow site structure, reducing the number of links Googlebot has to follow to reach critical pages like job listings. Lower crawl depth lets search engines focus on high-priority pages such as new or soon-to-expire job postings.
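You can measure crawl depth on your own site with a breadth-first search over the internal link graph. In this TypeScript sketch, the graph is a hypothetical map of page path to linked paths (for example, exported from a site crawl), the `/jobs/<id>` URL pattern is an assumption, and the depth limit of 3 is an arbitrary example rather than a Google rule.

```typescript
// Sketch: click depth via breadth-first search over an internal link graph.
// Graph source, URL pattern, and depth limit are assumptions.

type LinkGraph = Map<string, string[]>;

// Hypothetical pattern for individual job listing URLs, e.g. "/jobs/101".
const JOB_URL = /^\/jobs\/\d+$/;

function clickDepths(graph: LinkGraph, home: string): Map<string, number> {
  const depth = new Map<string, number>([[home, 0]]);
  const queue: string[] = [home];
  // Index-based queue: BFS visits pages in order of increasing depth.
  for (let i = 0; i < queue.length; i++) {
    const page = queue[i];
    const d = depth.get(page) as number;
    for (const linked of graph.get(page) ?? []) {
      if (!depth.has(linked)) {
        depth.set(linked, d + 1);
        queue.push(linked);
      }
    }
  }
  return depth; // pages unreachable from `home` (orphans) are absent
}

function deepJobPages(graph: LinkGraph, home: string, maxDepth = 3): string[] {
  return Array.from(clickDepths(graph, home).entries())
    .filter(([url, d]) => JOB_URL.test(url) && d > maxDepth)
    .map(([url]) => url);
}
```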

A streamlined mobile experience also means fewer duplicate content issues: serving one responsive version of each page, rather than separate desktop and mobile URLs, keeps crawl budget from being wasted on redundant pages. Discover some best practices to find, prevent, and fix content duplication.

Healthier Crawl Budget Means Better Job Listing Indexing

Given the dynamic nature of job marketplaces and career sites, where postings are frequently updated or removed, effective crawl budget management is crucial for maintaining visibility in search results. The techniques we covered help make the crawling process more efficient.

While all of the crawl budget optimization techniques listed above are great, if we had to pick the most important, it’d be reducing JS indexing delays with Prerender.io. By generating static HTML snapshots of your dynamic pages, Prerender.io enables bots to crawl your site faster, without the delays caused by rendering JavaScript. This ensures that your most important content, like new job listings, is indexed promptly.

Sign up for free and start improving your job site’s crawl budget spending!

FAQs

Do Expired Job Listings Affect Crawl Budget?

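Yes, expired job listings can waste crawl budget. Handle them properly by removing them (returning a 404 or 410 status), redirecting them to relevant pages, or adding a noindex tag. Keep in mind that noindex keeps a page out of search results but doesn’t stop Googlebot from crawling it, since it has to fetch the page to see the tag.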

How Can I Increase My Site’s Crawl Budget?

To increase crawl budget, optimize site speed, improve internal linking, keep an up-to-date XML sitemap, remove or noindex low-value pages, and make sure your robots.txt file is configured correctly.
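For the sitemap tip, a minimal TypeScript sketch that lists only open job listings with accurate `<lastmod>` dates might look like this. The `OpenJob` shape is hypothetical; the XML format follows the sitemaps.org protocol.

```typescript
// Sketch: XML sitemap of open job listings with <lastmod> dates.
// Expired jobs are left out. The OpenJob shape is hypothetical.

interface OpenJob {
  url: string; // absolute URL
  updatedAt: Date;
  validThrough?: Date;
}

// Escape characters that are not allowed raw in XML text.
function escapeXml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildJobSitemap(jobs: OpenJob[], now: Date = new Date()): string {
  const entries = jobs
    .filter((job) => !job.validThrough || job.validThrough.getTime() > now.getTime())
    .map(
      (job) =>
        `  <url>\n    <loc>${escapeXml(job.url)}</loc>\n    <lastmod>${job.updatedAt.toISOString()}</lastmod>\n  </url>`
    );
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}
```

A single sitemap file is limited to 50,000 URLs (and 50 MB uncompressed), so a large job board would split the output across several files listed in a sitemap index.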

How Do I Know If Poor JS Rendering Is Affecting My Website?

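Common signs of poor JavaScript rendering include reduced visibility, slower indexing, an inconsistent user experience, and lower search rankings. To confirm it, use the URL Inspection tool in Google Search Console to view the HTML Googlebot rendered for a page and compare it with your live page. If key content, like job titles or descriptions, is missing, rendering is likely the problem.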
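A quick complementary check is to look at the raw HTML your server returns before any JavaScript runs. This TypeScript sketch takes that HTML (for example, the body of a plain HTTP request) and reports which expected strings, such as a job title, are missing. The tag stripping is deliberately crude and the function names are hypothetical.

```typescript
// Sketch: which key job details are absent from the pre-JavaScript HTML?
// Crude text extraction; function names are hypothetical.

function visibleTextFromHtml(html: string): string {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, " ") // drop scripts (content built by JS)
    .replace(/<style[\s\S]*?<\/style>/gi, " ")   // drop styles
    .replace(/<[^>]+>/g, " ")                    // drop remaining tags
    .replace(/\s+/g, " ")
    .toLowerCase();
}

// Returns the expected strings that do NOT appear in the raw HTML's text.
function missingFromRawHtml(rawHtml: string, expected: string[]): string[] {
  const text = visibleTextFromHtml(rawHtml);
  return expected.filter((value) => !text.includes(value.toLowerCase()));
}
```

If the list comes back non-empty for content users can see in the browser, that content only exists after rendering, which is exactly the situation where prerendering helps.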

What Are Technical SEO Tips for Job Boards?

A few key ones: optimizing for mobile, writing compelling meta descriptions, implementing proper structured data, and improving your JavaScript site for SEO with a tool like Prerender.io. Try it for free.