Your website hosts two types of visitors: humans and bots. Human visitors browse your pages, engage with content, and convert into customers. On the other hand, bots are automated programs that visit your site to perform specific tasks, some of which are beneficial and others harmful.

Both bot and human visits affect your website traffic; the challenge is determining the actor behind them. Are those increasing pageviews from potential customers or just bot activity? Distinguishing between human and bot traffic isn’t just about cybersecurity: it’s also key to accurate analytics, smarter SEO decisions, and improving how AI crawlers interpret your site.

In this guide, you’ll learn how to differentiate between human and bot traffic, identify suspicious bot activity, and implement strategies to protect your site. You’ll also find tools that can help monitor and manage your website traffic.

What Is Bot Traffic and Why Does It Matter for SEO?

In simple terms, bot traffic refers to visits to your website generated by automated software programs (aka bots). Bots make up a significant part of online activity, and their impact on your site can be positive or negative.

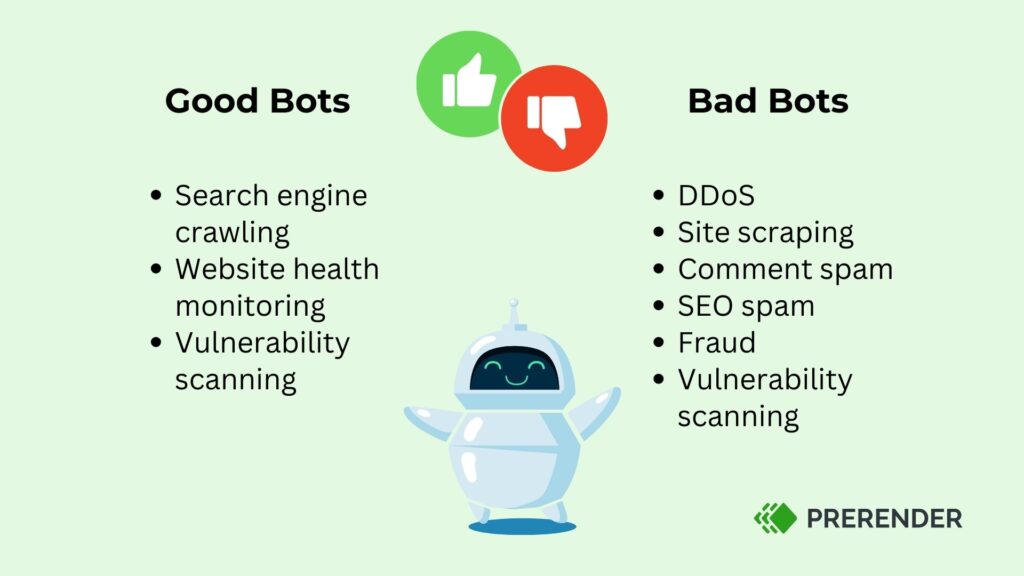

There are “good” bots, such as search engine crawlers (e.g., Googlebot) and AI crawlers (GPTBot, PerplexityBot, etc.), that help your content get discovered in search results and AI-powered tools. Other good bots assist with tasks like monitoring website performance, optimizing ad displays, or providing customer support via chatbots.

Read: Understanding Web Crawlers: Traditional vs. OpenAI’s Bots

However, not all bots come bearing gifts. There are “bad” bots that force their way into your site, misuse its resources, distort your analytics, and compromise security. These include scrapers that steal data, spam bots that flood forms or comment sections, and DDoS bots that overwhelm servers. It’s these malicious bots that give bot traffic a bad reputation, and rightly so.

Ultimately, the impact of bot traffic, whether good or bad, depends on the bot’s intention and your site’s preparedness to handle it.

Resource: Check out this thorough guide on optimizing your website for humans and bots.

Why Distinguishing Bot vs. Human Traffic Matters

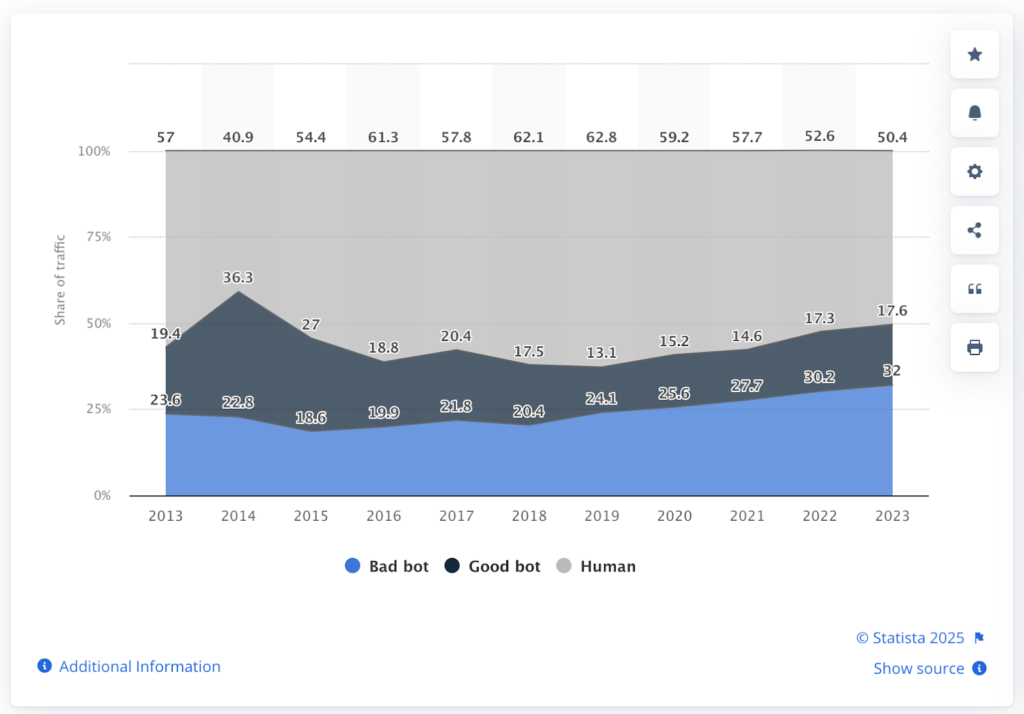

A 2024 study by Statista revealed that humans made up just 50.4% of all internet traffic, meaning bots accounted for nearly half of it, and a substantial portion of that bot traffic came from bad bots.

As a website owner, this raises an important concern: if a large share of your traffic is bot-driven, how can you accurately measure key performance indicators like engagement, conversion rates, or marketing ROI? The answer is you can’t, at least not without filtering out bot activity.

By identifying and differentiating between bot and human traffic, you can:

- Accurately assess your site’s real performance and health.

- Make better data-driven decisions free from bot-skewed analytics.

- Optimize your marketing and SEO efforts for genuine visitors.

- Protect your site and mitigate risks associated with malicious bots.

How to Detect Bot Traffic vs. Human Visitors

Now that we’ve covered the types of bots and the risks they pose, let’s look at how to detect them. Here are six methods to differentiate between bot and human traffic:

1. Look for Visible Behavioral Patterns

Human visitors typically interact with your website in unpredictable and dynamic ways. They’ll click on different links, scroll through your content, spend time reading articles, fill out forms, share your articles, or even make a purchase.

Bots, however, behave predictably. They tend to send repetitive requests, visit multiple pages within seconds, or not engage with your content at all. For example, consistent spikes in pageviews paired with negligible time on page may indicate bot activity. By analyzing these patterns, you can flag actions or traffic that seem “too robotic” to be human.
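
To make the “too robotic” idea concrete, here’s a minimal Python sketch that flags sessions with many pageviews but almost no time per page. It assumes you can export per-session pageview counts and durations from your analytics or logs; the `Session` shape and the thresholds (10 pageviews, under 2 seconds per page) are illustrative assumptions, not industry standards.

```python
from dataclasses import dataclass


@dataclass
class Session:
    session_id: str
    pageviews: int
    duration_seconds: float  # time between the session's first and last hit


def looks_robotic(s: Session, min_pageviews: int = 10, max_seconds_per_page: float = 2.0) -> bool:
    """Flag sessions that view many pages while spending almost no time on each."""
    if s.pageviews < min_pageviews:
        return False  # too few pages to judge
    seconds_per_page = s.duration_seconds / s.pageviews
    return seconds_per_page < max_seconds_per_page


# Hypothetical sessions for illustration.
sessions = [
    Session("a", pageviews=4, duration_seconds=310.0),   # typical reader
    Session("b", pageviews=45, duration_seconds=30.0),   # 45 pages in 30 seconds
    Session("c", pageviews=12, duration_seconds=600.0),  # heavy but human-paced
]
flagged = [s.session_id for s in sessions if looks_robotic(s)]
print(flagged)  # ['b']
```

Treat a flag as a reason to investigate, not as proof: some legitimate users, or your own uptime monitors, can trip simple rules like this one.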

2. Analyze Traffic Spikes and Timing

Increasing site traffic is one of the joys of every website owner. However, a sudden spike in visitors shouldn’t excite you too much until you understand the source and nature of these visits. While viral content can cause legitimate surges, consistent, unusual spikes are a red flag.

If your website typically sees low traffic during certain hours but you notice consistent spikes at those times, dig deeper: bots often generate traffic in bursts, especially during malicious activity. Close monitoring helps you spot suspicious activity early and take the necessary steps to protect your site.

For more clarity, analyze your human traffic in depth. Examine its patterns, timing, sources, and behavior so you have a baseline against which bot activity stands out.
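
One simple way to spot off-hours bursts is to compare each hour’s visits against that same hour on previous days. The sketch below uses a z-score (how many standard deviations above the historical mean a count sits); the data format, the sample numbers, and the threshold of 3 are assumptions you’d tune for your own site.

```python
from statistics import mean, pstdev


def find_spikes(history, today, z_threshold=3.0):
    """Return the hours (0-23) whose visit count today is far above that hour's usual level.

    history: hour -> list of visit counts for that hour on previous days
    today:   hour -> visit count for that hour today
    """
    spikes = []
    for hour, count in sorted(today.items()):
        past = history.get(hour, [])
        if len(past) < 2:
            continue  # not enough history to judge this hour
        spread = pstdev(past) or 1.0  # avoid dividing by zero when history is flat
        if (count - mean(past)) / spread > z_threshold:
            spikes.append(hour)
    return spikes


# Hypothetical numbers: 3 a.m. is normally quiet, 2 p.m. is normally busy.
history = {3: [12, 9, 11, 10, 13], 14: [300, 280, 320, 310, 295]}
today = {3: 240, 14: 330}
print(find_spikes(history, today))  # [3]
```

Here 240 visits at 3 a.m. stands far outside that hour’s usual range, while 330 visits at 2 p.m. is only a modest bump over a busy baseline.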

Resource: Experienced a Sudden Drop in Traffic? Here’s How To Fix It

3. Pay Attention to User Agent Strings

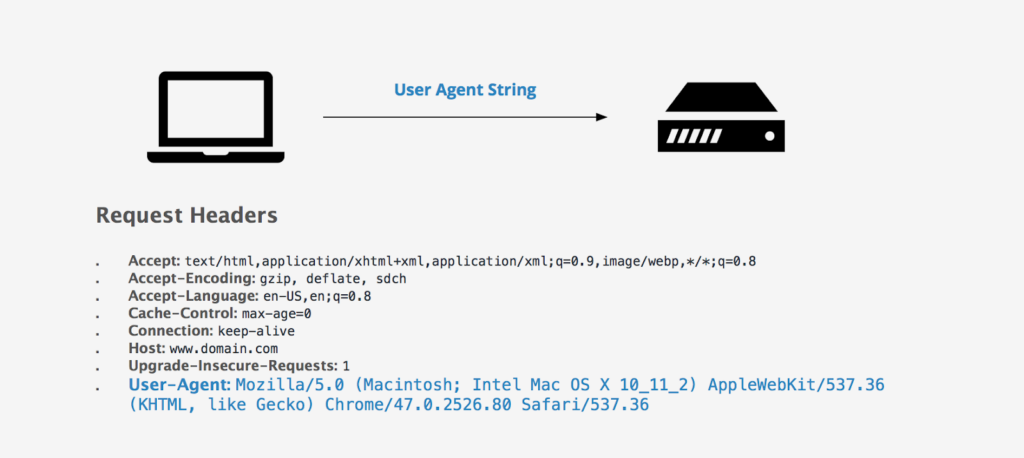

When users visit your site, their browser sends a user agent string to your server, which identifies the browser and operating system. Bots often use generic or outdated user agents to mask themselves and blend in with legitimate traffic.

For instance, if your analytics show a large percentage of visitors using outdated browsers like Internet Explorer 6 (which is largely obsolete), it’s likely bot traffic. You can identify and flag these suspicious traffic sources with tools like server logs or web application firewalls (WAFs).
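
If you have access to raw request logs, a rough first pass can be as simple as pattern-matching user agents. This Python sketch flags missing user agents, Internet Explorer 6 and older, and a few generic HTTP client tools; the pattern list is a hypothetical starting point, not a complete bot signature database.

```python
import re
from typing import Optional

# Illustrative patterns only; production setups rely on maintained bot lists or a WAF.
SUSPICIOUS_UA_PATTERNS = [
    re.compile(r"\bMSIE [1-6]\.0\b"),  # Internet Explorer 6 and older
    re.compile(r"^(python-requests|curl|wget)/", re.IGNORECASE),  # generic HTTP libraries/tools
]


def is_suspicious_user_agent(user_agent: Optional[str]) -> bool:
    """Flag missing, obsolete, or generic user agents for closer review (not automatic blocking)."""
    if not user_agent or not user_agent.strip():
        return True  # real browsers virtually always send a user agent
    return any(pattern.search(user_agent) for pattern in SUSPICIOUS_UA_PATTERNS)


print(is_suspicious_user_agent("Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)"))  # True
print(is_suspicious_user_agent("python-requests/2.31.0"))  # True
```

Keep in mind that user agents are trivially spoofed, so a normal-looking user agent proves nothing on its own, and some legitimate tools (including your own scripts) use generic clients.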

4. Check IP Addresses and Geolocations

Malicious bots often operate from server farms or unexpected geolocations. A quick check of your server logs can reveal clusters of traffic originating from specific IP ranges that don’t match your usual audience demographics.

For example, if your business primarily serves local customers, but a large portion of traffic suddenly comes from unknown IP addresses in a distant country, it could indicate bot activity.

Moreover, bots are often programmed to attack or scrape from a single location until they’re blocked. So, repeated requests from the same IP address are a strong indicator of bot behavior.
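
To find IPs making repeated requests, you can count hits per client address in your access logs. This sketch assumes logs in the Common or Combined Log Format commonly used by Apache and Nginx, where the client IP is the first field; the threshold of 100 requests is arbitrary and should reflect the time window your log covers.

```python
from collections import Counter


def top_talkers(log_lines, threshold=100):
    """Return (ip, request_count) pairs for clients that exceeded `threshold` requests."""
    counts = Counter()
    for line in log_lines:
        fields = line.split(maxsplit=1)
        if fields:  # skip blank lines
            counts[fields[0]] += 1  # the client IP is the first field in Common/Combined Log Format
    return [(ip, n) for ip, n in counts.most_common() if n > threshold]


# Hypothetical log excerpt using documentation-only IP ranges.
scraper_line = '203.0.113.7 - - [10/Oct/2024:13:55:36 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "python-requests/2.31.0"'
visitor_line = '198.51.100.23 - - [10/Oct/2024:13:56:02 +0000] "GET /blog HTTP/1.1" 200 8431 "-" "Mozilla/5.0"'
lines = [scraper_line] * 150 + [visitor_line] * 3
print(top_talkers(lines))  # [('203.0.113.7', 150)]
```

If your site sits behind a CDN or reverse proxy, the first field may be the proxy’s address; in that case you’d need to log the real client IP (for example, from the `X-Forwarded-For` header) instead. Shared IPs, such as corporate networks, can also produce high counts from many real people.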

5. Implement reCAPTCHA

A reCAPTCHA test helps determine whether a user is a human or a bot by requiring tasks like identifying objects in an image or typing out distorted text. Most humans can complete these tasks easily, while simple bots cannot. Newer versions, such as reCAPTCHA v3, run invisibly and assign each request a score instead of showing a challenge.

However, reCAPTCHA is not foolproof: sophisticated bots can bypass it. Treat it as a first line of defense against less advanced bots rather than a complete solution.
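
The challenge itself runs in the visitor’s browser, but the result only counts if your server verifies it. Google’s documented flow is to POST the token from the form, together with your secret key, to the `siteverify` endpoint and inspect the JSON response. The minimal Python sketch below uses only the standard library; the 0.5 score threshold for reCAPTCHA v3 is an assumed starting point you’d adjust based on your own traffic, and `RECAPTCHA_SECRET`, `token_from_form`, and `client_ip` in the usage comment are hypothetical names.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

SITEVERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def verify_recaptcha(secret_key: str, token: str, remote_ip: Optional[str] = None) -> dict:
    """POST the client's reCAPTCHA token to Google's siteverify endpoint and return the JSON reply."""
    fields = {"secret": secret_key, "response": token}
    if remote_ip:
        fields["remoteip"] = remote_ip  # optional parameter
    body = urllib.parse.urlencode(fields).encode()
    with urllib.request.urlopen(SITEVERIFY_URL, data=body, timeout=5) as reply:
        return json.load(reply)


def is_probably_human(result: dict, min_score: float = 0.5) -> bool:
    """Interpret a siteverify reply: v2 only reports `success`; v3 also returns a 0.0-1.0 `score`."""
    if not result.get("success"):
        return False
    score = result.get("score")
    return score is None or score >= min_score


# Example (needs network access and real keys; names are hypothetical):
# result = verify_recaptcha(RECAPTCHA_SECRET, token_from_form, client_ip)
# if not is_probably_human(result): reject the submission or ask for another check
```

`is_probably_human` is kept separate from the network call so the decision logic is easy to test. In production you’d also want to check fields such as `hostname` (and `action` for v3) against what you expect.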

6. Leverage Traffic Monitoring Tools

Traffic monitoring tools, such as Google Analytics and Cloudflare, offer bot detection features that analyze session duration, bounce rates, pages per session, unusual geographic locations, and other metrics to filter out automated traffic.

Google Analytics 4, for example, automatically excludes traffic from known bots and spiders from your reports, giving you more accurate data. Similarly, Cloudflare offers real-time bot mitigation, blocking suspicious bots before they can affect your site.

Can All Bots Be Blocked?

No. Not every bot can be blocked, and not every bot should be. While malicious bots disrupt and harm websites, good bots are important to your website and the internet’s ecosystem, and blocking them can harm your site’s visibility, SEO, AI discoverability, and overall functionality.

Instead, focus on identifying and filtering out malicious bots while allowing the helpful bots to function.

Read: How to Easily Optimize Your Website for AI Crawlers

How to Manage Bot Traffic and Keep Your Data Accurate

Using targeted bot filtering strategies, you can manage traffic sources, protect your site, and make better data-driven decisions. Below are eight practical strategies to manage bot traffic:

1. Identify the Good and Bad Bots

Start by identifying helpful and harmful bots. Google Search Console’s Crawl Stats report shows how Google’s own crawlers interact with your site, while your server logs reveal every other bot that visits. To conserve server resources, permit access only to useful bots, particularly those assisting with SEO, marketing, and customer support.
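
A user agent that says “Googlebot” proves nothing, since any scraper can copy it. Google recommends verifying its crawlers with a reverse DNS lookup followed by a forward DNS lookup, which this Python sketch implements. The DNS functions are injectable so the demo can run offline with stubbed records; the hostnames and IPs in the stub tables are hypothetical examples. Note that `socket.gethostbyname_ex` only returns IPv4 addresses, so IPv6 crawler traffic would need `socket.getaddrinfo` instead.

```python
import socket

GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Verify a claimed Googlebot IP with a reverse DNS lookup followed by a forward DNS lookup."""
    try:
        hostname, _aliases, _addrs = reverse_lookup(ip)
    except OSError:  # socket.herror and socket.gaierror both subclass OSError
        return False
    if not hostname.endswith(GOOGLE_CRAWLER_DOMAINS):
        return False  # the user agent may say Googlebot, but the IP doesn't belong to Google
    try:
        _name, _aliases, forward_ips = forward_lookup(hostname)
    except OSError:
        return False
    return ip in forward_ips  # the hostname must resolve back to the same IP


# Offline demo with stubbed DNS records (hypothetical data, not live lookups).
_REVERSE = {
    "66.249.66.1": "crawl-66-249-66-1.googlebot.com",
    "192.0.2.5": "crawl-66-249-66-1.googlebot.com",  # spoofed PTR record
}
_FORWARD = {"crawl-66-249-66-1.googlebot.com": ["66.249.66.1"]}


def fake_reverse(ip):
    if ip not in _REVERSE:
        raise socket.herror(1, "Unknown host")
    return _REVERSE[ip], [], [ip]


def fake_forward(host):
    if host not in _FORWARD:
        raise socket.gaierror(-2, "Name or service not known")
    return host, [], _FORWARD[host]


for ip in ("66.249.66.1", "192.0.2.5", "198.51.100.9"):
    print(ip, is_verified_googlebot(ip, fake_reverse, fake_forward))
```

The second IP shows why the forward lookup matters: its reverse record claims a Googlebot hostname, but that hostname does not resolve back to it.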

2. Challenge the Bots Using reCAPTCHA and Two-Factor Authentication (2FA)

reCAPTCHA remains one of the most common ways to restrict bot activity, but it can frustrate genuine users if implemented carelessly.

A good practice is to deploy it strategically, only in sensitive areas such as account registration pages, login forms, or checkout processes, where bots can spam or cause significant damage.

For enhanced security, you can pair reCAPTCHA with 2FA, which requires a second form of verification such as a code sent to a mobile device or a token generated by an authenticator app. 2FA reduces the risk of unauthorized access: even if a bot obtains or guesses a password, it cannot easily supply the second factor. However, like reCAPTCHA tests, 2FA should be implemented strategically and in a user-friendly way to maintain a positive user experience.
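
Authenticator-app tokens are usually time-based one-time passwords (TOTP, defined in RFC 6238). To show what the second factor actually checks, here’s a minimal standard-library sketch of generating and verifying a 6-digit TOTP code. It’s an illustration only: a real deployment should use a vetted library, store secrets securely, and rate-limit verification attempts.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Generate a TOTP code (RFC 6238) with HMAC-SHA1, the common authenticator-app default."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)  # number of time steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation from RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, for_time: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current time step or up to `window` steps before/after it."""
    if for_time is None:
        for_time = time.time()
    return any(
        hmac.compare_digest(totp(secret, for_time + i * step, step), submitted)
        for i in range(-window, window + 1)
    )


# RFC 6238 test secret (ASCII "12345678901234567890"); never hard-code real secrets.
SECRET = b"12345678901234567890"
print(totp(SECRET, for_time=59))                    # 287082
print(verify_totp(SECRET, "287082", for_time=89))   # True (one step later, within the window)
print(verify_totp(SECRET, "287082", for_time=149))  # False (too old)
```

The `window=1` default accepts the previous and next 30-second step to tolerate clock drift between the server and the user’s phone; that tolerance is an assumption, not a requirement.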

3. Regularly Monitor Your Site’s Traffic for Unusual Patterns

Monitoring your site’s traffic for irregularities is another way to protect your site from bad bot activity. You can set up alerts for abnormal traffic patterns and investigate their sources to quickly identify and mitigate bot activity. Use analytics tools like Google Analytics or server log analysis to identify anomalies like:

- Unusual traffic spikes

- Unusually high bounce rates

- Excessive requests from specific IP addresses

4. Customize Your Robots.txt Files

A robots.txt file tells web crawlers which parts of your site they’re allowed and not allowed to crawl. Keep two limits in mind: it controls crawling rather than indexing (a blocked URL can still be indexed if other sites link to it), and it is advisory, so well-behaved bots honor it while malicious bots can simply ignore it. Use it to steer legitimate crawlers, not as a security control.

Regularly audit your robots.txt file to ensure it aligns with your SEO goals and doesn’t unintentionally block essential crawlers. For best practices, check out our robots.txt guide.
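
As an illustration, the robots.txt below blocks a hypothetical crawler named `BadScraperBot` everywhere and keeps all other bots out of `/admin/` and `/checkout/`. Python’s standard `urllib.robotparser` module lets you check how a compliant crawler would read the file before you deploy it. The paths and bot name are placeholders, not recommendations.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt; "BadScraperBot" is a hypothetical crawler name.
ROBOTS_TXT = """\
User-agent: BadScraperBot
Disallow: /

User-agent: *
Disallow: /admin/
Disallow: /checkout/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, url in [
    ("Googlebot", "https://example.com/blog/bot-traffic"),      # allowed
    ("Googlebot", "https://example.com/admin/settings"),        # disallowed for all bots
    ("BadScraperBot", "https://example.com/blog/bot-traffic"),  # disallowed everywhere
]:
    print(agent, url, parser.can_fetch(agent, url))
```

To test your live file instead, call `parser.set_url("https://your-site.example/robots.txt")` followed by `parser.read()`. Note that `urllib.robotparser` applies rules in file order, which can differ from Google’s longest-match behavior when `Allow` and `Disallow` rules overlap, and that it only predicts what a compliant crawler will do.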

5. Rate-Limit Malicious Bot Activity

Rate-limiting controls the number of requests a single IP address can make within a specific time frame. When you set a limit, your system monitors incoming requests from each IP address or user. If the limit is exceeded, subsequent requests are blocked, delayed, or redirected.

The table below shows examples of rate-limiting rules and their consequences. For example, if a bot or user tries to log in more than five times within one minute, the system temporarily bans their IP address for a set period.

| Action | Rate Limit | Time Frame | Consequence |
| --- | --- | --- | --- |
| Login attempts | 5 | 1 minute | Temporary IP ban |
| Page requests | 60 | 1 hour | IP block for 24 hours |
| API calls | 250 | 1 day | API key suspension |
| Form submissions | 10 | 25 minutes | CAPTCHA test |

The rate-limiting technique helps prevent excessive bot activity, reduces server overload caused by excessive bot requests, and secures your site.
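
Here’s a minimal sketch of the mechanism behind the login rule in the table (5 attempts per minute), using a sliding-window log per IP address. It’s an in-memory, single-process illustration with an injectable clock for testing; production setups usually enforce limits at the WAF, CDN, or a shared store so that every server sees the same counts. What happens when `allow()` returns `False` (a ban, a CAPTCHA, a delay) is up to the caller.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window_seconds` for each key, such as an IP address."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self._hits = defaultdict(deque)  # key -> timestamps of recently allowed requests

    def allow(self, key):
        now = self.clock()
        hits = self._hits[key]
        while hits and now - hits[0] >= self.window:
            hits.popleft()  # forget requests that have left the window
        if len(hits) >= self.limit:
            return False  # over the limit: the caller decides on a ban, CAPTCHA, or delay
        hits.append(now)
        return True


# Demo with a fake clock: the "login attempts" rule from the table (5 per 60 seconds).
fake_now = [0.0]
login_limiter = SlidingWindowLimiter(limit=5, window_seconds=60, clock=lambda: fake_now[0])

results = []
for second in range(7):  # seven attempts, one per second
    fake_now[0] = float(second)
    results.append(login_limiter.allow("203.0.113.7"))
print(results)  # [True, True, True, True, True, False, False]

fake_now[0] = 61.0  # the two oldest attempts (t=0 and t=1) have aged out of the window
after_window = login_limiter.allow("203.0.113.7")
print(after_window)  # True
```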

6. Use Advanced Bot Protection Software

There are numerous solutions designed to help website owners manage bot traffic. They offer robust protection against advanced bots while allowing good bots to flourish.

These tools use AI, behavioral analysis, IP reputation databases, machine learning, and device fingerprinting to detect and block malicious bots. They also offer real-time analytics and alerts to enable quick responses to bot threats.

In addition, they integrate seamlessly with existing cybersecurity infrastructure, including WAFs and content delivery networks (CDNs), to give you a cohesive defense strategy.

7. Incorporate Prerender.io for JavaScript Websites

JavaScript (JS) websites often face SEO and answer engine optimization (AEO) challenges because search engine bots and AI crawlers struggle to process JS content properly.

While search engines like Google have improved their rendering capabilities, processing JS content remains resource-intensive, causing delays or incomplete indexing that can hurt your SEO efforts. On top of that, AI crawlers are rarely able to execute JavaScript, which can result in a significant gap in your AI discoverability.

Download: Get Found Beyond Google: Free AI Visibility Playbook

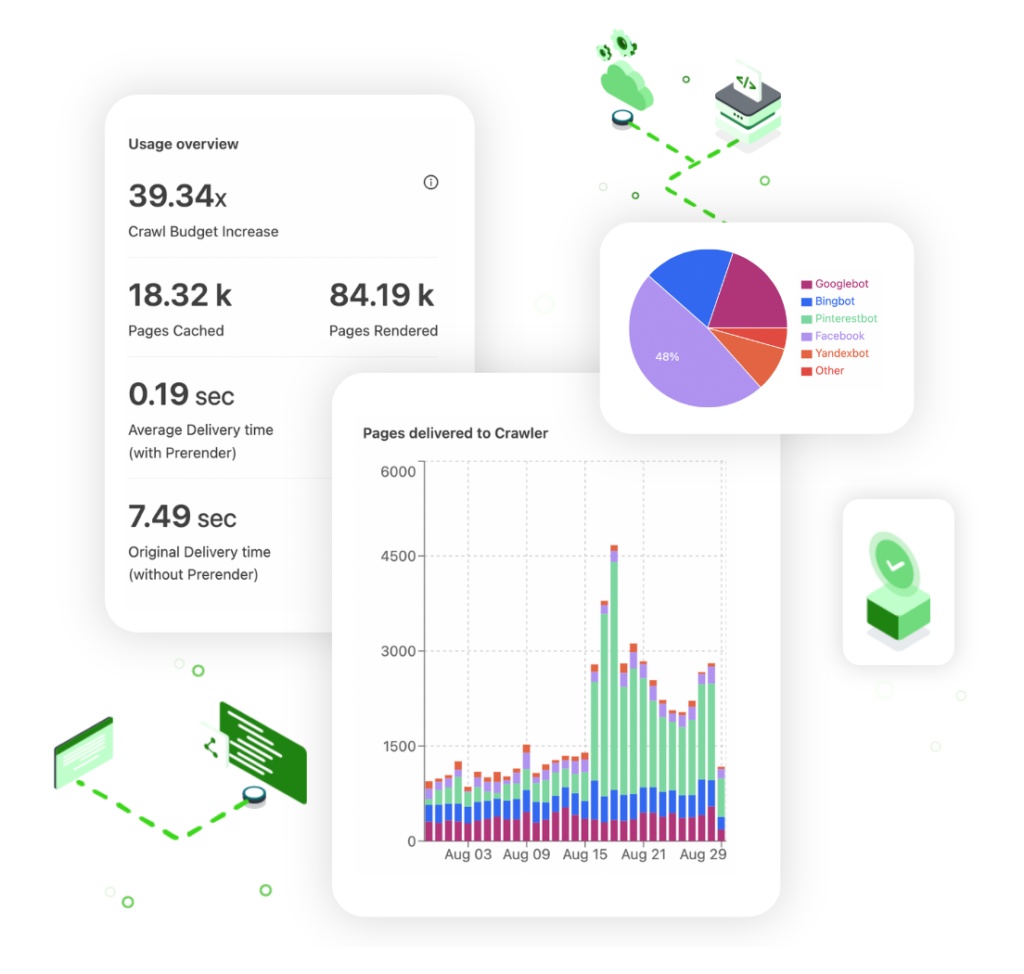

To solve this problem, use a technical SEO tool like Prerender.io. It serves static, fully rendered versions of your web pages to search engine crawlers, ensuring that all content and SEO elements are easily accessible and indexed while maintaining the dynamic experience for human users.

Additionally, Prerender.io analyzes request headers and user agents to differentiate between human visitors and verified bots like Googlebot and Bingbot. For a comprehensive approach, consider combining Prerender.io with advanced bot detection solutions to filter out unnecessary bot traffic and sophisticated bots mimicking human behaviors. This efficiently balances SEO for JavaScript websites with robust security.
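
Conceptually, user-agent-based dynamic rendering boils down to a routing decision like the one sketched below. This is not Prerender.io’s actual middleware: the token list is a short, hypothetical sample, and because user agents can be spoofed, a real setup would pair this check with verification such as reverse DNS lookups.

```python
from typing import Optional

# Hypothetical token list for illustration; a real service maintains its own, larger list.
CRAWLER_TOKENS = ("googlebot", "bingbot", "gptbot", "perplexitybot")


def choose_response(user_agent: Optional[str]) -> str:
    """Decide whether a request gets the prerendered HTML snapshot or the normal JS app."""
    ua = (user_agent or "").lower()
    if any(token in ua for token in CRAWLER_TOKENS):
        return "prerendered"  # static, fully rendered HTML for crawlers
    return "client-side app"  # regular JavaScript experience for human visitors


print(choose_response("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))
```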

If you’re new to Google’s JS rendering challenge, read Why Does JavaScript Complicate My Indexing Performance?

Resource: Check out a breakdown of Prerender.io’s process and benefits.

8. Stay Updated

Bots are persistent and constantly evolving. You may find that what worked a few months ago is no longer effective or that new bots are appearing that need to be blocked or managed.

Either way, it’s important to keep up with the latest trends by subscribing to cybersecurity newsletters, attending webinars, and following industry reports to ensure that your site always remains protected.

Optimize for Both Humans and Bots with Prerender.io

Understanding the distinction between human and bot traffic allows you to optimize your website performance and protect it from threats. By implementing the tools and strategies listed in this guide, you can ensure your analytics remain accurate, your marketing efforts stay effective, and your website remains secure.

If your website relies heavily on JavaScript and suffers from poor crawling and indexing performance, try out Prerender.io. Our dynamic rendering solution boosts your JS pages’ crawling performance by up to 300x and indexing speed by 260%, improving your site’s visibility on SERPs.

Whether it’s distinguishing real visitors from bots or helping good crawlers see your site clearly, Prerender.io makes your traffic and your data trustworthy. Get started with Prerender.io today.

FAQs on Bot vs. Human Traffic

1. What’s the Difference Between Bot and Human Traffic?

Bot traffic comes from automated software programs that visit your site to perform specific tasks, like crawling, scraping, or spamming, while human traffic comes from real users engaging with your content. Distinguishing between the two helps ensure your analytics reflect genuine user behavior.

2. How Can I Tell If My Website Traffic Is From Bots?

Signs of bot traffic include sudden spikes in visits, extremely low time on page, repetitive user actions, or traffic from unusual IP addresses or geolocations. Tools like Google Analytics, Cloudflare, or log analyzers can help identify these patterns and separate bots from humans.

3. Should I Block All Bots From My Website?

No. While harmful bots should be filtered or blocked, beneficial bots, like Googlebot, Bingbot, and AI crawlers that index your site, should be allowed. Blocking them can hurt your SEO visibility and prevent your content from being indexed or cited in AI search results.

4. How Can I Protect My Site From Malicious Bots Without Hurting SEO?

Use a mix of techniques: implement reCAPTCHA on sensitive pages, limit repetitive requests with rate-limiting, monitor IP behavior, and use technical SEO tools like Prerender.io, which serves fully rendered pages to verified crawlers based on their user agent. Pair it with dedicated bot protection to block malicious bots without shutting out the crawlers that drive your visibility.