Single-page applications (SPAs) are popular because they offer an interactive, fast user experience, which can drive higher engagement and conversion rates than their static HTML counterparts. However, as much as users love them, SPAs bring unique SEO challenges that can hurt your content’s rankings on SERPs.

In this article, we’ll explore the top seven SPA JavaScript SEO challenges and their solutions. We’ll provide some practical tips to overcome the challenges, helping you grow your online presence without compromising UX.

1. JavaScript Rendering and Search Engines

Rendering is the biggest and most crucial SPA SEO challenge, so it’s the one to tackle first.

SPAs load all their content into a single HTML page and dynamically update that page (through JavaScript) as users interact with it. Popular SPA frameworks and libraries such as React, Vue, and Angular use client-side rendering (CSR) by default, which creates a faster, more seamless experience for the user.

Instead of the server sending a new HTML file for every request, JavaScript refreshes only the elements or sections the visitor interacts with, leaving the rendering process to the browser.

While modern browsers can handle complex web applications built with JavaScript (JS), search engines are a different story. Many search engines struggle to render dynamic content, and some can’t process JS at all. This can lead to crawling and indexing issues, causing your SPA to underperform in search results even when the content and optimizations are on point. In other words, out of the box, SPAs aren’t SEO-friendly.
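To see the problem concretely, compare the raw HTML a client-side-rendered SPA returns with what users see after JavaScript runs. The sketch below is a hypothetical check, assuming you already have the raw HTML response as a string (for example, fetched without executing JavaScript); `containsVisibleText` and the sample markup are illustrative and not part of any tool.

```javascript
// Hypothetical helper: does the raw (unrendered) HTML already contain
// a phrase users would see on the page? For a client-side-rendered SPA,
// the answer is usually "no", because JavaScript injects the content later.
function containsVisibleText(rawHtml, phrase) {
  // Drop <script> and <style> blocks so text inside code doesn't count.
  const withoutCode = rawHtml
    .replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, "")
    .replace(/<style\b[^>]*>[\s\S]*?<\/style>/gi, "");
  // Strip the remaining tags and collapse whitespace.
  const text = withoutCode.replace(/<[^>]+>/g, " ").replace(/\s+/g, " ");
  return text.toLowerCase().includes(phrase.toLowerCase());
}

// A typical CSR "shell": the only body content is an empty mount point.
const csrShell = `<!DOCTYPE html>
<html>
  <head><title>Example Store</title></head>
  <body>
    <div id="root"></div>
    <script src="/bundle.js"></script>
  </body>
</html>`;

// The same page after JavaScript has run in a browser.
const renderedPage = csrShell.replace(
  '<div id="root"></div>',
  '<div id="root"><h1>Blue Widget</h1><p>In stock</p></div>'
);

console.log(containsVisibleText(csrShell, "Blue Widget"));     // false
console.log(containsVisibleText(renderedPage, "Blue Widget")); // true
```

A crawler that doesn’t execute JavaScript only ever sees something like `csrShell`, which is why the rendering strategy matters so much.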

Related: Learn why rendering ratio matters for healthy SEO performance.

Solution 1: Server-Side Rendering (SSR) and Static Rendering

A potential SPA SEO solution is to render your SPA on the server, known as server-side rendering (SSR), so search engines can access the content and index your pages. But to be honest, implementing SSR requires a significant upfront investment in servers, engineering hours (usually six months to a year), expertise, and ongoing maintenance. And even then, it doesn’t come free of issues.

Since your server has to generate new HTML for every request made by users and bots, SSR can increase your server response time and lower your overall PageSpeed scores. On top of that, your server needs to be able to handle the rendering process at the scale your website needs.

Highly interactive websites suffer the most when every interaction requires a complete page reload from the server, causing additional bottlenecks and further hurting your site’s performance.
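Here is the core idea of SSR as a minimal sketch: the server builds complete HTML for each request, so a crawler receives the content without running any JavaScript. The product data, `renderProductPage`, and `handleRequest` are hypothetical. Production SSR usually runs through a framework such as Next.js or Nuxt, which also hydrates the page in the browser.

```javascript
// Hypothetical in-memory data; a real app would query a database or API.
const PRODUCTS = {
  "blue-widget": { name: "Blue Widget", price: 19.99 },
  "red-widget": { name: "Red Widget", price: 24.5 },
};

// Escape content before interpolating it into HTML.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Runs on the server for every request: the HTML already contains the content.
function renderProductPage(product) {
  return `<!DOCTYPE html>
<html>
  <head><title>${escapeHtml(product.name)} | Example Store</title></head>
  <body>
    <div id="root">
      <h1>${escapeHtml(product.name)}</h1>
      <p>$${product.price.toFixed(2)}</p>
    </div>
    <script src="/bundle.js" defer></script>
  </body>
</html>`;
}

// Hypothetical request handler: maps /products/<slug> to a full HTML response.
function handleRequest(path) {
  const match = /^\/products\/([a-z0-9-]+)$/.exec(path);
  const product = match && PRODUCTS[match[1]];
  if (!product) {
    return { status: 404, html: "<!DOCTYPE html><h1>Not found</h1>" };
  }
  return { status: 200, html: renderProductPage(product) };
}
```

In a real server you’d call a handler like this from your HTTP framework’s routing. The per-request call to `renderProductPage` is exactly the work that adds to server response time as traffic grows.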

Another solution is static rendering, a technique for generating static HTML pages for a website or web application at build time rather than dynamically generating them on the server or client at runtime.

Although it technically solves the “processing power” problem, it raises the question of scalability. First, you need to be able to predict every URL in order to build its static HTML version, which is not always possible for SPAs, as many URLs can be created dynamically. Second, for large and ever-expanding websites, it means a massive amount of build overhead for the project.
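The scalability problem is easy to see in a build step. In this minimal sketch (the route list, `renderPage`, and `buildStaticSite` are hypothetical), every URL must be listed up front because each one becomes a file; a URL created after the build simply has no prebuilt page.

```javascript
// Hypothetical page renderer shared by all routes.
function renderPage(route) {
  return `<!DOCTYPE html><html><head><title>${route.title}</title></head>` +
    `<body><div id="root"><h1>${route.title}</h1></div></body></html>`;
}

// Build step: turn a known list of routes into a map of file path -> HTML.
function buildStaticSite(routes) {
  const files = new Map();
  for (const route of routes) {
    // "/" -> "index.html", "/pricing" -> "pricing/index.html"
    const filePath = route.path === "/"
      ? "index.html"
      : `${route.path.replace(/^\/+|\/+$/g, "")}/index.html`;
    files.set(filePath, renderPage(route));
  }
  return files;
}

const knownRoutes = [
  { path: "/", title: "Home" },
  { path: "/pricing", title: "Pricing" },
];

const site = buildStaticSite(knownRoutes);
console.log([...site.keys()]); // [ 'index.html', 'pricing/index.html' ]

// A URL created at runtime (e.g. a new product) has no prebuilt file
// until the site is rebuilt with an updated route list.
console.log(site.has("products/new-item/index.html")); // false
```

A real build would write these entries to disk and rerun whenever content changes, which is where the overhead grows for large, fast-changing sites.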

Before you give up, let’s talk about a more straightforward, more affordable, and scalable solution for your SPA rendering.

Solution 2: Dynamic Rendering with Prerender

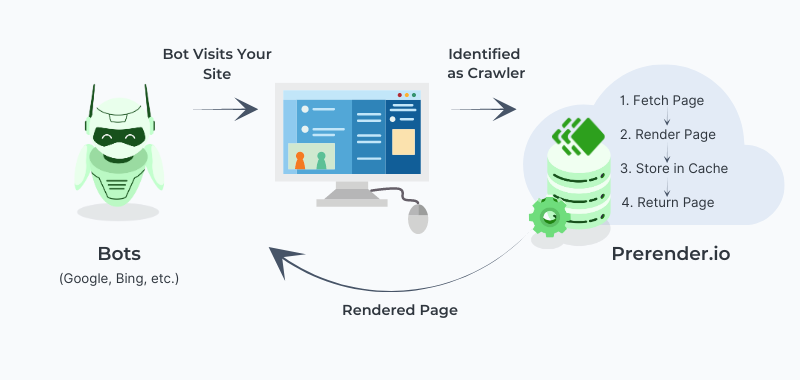

Yes, this is us, but we developed Prerender for a good reason: to solve JavaScript SEO problems once and for all, in the easiest and fastest way. Prerender works by fetching all the necessary files to render your page and generating a cached static HTML version to deliver to search engines upon request.

Unlike SSR, Prerender serves search engines from its cache (which updates as frequently as you need), so instead of increasing your server response time, it delivers your pages in 0.03 seconds on average. Your pages will always be 100% indexable and ready to go anytime.

Prerender does this automatically in two ways:

- You can upload a sitemap with all your URLs, and Prerender will generate and store the cache version of each one

- When a crawler requests a page, Prerender will identify the request, fetch the necessary files and generate the rendered version

Whether you have one URL or millions, and even if hundreds of them are generated dynamically, Prerender solves the static rendering dilemma at its root, which ultimately improves your SPA’s SEO performance.

Best of all, Prerender only requires a quick integration to start working. This reduces development time, costs, and server load, and lets you keep using client-side rendering (or whichever strategy you prefer) to deliver the best possible user experience to your customers without compromising your SPA’s SEO health.
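At its core, dynamic rendering is a routing decision made in front of your app. Requests from known crawlers get a cached, fully rendered snapshot, or trigger a render if none exists yet. Everyone else gets the normal client-side app. The sketch below shows only that decision. It is not Prerender’s actual integration code, and the user-agent list, `snapshotCache`, and `routeRequest` are hypothetical.

```javascript
// Hypothetical, non-exhaustive list of crawler user-agent substrings.
const CRAWLER_PATTERNS = [
  "googlebot", "bingbot", "yandex", "duckduckbot", "baiduspider",
  "facebookexternalhit", "twitterbot", "linkedinbot", "slackbot",
];

function isCrawler(userAgent) {
  const ua = (userAgent || "").toLowerCase();
  return CRAWLER_PATTERNS.some((pattern) => ua.includes(pattern));
}

// Hypothetical cache of prerendered HTML, keyed by URL path.
const snapshotCache = new Map([
  ["/", "<html><body><h1>Home</h1></body></html>"],
]);

// Decide how to answer a request:
// - humans get the regular SPA (client-side rendering)
// - crawlers get a cached snapshot, or the page is rendered and cached on a miss
function routeRequest(path, userAgent) {
  if (!isCrawler(userAgent)) return "serve-spa";
  return snapshotCache.has(path) ? "serve-snapshot" : "render-and-cache";
}
```

Keeping the decision in one small function makes the trade-off explicit: users never pay for server rendering, and crawlers never have to execute your JavaScript.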

Related: Dive deeper into the differences between pre-rendering and other rendering solutions like SSR, CSR, and more.

2. Low PageSpeed Scores (Especially on Mobile)

The next of our common SPA SEO challenges is PageSpeed. PageSpeed has been a confirmed Google ranking factor for several years.

In fact, it’s so important that Google developed more sophisticated ways to measure it through the Core Web Vitals (CWV) update, making it a core part of its page experience signals.

PageSpeed is critical for single-page applications that want to rank on Google, and nothing plays a bigger role in speed than JavaScript. But of course, there’s a catch. SPAs are used because they are fast and responsive, but when it comes down to UX, users and crawlers experience JavaScript differently.

The Problem of Render Blocking

If you analyze your SPA with PageSpeed Insights, you’ll probably encounter the warning “eliminate render-blocking resources,” and more likely than not, it is related to JS files.

When a web browser finds a JavaScript file or snippet without the async or defer attribute while rendering a page, it stops parsing and executes the JavaScript code before it can continue. This is known as “render-blocking,” as it blocks the browser from parsing and displaying the rest of the page until the JavaScript has been dealt with.

But what does this have to do with Google? Googlebot’s crawler doesn’t execute JavaScript itself, so to understand your pages, Google sends the resources it finds to its renderer, which uses a headless Chromium instance to download and execute the JavaScript and get the full representation of your website.

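This also means render-blocking JavaScript is almost guaranteed to hurt your PageSpeed scores, as it directly delays the Largest Contentful Paint (LCP) Core Web Vital. Heavy JavaScript execution also hurts responsiveness, originally measured by First Input Delay (FID) and, since March 2024, by Interaction to Next Paint (INP).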

One way to deal with this is to defer non-critical JavaScript, so the browser can load the page’s actual content first (especially above-the-fold content) and run non-critical JS after the HTML has been parsed.

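Quick example: In this example, we have an empty div with an id of “root” that serves as the mount point for our React application, followed by a script tag that loads the example.js file with the defer attribute:

```html
<div id="root"></div>
<script src="example.js" defer></script>
```

In example.js, we use React to create a component and render it into the div with the id of “root” (this source uses ES module imports and JSX, so it’s assumed to be compiled by your bundler before it’s served as example.js):

```javascript
import React from 'react';
import { createRoot } from 'react-dom/client';

// A minimal component to mount into #root.
function App() {
  return <h1>Hello from React</h1>;
}

createRoot(document.getElementById('root')).render(<App />);
```

By including the defer attribute on the script tag that loads example.js, we let the browser download the file without pausing HTML parsing and execute it only after the document has been parsed, so the script no longer blocks rendering.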

JavaScript Just Takes Time to Load

Beyond render-blocking, JavaScript-rendered pages generally take longer to load than plain HTML pages, and every second counts when it comes to search engine optimization.

An important challenge for SPAs is making JS easier for mobile devices to handle. These devices don’t have the same processing power as their desktop counterparts and often rely on slower, less reliable network connections.

Because of this, and the continued growth of mobile traffic (which, according to Statista, accounts for 59% of online traffic), Google moved to mobile-first indexing. In other words, Google primarily uses the mobile version of your pages for indexing and ranking, so your mobile performance directly affects your desktop rankings too.

To help your website load faster on mobile devices, you can use techniques like:

- Code-splitting to split your code into functional modules that are loaded on demand instead of loading all JS at once.

- Setting browser caching policies so your site loads faster from the second visit onwards.

- Minifying JS files to make them smaller and faster to download.

You can find more details on these techniques in our JavaScript mobile optimization guide.
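As an illustration of the caching point, here’s a minimal sketch of a Cache-Control policy for a bundled SPA. It assumes your bundler adds a content hash to asset filenames (e.g. `main.3f9a1c2b.js`). The `cacheControlFor` function and the exact max-age values are illustrative choices, not a standard.

```javascript
// Hypothetical pattern for content-hashed build assets (8+ hex chars before the extension).
const HASHED_ASSET = /\.[0-9a-f]{8,}\.(js|css|woff2|png|jpg|svg)$/i;

function cacheControlFor(path) {
  if (HASHED_ASSET.test(path)) {
    // Safe to cache for a year: a new deployment produces a new filename.
    return "public, max-age=31536000, immutable";
  }
  if (path.endsWith(".html") || !/\.[a-z0-9]+$/i.test(path)) {
    // HTML documents and extensionless SPA routes (served as index.html):
    // always revalidate so visitors pick up the latest asset URLs.
    return "no-cache";
  }
  // Other static files without a hash: short cache, then revalidate.
  return "public, max-age=3600";
}

console.log(cacheControlFor("/assets/main.3f9a1c2b.js")); // public, max-age=31536000, immutable
console.log(cacheControlFor("/pricing"));                 // no-cache
console.log(cacheControlFor("/favicon.ico"));             // public, max-age=3600
```

Splitting the policy this way lets repeat visitors reuse large JS bundles from cache while still getting fresh HTML on every visit.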

By implementing these strategies, you’ll give customers a snappier, more consistent user experience and bring your SPA closer to a 60/100 PageSpeed score.

To push your SPA to near-perfect PageSpeed scores – including all core web vitals and server response time – you can use Prerender to deliver a fully rendered and interactive version of your pages to search engines.

Most of our customers experience a 100x PageSpeed improvement after implementation, and you’ll be able to monitor changes and technical issues directly from your Prerender dashboard.

3. Limited Crawl Budget

Crawl budget management is usually a topic reserved for large websites (10k+ URLs), as Google has more than enough resources to crawl small sites, provided they are well structured.

However, using a headless browser to render your SPA is a resource-intensive process that depletes your crawl budget faster than usual.

Related: Need a refresher about crawl budget? Download our Crawl Budget ebook—it’s FREE!

Search engines have to allocate more processing power and time to crawl and render your content, and when they can’t spare those resources, they limit how much of your website they crawl and render.

As you can imagine, a limited crawl budget means a lot of your content isn’t properly rendered or indexed, putting you at a clear disadvantage against your competitors’ websites.

It doesn’t matter if your content is five times better than your competitors’ and provides a better user experience. If search engines can’t pick it up, you’ll always underperform in search results.

Of course, using Prerender removes the rendering cost from your crawl budget by letting search engines skip the rendering stage, so they can allocate their resources to crawling and indexing your actual content.

That said, you need to implement a few other optimizations to guarantee an efficient crawling experience. The following four challenges and tips we’ll be covering in the rest of this article will help you create a more crawlable SPA and avoid all pitfalls that can cost you your application’s success on search engines.

4. Hash URLs Break the Indexing Process

Although hash URLs are not directly related to JavaScript SEO, they still heavily affect your site’s indexability, so we think it’s worth mentioning here.

Single-page applications get their name because they consist of a single HTML page. Unlike multi-page websites, SPAs have only one index.html file, and JavaScript injects content into it.

When a user navigates within a SPA that uses hash-based routing, the browser doesn’t load a new page. Instead, only the hash fragment at the end of the URL is updated to reflect the new application state, for example:

| https://example.com/#/page1 |

This has many benefits for developers but is terrible for SEO.

Traditionally, fragments at the end of a URL are meant to link to a section within the same document – which, to be fair, is technically what the SPA is doing. Search engines recognize this and ignore everything after the hash (#) to avoid indexing the same page multiple times.

In that sense, all of these pages would be considered to be the homepage:

| https://example.com/#/about |
| https://example.com/#/contact-us |
| https://example.com/#/blog |
| https://example.com/ |

The better option to ensure Google can index your content is using the History API to mimic the traditional URL syntax without the hash fragment.
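With the History API, each view gets a real path (`/about` instead of `/#/about`), and the app updates it with `history.pushState()` instead of changing the fragment. Below is a minimal, framework-free sketch. The `routes` table and `render` callback are hypothetical, and `win` stands for the browser’s `window` (passed in so the logic can run outside a browser). The server must also answer direct requests for paths like `/about`, typically by returning the app’s index.html or prerendered HTML.

```javascript
// Convert a legacy hash route (https://example.com/#/about) to a real path (/about).
function hashToPath(hash) {
  const route = hash.replace(/^#!?/, ""); // handles "#/about" and "#!/about"
  return route.startsWith("/") ? route : "/" + route;
}

function createRouter(win, routes, render) {
  function show(path) {
    const view = routes[path] || routes["*"]; // "*" is a hypothetical not-found view
    render(view, path);
  }

  // Navigate without a page reload; the address bar shows a normal URL.
  function navigate(path) {
    win.history.pushState({}, "", path);
    show(path);
  }

  // Back/forward buttons: re-render whatever path the browser restored.
  win.addEventListener("popstate", () => show(win.location.pathname));

  // Rewrite old hash links once, replacing the current history entry.
  if (win.location.hash.startsWith("#/") || win.location.hash.startsWith("#!/")) {
    win.history.replaceState({}, "", hashToPath(win.location.hash));
  }
  show(win.location.pathname);

  return { navigate };
}
```

In a browser you’d call `createRouter(window, routes, render)` once at startup. Every view then has a distinct, crawlable URL instead of collapsing into the homepage.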

5. JavaScript Internal Links are Invisible to Search Engines

Imagine visiting a website where the menu appears to have links (because you can see the words), but none of them are clickable. That’s similar to what search engines experience when they reach your SPA and all your links are event-triggered, like this:

| <a onclick="goto('https://domain.com/product-category?page=2')"></a> |

Search bots can’t really interact with your website like regular users would. Instead, they rely on a consistent link structure to find and follow all your links (crawling). These crawlers look for all <a> tags on the page and expect to find a URL they can resolve in the “href” attribute.

However, if your links require an interaction to execute a JavaScript directive, search bots can’t follow them, leaving every page behind them undiscovered.

All your links should be structured like this:

| <a href="https://domain.com/product-category?page=2"></a> |

Following this convention will allow crawlers to follow your links and discover all pages within your SPA.
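You can keep client-side navigation and still give crawlers real links by rendering ordinary `<a href>` elements and intercepting clicks in JavaScript. Router link components (such as React Router’s `<Link>`) do this for you. The sketch below is a hypothetical, framework-free version. `shouldHandleInApp` and `interceptLinks` are illustrative names, and `navigate` is assumed to be a History API-based function supplied by your router.

```javascript
// Decide whether a click on <a href="..."> should be handled by the SPA router
// or left to the browser (new tab, download, external site, etc.).
function shouldHandleInApp(click, link, currentOrigin) {
  if (click.defaultPrevented || click.button !== 0) return false; // not a plain left click
  if (click.metaKey || click.ctrlKey || click.shiftKey || click.altKey) return false; // new tab/window
  if (link.target && link.target !== "_self") return false;
  if (link.hasAttribute("download")) return false;
  const url = new URL(link.getAttribute("href"), currentOrigin);
  return url.origin === currentOrigin; // only same-site links stay in the SPA
}

// Browser wiring: one delegated listener handles every link on the page.
function interceptLinks(doc, navigate) {
  doc.addEventListener("click", (event) => {
    const link = event.target.closest("a[href]");
    if (!link || !shouldHandleInApp(event, link, doc.location.origin)) return;
    event.preventDefault(); // skip the full page load...
    const url = new URL(link.href);
    navigate(url.pathname + url.search + url.hash); // ...and route inside the app
  });
}
```

Crawlers read the `href` values directly from the HTML, while users still get instant, in-app navigation.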

6. Handling Metadata for Each New URL

Metadata is highly relevant to search engines because they use this information to understand your pages better, display your links within search results, and learn how you want them to treat your pages (for example, through robots meta tags).

But we also add tags to our pages’ metadata for things like tracking and generating social media previews, so their importance goes beyond just SEO.

That said, if all pages within a SPA use the same HTML file and the page doesn’t reload when the visitor navigates the site, how will the metadata change?

Out of the box, SPAs show the same metadata on every subsequent page, which is not ideal. Luckily, each framework’s community has come up with solutions that make this easier to handle.

For example, you can use react-helmet-async for React or vue-meta for Vue. These libraries will help you set different metadata for your pages without having to change the raw HTML.

You can learn how to set up these solutions in freeCodeCamp’s tutorial.
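Under the hood, these libraries update `document.title` and the relevant meta tags after each route change. Here’s a minimal vanilla JavaScript sketch of that idea. The `ROUTE_META` table is a hypothetical example, and `doc` is the browser’s `document` (passed in so the logic is easy to test). Libraries like react-helmet-async do the same thing declaratively from your components.

```javascript
// Hypothetical per-route metadata; replace with your own routes and copy.
const ROUTE_META = {
  "/": { title: "Example Store", description: "Affordable widgets, shipped worldwide." },
  "/about": { title: "About Us | Example Store", description: "Meet the team behind Example Store." },
};

function metaForPath(path) {
  return ROUTE_META[path] || ROUTE_META["/"]; // fall back to the homepage metadata
}

// Create the <meta name="..."> tag if it doesn't exist yet, then set its content.
function setMetaTag(doc, name, content) {
  let tag = doc.querySelector(`meta[name="${name}"]`);
  if (!tag) {
    tag = doc.createElement("meta");
    tag.setAttribute("name", name);
    doc.head.appendChild(tag);
  }
  tag.setAttribute("content", content);
}

// Call this after every client-side navigation.
function applyRouteMeta(doc, path) {
  const meta = metaForPath(path);
  doc.title = meta.title;
  setMetaTag(doc, "description", meta.description);
  return meta;
}
```

In a browser, you’d call `applyRouteMeta(document, location.pathname)` from your router’s navigation hook.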

7. Social Crawlers Can’t Find Your Open Graph Tags

Since we’re already talking about metadata, there’s a fundamental issue with SPAs that inject metadata through JavaScript: social crawlers can’t render your pages.

Unlike Google, social crawlers don’t have a rendering engine to render your pages. This means that even if you use the solutions above to set different metadata for each page, social media platforms like Facebook and Twitter won’t be able to see it, and thus won’t be able to generate a rich preview from your Open Graph and Twitter Card tags.

Without rich previews on social media and messaging apps, it’s very unlikely your links will be shared or engaged with, hurting your social media visibility – which in today’s era is vital for your SPA’s success.

The simplest solution for this problem is prerendering your single-page application. Because it generates a static version of your page, crawlers will be able to find each and every tag without delays or problems.
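Whichever way you produce static HTML (prerendering, SSR, or a build step), these tags must be in the initial HTML response. As a sketch, assuming a hypothetical `page` object holding your per-page data, this function generates Open Graph and Twitter Card tags as a string to inject into the `<head>`. The property names (`og:title`, `og:description`, `og:url`, `og:image`, `twitter:card`) are the standard ones.

```javascript
// Escape values so quotes or angle brackets can't break the attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Open Graph uses property="og:*"; Twitter Cards use name="twitter:*".
function socialMetaTags(page) {
  const tags = [
    ["property", "og:type", "website"],
    ["property", "og:title", page.title],
    ["property", "og:description", page.description],
    ["property", "og:url", page.url],
    ["property", "og:image", page.imageUrl],
    ["name", "twitter:card", "summary_large_image"], // selects the card layout
  ];
  return tags
    .map(([attr, key, value]) => `<meta ${attr}="${key}" content="${escapeAttr(value)}">`)
    .join("\n");
}
```

Because the output is a plain string, it works the same whether it’s injected by a server template, a build script, or a prerendering step.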

Remember that you can use Prerender for free on the first 1,000 URLs, so unless your site outgrows this offer, you don’t need to commit to a paid plan.

Solving SPA SEO Challenges Boosts Your Content Visibility

We hope you found these SPA JavaScript SEO challenges and solutions useful. Solving these seven challenges will make your SPA crawlable and indexable and give you a fighting chance to outperform your competitors in the SERPs.

Still, it’s important not to neglect traditional SEO best practices. After all, once your pages can be indexed, the next battle is making them the best choice to show in search results.

To help you in your optimization journey, check out some of our guides on technical and on-page SEO to take your SPA to the next level:

- Why JavaScript Complicates Indexation

- Website Optimization For Humans vs. Bots—A Guide

- 10-Step Checklist to Help Google Index Your Site

- The Most Important Google Ranking Factors for 2023

- SEO Guide for Web Developers – Don’t Make These Mistakes

TL;DR – SEO Optimization Checklist for SPAs

- High-quality, relevant content: Target relevant keywords and provide valuable information for your users. This remains the foundation of good SEO for any website.

- Clear URL structure: Use descriptive, human-readable URLs that reflect your content hierarchy.

- Optimized title tags and meta descriptions: Write compelling titles and descriptions that accurately represent each page and encourage clicks in search results.

- Proper heading hierarchy: Use heading tags (H1-H6) to structure your content and improve readability.

- Pre-rendering or server-side rendering (SSR): Ensure search engine crawlers can access and understand your content, even if it relies heavily on JavaScript.

- Optimized mobile experience: Make sure your SPA offers a fast and user-friendly experience on all devices, especially mobile.

- Fast loading speed: Prioritize website speed by optimizing images and code and by using caching mechanisms.

- Structured data markup: Implement schema markup to provide search engines with richer information about your content and improve search result snippets.

- Robots.txt and Sitemap: Ensure proper robots.txt configuration to guide crawlers and submit a sitemap to help search engines discover your pages.

- Internal linking: Create a strong internal linking structure to help search engines understand the relationships between your pages.

- Mobile-first indexing: Be aware of Google’s mobile-first indexing approach and optimize your SPA accordingly.

- Regular monitoring and testing: Use SEO tools like Google Search Console to monitor your website’s performance and identify any crawl or indexing issues.

By following this checklist and continuously monitoring your SEO performance, you can ensure your single-page application (SPA) has the best chance of ranking well in search results.
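For the sitemap item, here’s a minimal sketch that builds a sitemap.xml string using the standard sitemaps.org namespace. The `entries` shape and `buildSitemap` are hypothetical. List real, History API-style URLs rather than `/#/` routes, since search engines ignore everything after the hash.

```javascript
// Escape characters that are not allowed raw in XML text (e.g. "&" in query strings).
function escapeXml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// entries: [{ loc: absolute URL, lastModified?: "YYYY-MM-DD" }]
function buildSitemap(entries) {
  const urls = entries.map((entry) => {
    const lastmod = entry.lastModified
      ? `\n    <lastmod>${escapeXml(entry.lastModified)}</lastmod>`
      : "";
    return `  <url>\n    <loc>${escapeXml(entry.loc)}</loc>${lastmod}\n  </url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}
```

You can then reference the generated file from robots.txt with a line such as `Sitemap: https://example.com/sitemap.xml` and submit it in Google Search Console.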

FAQs on SPA SEO Challenges and JavaScript Rendering Tools

Does JavaScript Hinder a Website’s Search Ranking?

While Google can process JavaScript pages, doing so takes more time and resources than processing static HTML, because Google needs to render the JavaScript code to see the actual content. Delays can occur if a page gets stuck in the rendering queue, exceeds Google’s crawl budget for your site, or fails to render properly. These issues can negatively impact how Google indexes your content.

Do SPAs Respond Positively to SEO?

Many SPAs rank well, but they require extra effort to be SEO-friendly. This involves choosing the right rendering approach, using tools for proper metadata and URL handling, and optimizing performance with techniques like caching and lazy loading.

Does Prerendering Improve SPA SEO?

Yes, Prerender helps improve the SEO of your SPAs. It pre-renders static HTML versions of specific routes within your app, creating a static version of your site that search engine crawlers can easily access and understand, which ensures your marketing content gets indexed effectively.

Take advantage of the Prerender free trial to test its effectiveness for your specific needs. Prerender’s pricing plans cater to various website sizes.

How Can I Tell If My Technical Performance is Impacting My Site’s SEO?

You can use Prerender.io’s free site audit tool to get clear insights on your site’s performance. Simply enter your domain and email and get your personalized report in minutes.