Google’s 15MB crawl limit is a crucial factor to consider when optimizing your website.

But what exactly does this limit mean, and how does it affect your website’s performance in search results?

In this blog post, we’ll delve into these questions and more, providing you with a comprehensive understanding of Google’s 15MB crawl limit and how to effectively manage it.

What is Google’s 15MB Crawl Limit?

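This limit refers to the maximum amount of a single file that Googlebot will download and process for indexing. If a file exceeds this limit, Googlebot stops fetching and sends only the first 15MB for indexing consideration. According to Google’s documentation, the limit is applied to the uncompressed data.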

The limit is applied on a per-resource basis, meaning that each HTML file, JavaScript file, and CSS file on your site has its own 15MB limit. Even so, you still need to make sure your money-maker or “golden egg” content appears early enough in each file to fall within the first 15MB. JavaScript-heavy websites are more exposed, because large bundles and inlined data can push a file toward the limit much faster than plain HTML.

The primary reason for Google implementing this rule is to manage the resources used during the crawling and indexing process. While it helps Googlebot crawl and index the vast number of web pages on the internet, it’s not always a win for your site.

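Note that the limit is measured against the content retrieved for each individual request made by Googlebot. Resources referenced inside the page, such as external CSS and JavaScript files, are fetched separately and do not count toward the HTML file’s 15MB.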
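To make the cutoff concrete, here is a minimal Python sketch of what “only the first 15MB is processed” means for a single file. It assumes 15MB means 15 × 1024 × 1024 bytes (Google does not publish an exact byte count), and `split_at_limit` is a hypothetical helper name, not part of any Google tool.

```python
# Assumption: "15MB" is treated as 15 * 1024 * 1024 bytes.
ASSUMED_LIMIT_BYTES = 15 * 1024 * 1024


def split_at_limit(body: bytes, limit: int = ASSUMED_LIMIT_BYTES) -> tuple[bytes, bytes]:
    """Split an uncompressed response body into (processed, dropped) parts."""
    return body[:limit], body[limit:]


# Synthetic example: 16 MiB of filler with the important content at the very end.
page = b"x" * (16 * 1024 * 1024 - 7) + b"PRICING"
processed, dropped = split_at_limit(page)
important_content_survives = b"PRICING" in processed  # False: it sits past the cutoff
```

The takeaway is about ordering: content placed late in an oversized file is the content at risk.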

Is the 15MB Limit the Same as Crawl Budget?

The limit is separate from but related to Google’s crawl budget. Your crawl budget refers to the number of pages Googlebot can crawl on your site within a certain timeframe. If a page is close to or exceeds the 15MB limit, Googlebot may use up more of your allocated crawl budget to download and process that page. This leaves fewer resources for crawling other pages on your site.

Related: How to Guide Googlebot in Crawling Important URLs

Crawl budget matters for indexing, but the two limits behave differently. Once a file passes the 15MB mark, Googlebot drops everything after that point. Remember that this 15MB limit applies only to fetches made by Googlebot, so regular visitors still receive the full page. Optimizing your crawl budget remains a major factor in how much online visibility your website can achieve.

More Reading: Crawl Budget Optimization Guide

Everything embedded directly in the HTML file counts toward that file’s 15MB: text content, HTML markup, inline CSS, and inline JavaScript. External resources like images and videos are not counted towards the limit unless their data is embedded directly in the HTML, for example as base64 data URIs.

While images and videos are not counted towards the 15MB limit, large images and videos can still impact a page’s loading time, which can affect Googlebot’s ability to efficiently crawl the page.
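One way to see how much embedded data a page carries is to tally the bytes of inline scripts, inline styles, and `data:` URIs. The sketch below uses Python’s standard `html.parser`; the class and function names are hypothetical, and it is a rough audit aid rather than a reproduction of how Googlebot measures a page.

```python
from html.parser import HTMLParser


class InlineWeightParser(HTMLParser):
    """Tally bytes of inline <script>/<style> bodies and data: URIs in attributes."""

    def __init__(self) -> None:
        super().__init__()
        self.totals = {"script": 0, "style": 0, "data_uri": 0}
        self._current = None  # "script" or "style" while inside one of those tags

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._current = tag
        for _name, value in attrs:
            # Attribute values starting with "data:" carry embedded file contents.
            if value and value.startswith("data:"):
                self.totals["data_uri"] += len(value.encode("utf-8"))

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current:
            self.totals[self._current] += len(data.encode("utf-8"))


def inline_weight(html: str) -> dict:
    """Return byte totals for inline script, inline style, and data: URI content."""
    parser = InlineWeightParser()
    parser.feed(html)
    parser.close()
    return parser.totals
```

If the `data_uri` or `script` totals dominate the page size, moving that data to external files is usually the first thing to try.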

Related: PageSpeed Explained

Is it possible to hit a 15MB file size for HTML pages? For most websites, it is neither likely nor necessary for an HTML page to approach the 15MB limit, and most web pages are far smaller. However, JavaScript-driven websites, or pages that inline large scripts and data, can exceed it.

Strategies and Techniques to Avoid the 15MB Limit

There are several strategies you can employ to avoid this limit. Some of them include optimizing your HTML, CSS, and JavaScript files to reduce their size, using external resources instead of embedding large amounts of data within your HTML, and implementing techniques like lazy loading for images and videos. Let’s go into detail.

1. Server-Side Rendering (SSR)

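Server-side rendering (SSR) executes your JavaScript on the server and serves crawlers a fully rendered HTML version. Server-side optimizations can also include code minification and compression. Minification reduces the size of the HTML, CSS, and JavaScript files themselves, while compression mainly reduces transfer size, since Google’s documentation states that the limit is applied to uncompressed data.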

However, it’s important to note that server-side rendering is not the optimal choice for every website.

SSR requires a significant amount of server resources. This can lead to increased server load and potentially slower response times, especially for websites with heavy traffic or complex JavaScript applications. Additionally, implementing SSR can be a costly, complex process that requires significant changes to your website’s architecture and codebase.
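The difference between minification and compression mentioned above can be sketched in a few lines of Python. The minifier here is deliberately naive (it only strips HTML comments and whitespace between tags, and is not safe for `<pre>` blocks or inline scripts); a real build would use a dedicated tool. The function names are hypothetical.

```python
import gzip
import re


def naive_minify_html(html: str) -> str:
    """Strip HTML comments and collapse whitespace between tags (illustrative only)."""
    without_comments = re.sub(r"<!--.*?-->", "", html, flags=re.DOTALL)
    return re.sub(r">\s+<", "><", without_comments).strip()


def sizes(html: str) -> dict:
    """Compare raw, minified, and gzip-compressed byte counts for one document."""
    raw = html.encode("utf-8")
    minified = naive_minify_html(html).encode("utf-8")
    return {
        "raw": len(raw),                                # what the file weighs as authored
        "minified": len(minified),                      # uncompressed size after minifying
        "gzip_transfer": len(gzip.compress(minified)),  # bytes sent over the wire
    }
```

Under the assumption that the limit is measured on uncompressed data, the `minified` figure is the one to compare against 15MB; `gzip_transfer` matters for load time.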

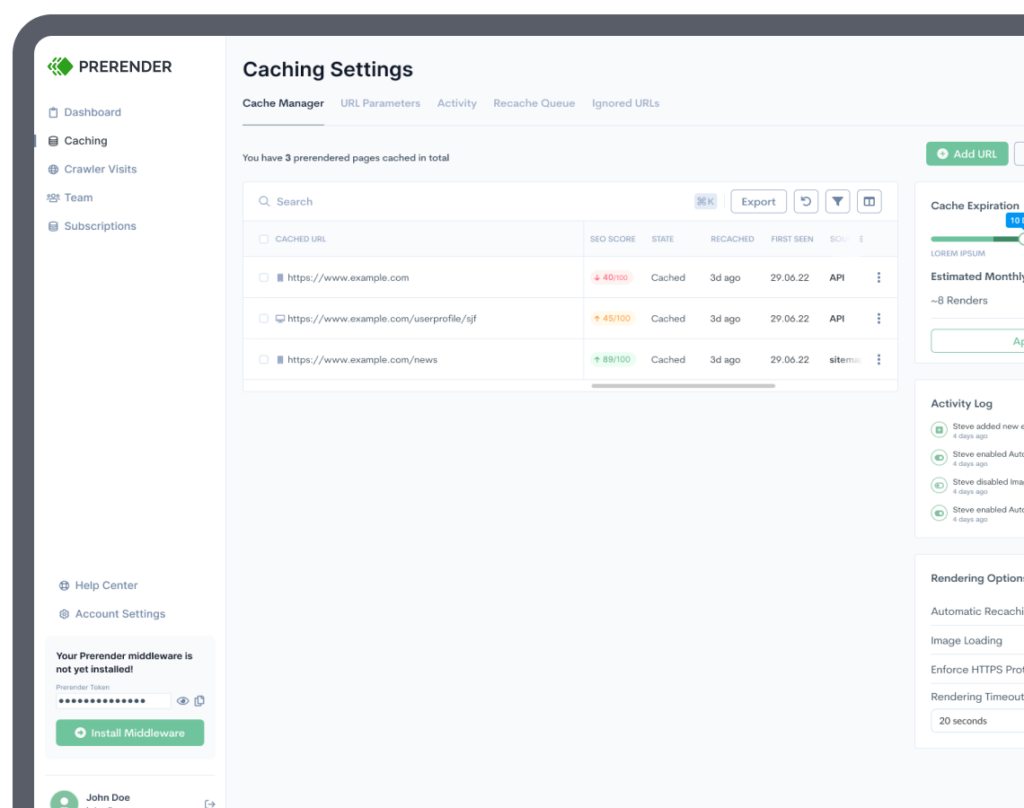

Dynamic rendering offers similar benefits at a fraction of the cost. A tool like Prerender, for example, helps Google to easily crawl and index a website by generating a static HTML version of each page.
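At its core, dynamic rendering is a routing decision based on who is asking. The sketch below shows that decision in Python; the token list and the variant names are assumptions for illustration, and this is not Prerender’s actual API or middleware.

```python
# Hypothetical, non-exhaustive list of crawler user-agent substrings.
CRAWLER_TOKENS = ("googlebot", "bingbot", "duckduckbot")


def is_crawler(user_agent: str) -> bool:
    """Return True when the User-Agent header looks like a known crawler."""
    ua = user_agent.lower()
    return any(token in ua for token in CRAWLER_TOKENS)


def choose_variant(user_agent: str) -> str:
    """Pick which version of the page to serve for this request."""
    return "prerendered_html" if is_crawler(user_agent) else "client_side_app"
```

User-agent strings can be spoofed, so production setups typically pair a check like this with additional verification of the requester.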

Related: When You Should Consider Dynamic Rendering

2. Determining and Tracking Your Website’s Size

You can determine the size of your website using various tools and techniques.

One common method is to use an auditing tool to crawl your site and provide information about the size of each page. You can also manually check the size of your HTML, CSS, and JavaScript files.

Google Search Console provides detailed information about how Googlebot interacts with your site, including a Crawl Stats report. Other tools like Screaming Frog can mimic the behavior of web crawlers, allowing you to diagnose potential issues.
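For a quick manual check, a short script can fetch a page and report how close it is to the limit. This is a sketch using only Python’s standard library; the 15 MiB constant, the function names, and the user-agent string are assumptions, and the result reflects what your script received, not what Googlebot saw.

```python
import urllib.request

ASSUMED_LIMIT_BYTES = 15 * 1024 * 1024  # assumption: 15MB read as 15 MiB


def size_report(body: bytes, limit: int = ASSUMED_LIMIT_BYTES) -> dict:
    """Summarize how a response body compares with the assumed limit."""
    size = len(body)
    return {
        "bytes": size,
        "percent_of_limit": round(100 * size / limit, 2),
        "over_limit": size > limit,
    }


def fetch_body(url: str, timeout: float = 10.0) -> bytes:
    """Download one URL. urllib does not request gzip by default, so the
    length normally reflects the uncompressed size."""
    request = urllib.request.Request(url, headers={"User-Agent": "size-audit-sketch/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

Calling `size_report(fetch_body(url))` for each HTML, CSS, and JavaScript URL gives a per-resource view, which matches how the limit is applied.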

3. Make Use of Embedded or Linked SVGs

Linking SVGs through image tags, rather than embedding the SVG markup inline, can help manage the page’s size, as the data for the image is not embedded in the HTML. However, this can increase the number of HTTP requests the page makes, which can impact load time. The best approach depends on the needs and constraints of your website.
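As a rough illustration of the trade-off, the Python sketch below swaps inline `<svg>` blocks for `<img>` tags and returns the extracted markup so it could be saved as separate files. The `/img/icon-` path prefix and the function name are hypothetical, and the regex does not handle nested `<svg>` elements.

```python
import re

# Matches one inline <svg>...</svg> block (non-greedy, not nesting-aware).
INLINE_SVG = re.compile(r"<svg\b.*?</svg>", re.DOTALL | re.IGNORECASE)


def externalize_svgs(html: str, url_prefix: str = "/img/icon-"):
    """Replace inline SVGs with <img> tags; return (new_html, {path: svg_markup})."""
    extracted = {}

    def swap(match):
        path = f"{url_prefix}{len(extracted) + 1}.svg"
        extracted[path] = match.group(0)
        return f'<img src="{path}" alt="">'

    return INLINE_SVG.sub(swap, html), extracted
```

Keep in mind that an SVG loaded through an `<img>` tag can no longer be styled by the page’s CSS, so this suits decorative icons better than interactive graphics.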

Final Thoughts

Staying under the 15MB limit is only part of the picture. Optimizing your crawl budget as well makes it more likely that your most important pages get crawled and indexed by Google.

Struggling to get indexed? Get started with 1,000 URLs free.

FAQs on Crawl Limit

1.) What is crawl limit?

Crawl limit, in the sense of crawl budget, refers to the number of pages on your website that a search engine bot (like Googlebot) will visit and process within a specific timeframe. It’s like a quota for Googlebot visits, and it is separate from the 15MB file size limit described above.

2.) How does crawl limit affect my website’s SEO?

Crawl limit can indirectly affect your website’s SEO in a few ways:

- Limited Crawling, Limited Indexing: If Googlebot can’t crawl all your website’s pages due to crawl limit restrictions, important content might not get indexed. This means those pages wouldn’t appear in search results, potentially hurting your website’s visibility for relevant keywords.

- Prioritization: Google prioritizes crawling well-structured, high-quality content. If your website has crawl errors, slow loading speeds, or irrelevant content, Googlebot might spend its crawl budget on other websites, leaving your valuable content unindexed.

3.) Is there a way to check my website’s crawl limit?

No, there isn’t a way to directly check your website’s specific crawl limit set by Google. Crawl limit is an internal metric that Google uses to manage its crawling resources efficiently.

However, you can use tools and strategies, such as the Crawl Stats report in Google Search Console or server log analysis, to understand how Google interacts with your website and identify potential crawl limit issues.

4.) Can I increase my website’s crawl limit?

While you can’t directly increase your website’s crawl limit set by Google, there are ways to indirectly influence how Googlebot allocates its crawl budget for your site. The main lever is website optimization:

- Prioritize Important Pages: Ensure the most SEO-critical pages (product pages, service pages, blog posts) are well-structured, load quickly, and are free of crawl errors. Googlebot is more likely to spend its crawl budget on these valuable pages.

- Optimize for Speed: Fast loading times encourage Googlebot to crawl more pages within its allotted time. Consider image optimization, caching mechanisms, and code minification to improve website speed.

- High-Quality Content: Create valuable, informative content that keeps users engaged. Google prioritizes crawling websites with fresh, relevant content that users find useful. Regularly update your website with new content and maintain existing content for accuracy.

- Fix Crawl Errors: Address any crawl errors identified by Google Search Console. Crawl errors can waste crawl budget on inaccessible pages. Fixing these errors ensures Googlebot spends its resources efficiently on valuable content.
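Server logs are another way to spot where crawl budget goes. The sketch below counts Googlebot requests by status class from access log lines in the common “combined” format. The regex and function name are assumptions for illustration, and matching on the user-agent string alone does not prove a request really came from Google.

```python
import re
from collections import Counter

# Matches the request line and status code of a combined-format log entry.
LOG_LINE = re.compile(r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def googlebot_status_counts(lines):
    """Count Googlebot requests per status class ("2xx", "4xx", ...)."""
    counts = Counter()
    for line in lines:
        if "Googlebot" not in line:
            continue  # skip regular visitors and other bots
        match = LOG_LINE.search(line)
        if match:
            counts[match.group("status")[0] + "xx"] += 1
    return counts
```

A high share of 4xx or 5xx responses for Googlebot suggests crawl budget is being spent on pages that cannot be indexed.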

Explore more about crawl budget optimization:

- 4 Ways to Optimize Your Crawl Budget with Internal Links

- How to Avoid Missing Content in Web Crawls

- 5 Ways to Use Log File Analysis to Optimize Your Crawl Budget