Managing a large website hosting thousands of URLs isn’t as simple as optimizing a small one. Large enterprise sites often struggle with one crucial aspect: page indexing issues. Left untreated, Google indexing issues can lead to poor visibility on SERPs and hurt your traffic.

In this SEO guide, we’ll look at some Google indexing issues commonly found on large sites. We’ll discuss why these crawl and indexing errors occur, their impact on a site’s ranking and traffic, and, most importantly, how to fix the page indexing issues so that your site can reach its full SEO potential.

Why Large Websites are Prone to Google Indexing Issues

Every SEO manager knows that the bigger the website, the more complex its SEO problems. The challenge grows if your large website has dynamic, fast-changing content (as ecommerce and sports sites do) and relies on JavaScript to generate most of that content.

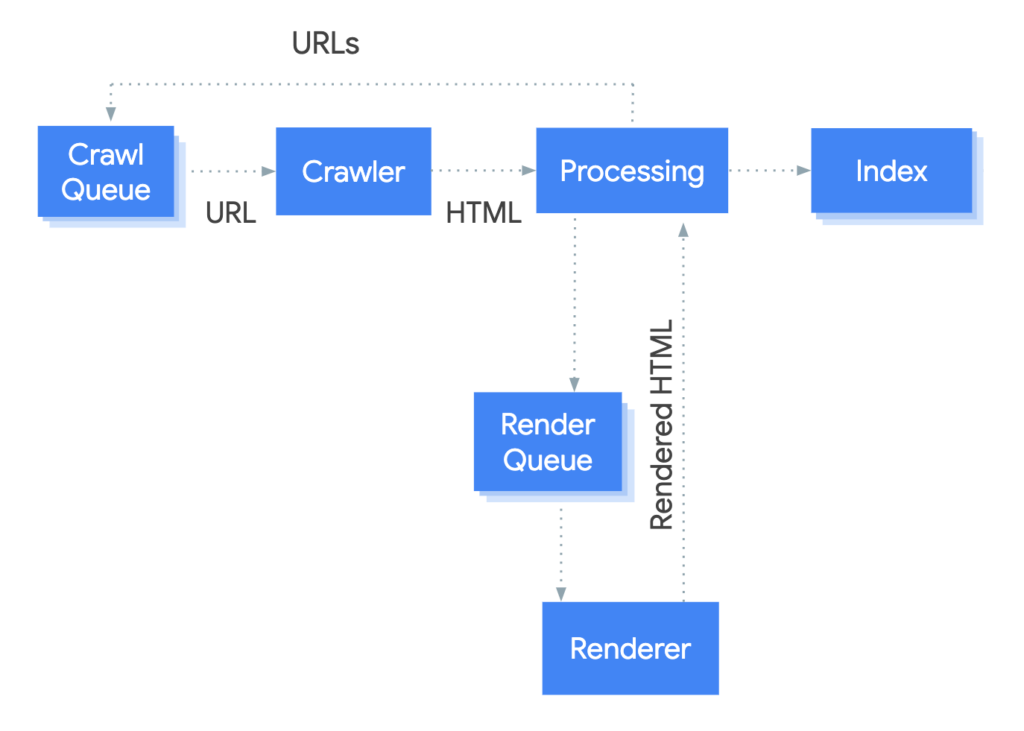

Indexing JavaScript pages is still harder for Google than indexing plain HTML. Rendering them takes extra resources (including crawl budget spent fetching scripts) and extra time before Google can fully process and index the content and users can see it on SERPs. Because the JS indexing process is complex, many pages end up only partially indexed, missing some of the SEO elements that are meant to boost the page’s ranking.

As a result, your beautifully crafted content may struggle to rank high on Google SERPs. If the page isn’t indexed at all, it won’t ever show up on the search engine results page.

This blog post provides a detailed explanation of Google’s crawling and indexing process. We encourage you to read it to deepen your understanding of how Google indexes websites and learn crucial steps to safeguard your site’s visibility.

Google’s Crawling and Indexing Process by Google Search Central

7 Google Indexing Issues and How to Fix Them

Here are some common causes of Google indexing issues on enterprise-size websites, and how to fix them.

1. XML Sitemap Issues

XML sitemaps are essential tools for SEO. They help search engines discover a website’s URLs and understand how its content is organized. That said, XML sitemaps can also cause indexing problems, especially on large sites.

If the XML sitemap is missing relevant URLs or is outdated, search engines may not crawl and index new or updated content. For large websites that frequently add or modify content, an incomplete or outdated sitemap can leave pages missing from search results. Moreover, if the sitemap doesn’t match the actual site structure, search engines may struggle to understand how the content is organized, which hurts the website’s index quality.

How to Fix Google Page Indexing Issues Caused by XML Sitemap

To avoid XML sitemap issues on a large site, keep your XML sitemaps up to date with new and refreshed content. Set each URL’s lastmod date accurately. Google uses lastmod when it is consistently accurate, but it ignores the priority and changefreq values, so there’s little point in fine-tuning those.

If a sitemap grows too large, break it into smaller, logically organized sitemaps listed in a sitemap index file. A single sitemap file can hold at most 50,000 URLs or 50 MB uncompressed. Smaller sitemaps can make processing easier for Google. They are also easier for you to audit for duplicate entries and for URLs that redirect instead of pointing directly to their final destination.
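On very large sites, the splitting can be automated. Below is a minimal Python sketch. It assumes your CMS can export each page as a (URL, last-modified date) pair. The function name `build_sitemaps`, the `sitemap-N.xml` file names, and the hosting URL are hypothetical. The sketch removes duplicate URLs, writes at most 50,000 URLs per sitemap file, and lists every file in a sitemap index. It does not check the 50 MB size limit.

```python
from datetime import date
from xml.sax.saxutils import escape

# sitemaps.org limit: at most 50,000 URLs (and 50 MB uncompressed) per sitemap file.
# This sketch enforces only the URL-count limit.
MAX_URLS_PER_SITEMAP = 50_000
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemaps(pages, base_url, chunk_size=MAX_URLS_PER_SITEMAP):
    """Split (url, lastmod) pairs into sitemap files plus one sitemap index.

    Returns a dict mapping file name -> XML string. `base_url` is where the
    sitemap files will be hosted (hypothetical layout).
    """
    # De-duplicate by URL, keeping the first lastmod seen for each URL.
    unique = {}
    for url, lastmod in pages:
        unique.setdefault(url, lastmod)
    items = list(unique.items())

    files, index_entries = {}, []
    for start in range(0, len(items), chunk_size):
        name = f"sitemap-{start // chunk_size + 1}.xml"
        entries = "".join(
            f"<url><loc>{escape(url)}</loc><lastmod>{lastmod.isoformat()}</lastmod></url>"
            for url, lastmod in items[start:start + chunk_size]
        )
        files[name] = f'<?xml version="1.0" encoding="UTF-8"?><urlset xmlns="{SITEMAP_NS}">{entries}</urlset>'
        index_entries.append(f"<sitemap><loc>{escape(base_url + name)}</loc></sitemap>")

    index_body = "".join(index_entries)
    files["sitemap-index.xml"] = (
        f'<?xml version="1.0" encoding="UTF-8"?><sitemapindex xmlns="{SITEMAP_NS}">{index_body}</sitemapindex>'
    )
    return files


# Usage: 120,000 hypothetical product URLs become three sitemaps and one index.
pages = [(f"https://www.example.com/product/{n}", date(2024, 1, 1)) for n in range(120_000)]
files = build_sitemaps(pages, "https://www.example.com/")
print(sorted(files))  # ['sitemap-1.xml', 'sitemap-2.xml', 'sitemap-3.xml', 'sitemap-index.xml']
```

You would then submit only the index file in Search Console. Google reads the individual sitemaps from it.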

2. Depleted Crawl Budget

Crawl budget refers to the number of pages a search engine’s crawler, such as Googlebot, is able to crawl on your site within a given timeframe. Once that budget is exhausted, the crawler stops for that period. Pages it didn’t reach won’t be discovered or refreshed until a later crawl, and pages that aren’t crawled can’t be indexed or shown in search results.

Because crawl budget is limited, large websites are more prone to crawling and indexing problems: they need far more crawling to reach a 100% indexing rate. When the budget runs out, some important pages may not get indexed and so never appear in SERPs. This is especially true of pages deep in the site’s hierarchy.
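One way to see where your crawl budget actually goes is to count Googlebot requests per site section in your server access logs. The Python sketch below assumes logs in the common "combined" format. The function name and sample lines are hypothetical. It trusts the user-agent string, which can be spoofed, so verify genuine Googlebot traffic (for example, with a reverse DNS lookup) before acting on the numbers.

```python
import re
from collections import Counter

# Extracts the request path and user agent from a "combined" format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def googlebot_hits_by_section(lines):
    """Count requests whose user agent claims to be Googlebot, per first path segment."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("ua"):
            first_segment = match.group("path").lstrip("/").split("/", 1)[0].split("?", 1)[0]
            hits["/" + first_segment] += 1
    return hits


# Usage with a few hypothetical log lines.
BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0"
sample = [
    f'66.249.66.1 - - [10/Mar/2024:10:00:00 +0000] "GET /admin/login HTTP/1.1" 200 512 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Mar/2024:10:00:01 +0000] "GET /search?q=shoes HTTP/1.1" 200 2048 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Mar/2024:10:00:02 +0000] "GET /search?q=boots HTTP/1.1" 200 2048 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Mar/2024:10:00:03 +0000] "GET /products/shoe-1 HTTP/1.1" 200 4096 "-" "{BOT}"',
    f'203.0.113.7 - - [10/Mar/2024:10:00:04 +0000] "GET /products/shoe-2 HTTP/1.1" 200 4096 "-" "{BROWSER}"',
]
print(googlebot_hits_by_section(sample).most_common())
# [('/search', 2), ('/admin', 1), ('/products', 1)]
```

In this toy sample, half of Googlebot’s requests went to internal search results. That hints those URLs might be worth keeping crawlers away from.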

How to Fix Google Page Indexing Issues Caused by Depleted Crawl Budget

To make better use of the crawl budget on a large website, use robots.txt to keep bots away from pages that don’t need crawling. For instance, block Google’s crawlers from login pages, admin dashboards, and other pages that are not intended for public access. Letting Google crawl these pages only wastes your crawl budget. This guide will show you how to use the robots.txt disallow command in detail.
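Before deploying robots.txt changes, you can check which URLs your rules actually block. This sketch uses Python’s standard-library `urllib.robotparser`. The rules and URLs are hypothetical examples. The parser only does simple prefix matching and does not support the `*` and `$` wildcards Google understands, so treat it as a quick sanity check. Also keep in mind that a disallow rule stops crawling, not necessarily indexing: a blocked URL can still be indexed if other pages link to it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep all crawlers out of login and admin pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

urls = [
    "https://www.example.com/admin/dashboard",
    "https://www.example.com/login?next=/cart",
    "https://www.example.com/products/blue-shirt",
]
for url in urls:
    verdict = "crawlable" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{url} -> {verdict}")
```

The admin and login URLs come out as blocked, and the product page stays crawlable.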

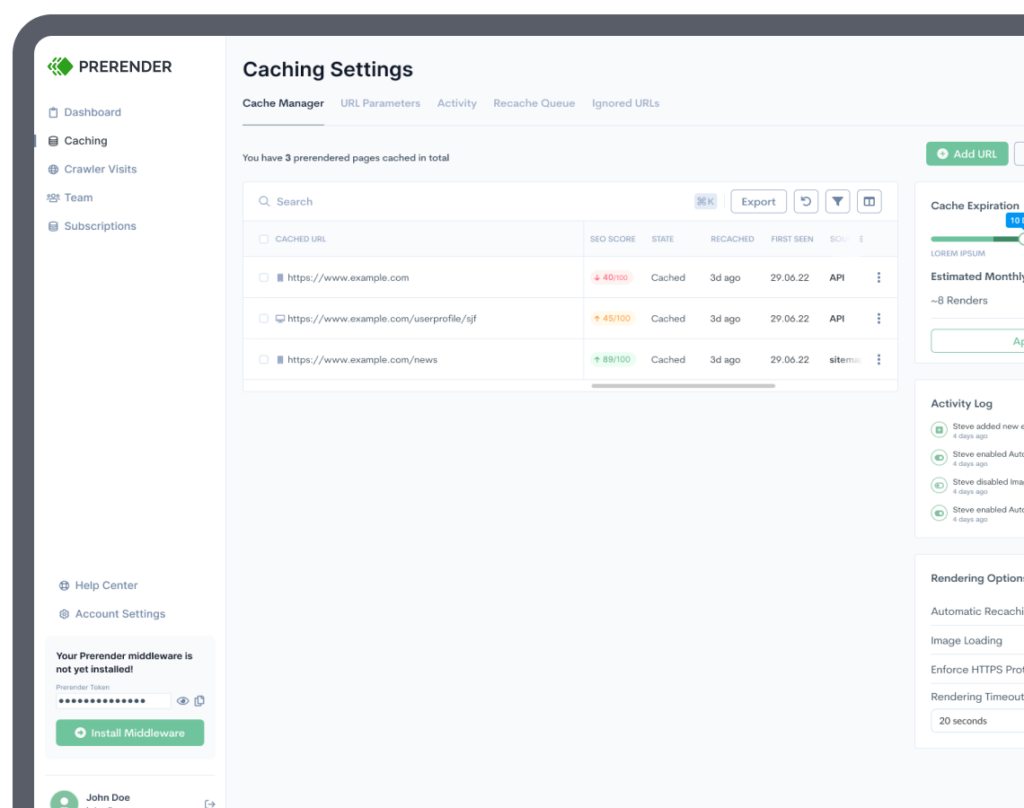

Besides using robots.txt to better manage your crawl budget, adopting a pre-rendering tool like Prerender.io can also help. More about Prerender will be discussed in the next section.

Related: learn 6 best practices for better managing your crawl budget.

3. JavaScript and AJAX Challenges

Most large websites rely heavily on JavaScript and AJAX for a good reason: they’re essential in creating dynamic web content and interactions. However, relying on these technologies has some side effects that can cause Google indexing issues, particularly when indexing new content.

For instance, search engines may not render and execute JavaScript immediately, which delays the indexing of content that relies heavily on client-side rendering. Content loaded dynamically through AJAX may also go unindexed if search engines can’t access or interpret it.
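A quick way to tell whether a page depends on client-side rendering is to check whether its key content is already in the HTML the server sends, before any JavaScript runs. This Python sketch is a simplified check. In practice you would fetch the raw HTML with an HTTP client. The class name, function name, and key phrases here are hypothetical.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text from an HTML document, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def missing_before_render(raw_html, key_phrases):
    """Return the key phrases absent from the server-sent HTML,
    i.e. content that only appears after client-side JavaScript runs."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.parts).split()).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in text]


client_rendered = '<html><body><div id="root"></div><script>renderProduct("Blue running shoe")</script></body></html>'
server_rendered = '<html><body><h1>Blue running shoe</h1><p>Free shipping on all orders</p></body></html>'
key_phrases = ["Blue running shoe", "Free shipping"]
print(missing_before_render(client_rendered, key_phrases))  # ['Blue running shoe', 'Free shipping']
print(missing_before_render(server_rendered, key_phrases))  # []
```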

How to Fix Google Page Indexing Issues Caused by JavaScript Rendering Delays

Deferred-loading techniques such as lazy loading may ease some of these issues, but they can delay the indexing of crucial information, especially on pages with extensive content. Another option is to use Prerender.

Prerender solves these indexing issues by pre-rendering your JavaScript content into static HTML. When Google’s crawlers request a JS page, Prerender serves them the HTML version, a 100% ready-to-index format that allows Google to index the content quickly and accurately.

Prerender also caches the rendered pages in its cloud storage. When Google’s crawlers return to a page whose content hasn’t changed, Prerender’s dynamic rendering solution serves them the cached version. This avoids spending your crawl budget on re-crawling, re-rendering, and re-indexing the page from scratch.
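To illustrate the general idea behind dynamic rendering with a cache, here is a simplified Python sketch. It is not Prerender’s implementation or API. The crawler list, the `fake_render` stand-in for a headless browser, and the one-day cache lifetime are all hypothetical. Requests from known crawlers get pre-rendered HTML, which is rendered once and then served from the cache until the entry expires. Everyone else gets the regular JavaScript app.

```python
import time

# Hypothetical, non-exhaustive list of crawler user-agent substrings.
BOT_SIGNATURES = ("googlebot", "bingbot", "duckduckbot")


class RenderCache:
    """In-memory cache of pre-rendered HTML keyed by URL.

    Entries expire after `max_age` seconds so updated pages get re-rendered.
    """

    def __init__(self, render_fn, max_age=86_400, clock=time.time):
        self.render_fn = render_fn  # hypothetical: turns a URL into fully rendered HTML
        self.max_age = max_age      # one day, an assumed default
        self.clock = clock
        self._store = {}            # url -> (rendered_at, html)
        self.renders = 0            # how many times render_fn actually ran

    def get(self, url):
        cached = self._store.get(url)
        if cached and self.clock() - cached[0] < self.max_age:
            return cached[1]        # fresh cache hit: no re-render
        html = self.render_fn(url)
        self.renders += 1
        self._store[url] = (self.clock(), html)
        return html


def handle_request(url, user_agent, cache, serve_app):
    """Serve cached static HTML to known crawlers and the regular JS app to everyone else."""
    if any(sig in user_agent.lower() for sig in BOT_SIGNATURES):
        return cache.get(url)
    return serve_app(url)


def fake_render(url):
    # Stand-in for a headless-browser render (hypothetical).
    return f"<html><body>Rendered {url}</body></html>"


def spa_shell(url):
    # The empty shell a client-side-rendered app sends before JavaScript runs.
    return '<html><body><div id="app"></div><script src="/app.js"></script></body></html>'


cache = RenderCache(fake_render)
googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
handle_request("/product/42", googlebot_ua, cache, spa_shell)
handle_request("/product/42", googlebot_ua, cache, spa_shell)
print(cache.renders)  # 1: the second crawler visit was served from the cache
```

The expiry is the key design choice. It is a simple way to make sure updated pages are eventually re-rendered rather than served stale forever.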

Find out how Prerender works in detail here, or watch this video to learn more.

4. Duplicate Content

Google defines duplicate content as content that is identical or similar on multiple pages within a website or across different domains. Let’s take an ecommerce website as an example.

Due to the sheer volume of product pages, complex site structures, and variations of similar product descriptions, it’s very easy for large ecommerce websites to end up with duplicate content. Left untreated, this can cause crawling and indexing errors and waste crawl budget.

Websites using content management systems (CMS) that employ templates for certain types of pages (e.g., product pages and category pages) may also face duplication issues if the templates are not carefully customized.

How to Fix Google Page Indexing Issues Caused by Duplicate Content

To handle duplicate content, you can use canonical tags, a technical SEO technique that indicates the preferred version of a page. The tag tells search engines which URL should be treated as the original and indexed. It’s especially useful when multiple URLs lead to the same or very similar content. Here’s an example:

```html
<link rel="canonical" href="https://www.example.com/preferred-url">
```

For paginated content (e.g., category pages with multiple pages of products), you may see advice to add rel="next" and rel="prev" tags to indicate the sequence of pages. Google announced in 2019 that it no longer uses these tags as an indexing signal, although other search engines may. To help Google understand that the pages form a series, link each page to the next and previous ones with regular, crawlable links.

If you have similar content on different pages, consider consolidating it into one comprehensive, authoritative page by removing or rewriting duplicate content where possible. There are several more ways to deal with duplicate content, and we go into those in great detail here.
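On a large site, a first step toward consolidation is finding which URLs are really the same page. This Python sketch groups crawled URLs by a normalized form. The normalization rules are assumptions to adapt to your site: lowercase host, dropped fragment, dropped trailing slash (only safe if your server treats both forms as the same page), and a hypothetical list of ignorable tracking and sorting parameters. Each resulting group is a candidate for a single canonical URL.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed not to change page content; adjust for your site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}


def normalize(url):
    """Reduce a URL to a comparison key: lowercase host, no fragment, no trailing
    slash, ignored parameters dropped, and remaining parameters sorted."""
    parts = urlsplit(url)
    query = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in IGNORED_PARAMS)
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, urlencode(query), ""))


def duplicate_groups(urls):
    """Group URLs sharing a normalized key; keep only groups with more than one URL."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}


urls = [
    "https://www.example.com/shirts/blue/",
    "https://www.example.com/shirts/blue?utm_source=newsletter",
    "https://WWW.example.com/shirts/blue#reviews",
    "https://www.example.com/shirts/red",
]
for key, members in duplicate_groups(urls).items():
    print(key, "<-", members)
```

All three blue-shirt URLs land in one group. From there, you would pick the preferred URL and point the other variants’ canonical tags at it, or redirect them.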

5. Poor Content Quality

Content quality is a critical factor in determining how well a website performs in SERPs. While high-quality content is great for SEO, poor content quality has the opposite effect.

Google aims to deliver the most relevant and valuable results to users. That’s why when the content on a large website is of low relevance or lacks value to users, search engines will deprioritize crawling and indexing the pages.

Outdated SEO tactics such as keyword stuffing, and other spammy techniques, also lower content quality. Because Google’s algorithms are designed to identify and penalize such practices, they can damage your indexing and ranking performance.

How to Fix Google Page Indexing Issues Caused by Low-Quality Content

To maintain your content quality, conduct regular content audits. Remove or update low-quality, outdated, and irrelevant content so that it meets Google’s standards. Focus on creating content that provides genuine value to users. Readable, useful content also tends to improve engagement, such as time on page, and the overall user experience.
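Parts of a content audit can be scripted to shortlist pages for manual review. The Python sketch below flags thin pages and possible keyword stuffing. The word-count and term-share thresholds and the stopword list are hypothetical. They are rough proxies, not quality measures Google has published.

```python
import re
from collections import Counter

# Hypothetical audit thresholds; tune them for your own site.
MIN_WORDS = 300
MAX_TERM_SHARE = 0.05  # flag when one non-stopword term exceeds 5% of all words
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "for", "is", "on", "with"}


def audit_page(text):
    """Return a list of possible quality issues for one page's body text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    issues = []
    if len(words) < MIN_WORDS:
        issues.append(f"thin content ({len(words)} words)")
    terms = Counter(word for word in words if word not in STOPWORDS)
    if terms:
        term, count = terms.most_common(1)[0]
        share = count / len(words)
        if share > MAX_TERM_SHARE:
            issues.append(f"possible keyword stuffing: '{term}' is {share:.0%} of words")
    return issues


stuffed = "cheap shoes " * 50 + "buy cheap shoes online today"
print(audit_page(stuffed))
# ['thin content (105 words)', "possible keyword stuffing: 'cheap' is 49% of words"]
```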

Related: Discover 11 best technical SEO tools for a proper content audit.

6. Content is Blocked by noindex Tags

If you’ve managed the SEO of a large website for years, it’s easy to lose track of which pages you’ve applied noindex tags to. Without realizing it, you publish new content (blogs, pages, etc.) under a page hierarchy that still carries a noindex tag, for example through a shared template. Weeks later, you’re shocked to see that Google still hasn’t indexed it.

How to Fix Google Page Indexing Issues Caused by noindex Tags

Check whether a noindex directive is set in the page’s robots <meta> tag or in its X-Robots-Tag HTTP response header. Then trace back through the parent pages and templates that generate the page to make sure every unintended noindex directive has been removed.
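When auditing many pages, it helps to check both places at once. This Python sketch takes a page’s HTML and response headers. In practice you would fetch these with an HTTP client. The class and function names are hypothetical. For simplicity, it treats headers as a single dict, so repeated X-Robots-Tag headers aren’t handled.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attributes.get("content", "").lower())


def find_noindex(html, headers):
    """Report where a noindex directive appears: the robots meta tag, the X-Robots-Tag header, or both."""
    sources = []
    parser = RobotsMetaParser()
    parser.feed(html)
    if any("noindex" in directive for directive in parser.directives):
        sources.append("meta robots tag")
    # HTTP header names are case-insensitive, so normalize them before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    if "noindex" in normalized.get("x-robots-tag", "").lower():
        sources.append("X-Robots-Tag header")
    return sources


page = '<html><head><meta name="robots" content="noindex, follow"></head><body>New post</body></html>'
print(find_noindex(page, {"Content-Type": "text/html"}))          # ['meta robots tag']
print(find_noindex("<html></html>", {"X-Robots-Tag": "noindex"}))  # ['X-Robots-Tag header']
```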

Related: Discover the difference between nofollow and noindex and how they affect content indexing performance.

7. Broken Internal Links

When Google crawls your content, it follows internal links to discover pages and understand how they relate to each other. Broken links lead crawlers to dead ends. Orphaned pages, which no internal links point to, are hard for crawlers to find at all. Consequently, Google may misunderstand your content’s intent and even treat it as low-quality content.

How to Fix Google Page Indexing Issues Caused by Broken Links

Conduct a regular SEO audit to keep your internal links accurate. Don’t forget to redirect any pages that you’ve merged or deleted with the correct 3xx HTTP status codes (e.g., using a 301 redirect). This way, Google won’t end up on a dead link when crawling and indexing your pages.
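If your crawler exports the internal links on each page and the HTTP status of each URL, a short script can find broken links and orphaned pages. In this Python sketch, the data shapes and the function name are hypothetical. The status map should also include URLs from your sitemap or CMS, since orphaned pages can’t be reached by following links. For simplicity, the sketch treats 4xx and 5xx targets as broken and does not follow redirects, so a page reachable only through a redirect will be reported as an orphan.

```python
from collections import deque


def audit_internal_links(links, status, start="/"):
    """Find broken internal links and orphaned pages.

    links:  page URL -> list of internal link targets found on that page
    status: URL -> HTTP status code (crawl results plus sitemap/CMS URLs)
    Returns (broken_links, orphan_pages).
    """
    # Targets missing from the status map are treated as broken (assumption).
    broken = [
        (page, target)
        for page, targets in links.items()
        for target in targets
        if status.get(target, 404) >= 400
    ]

    # Breadth-first walk from the start page over links to live (200) pages.
    reachable, queue = {start}, deque([start])
    while queue:
        for target in links.get(queue.popleft(), []):
            if target not in reachable and status.get(target) == 200:
                reachable.add(target)
                queue.append(target)

    # Live pages that no chain of internal links reaches are orphans.
    orphans = sorted(url for url, code in status.items() if code == 200 and url not in reachable)
    return broken, orphans


links = {
    "/": ["/shoes", "/old-sale"],
    "/shoes": ["/shoes/red", "/"],
    "/shoes/red": ["/shoes"],
}
status = {"/": 200, "/shoes": 200, "/shoes/red": 200, "/old-sale": 404, "/shoes/blue": 200}
broken, orphans = audit_internal_links(links, status)
print(broken)   # [('/', '/old-sale')]
print(orphans)  # ['/shoes/blue']
```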

Minimize Crawl Errors and JavaScript Rendering with Prerender

Fixing Google indexing issues on large websites can be challenging. By taking proactive measures like those discussed above, you can achieve effective indexing and maximize the online success of your enterprise website.

If you want to solve crawl errors and page indexing issues once and for all, adopt Prerender. Not only do we save your crawl budget and serve bots ready-to-index versions of your JavaScript-based pages, but we also offer other technical SEO services, including sitemap crawling and 404 checkers.

Achieve 100% indexed pages with Prerender. Sign up today and get 1,000 renders per month for free.

To help you reach your full SEO potential when dealing with thousands of URLs, here are a few detailed guides:

- 6-Step Recovery Process After Getting De-Indexed by Google

- Index Bloating: What It Means for Crawl Budgets and How to Fix It

- Understanding Google’s “Crawled – Currently Not Indexed” Coverage Guide

- 10-Step Checklist to Help Google Index Your Site

FAQs – How to Fix Google Page Indexing Issues

Answering your questions about Google page indexing issues and how to address them.

1. Google Hasn’t Indexed My New Content. What Should I Do?

If your new content hasn’t been indexed for a while, check the following factors, which may cause Google to delay indexing your content or not index it at all:

- A robots.txt ‘disallow’ rule blocks Google’s crawlers from accessing your content, or a ‘noindex’ tag tells Google not to index it

- The content is thin or duplicated, so Google deprioritizes it for indexing

- A depleted crawl budget means Google stops crawling before it reaches your content

- Your XML sitemap is incorrect or outdated

2. How Can I Detect Google Indexing Issues?

To detect whether your website has any Google indexing issues, use Google Search Console. Go to Pages and look at the Page Indexing report. Spot the URLs with the ‘Not Indexed’ status and find out the reasons why they aren’t indexed in the ‘Why Pages Aren’t Indexed’ section.

3. How Can I Ask Google to Reindex My Content?

To ask Google to reindex your content, go to Google Search Console. Go to ‘Pages’ > open the ‘Page Indexing’ report > choose the issue list you want to validate > click ‘Validate Fix.’ This tells Google that you have solved the problem and asks it to recrawl and reindex the affected pages.

4. How Can I Accelerate Google Indexing?

There are several ways to accelerate Google indexing, such as:

- Remove infinite crawl spaces and other crawl traps, such as redirect loops and error pages that incorrectly return a 200 status code (soft 404s)

- Reduce your server response time and improve other page performance factors, such as Core Web Vitals

- Improve your site structure and internal linking

- Optimize your sitemap
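Redirect loops and long redirect chains can be spotted in a redirect map before crawlers run into them. This Python sketch assumes you can export redirects as source-to-target pairs, for example from your server config or CMS. The function name and the 10-hop cutoff are assumptions.

```python
def trace_redirects(redirects, start, max_hops=10):
    """Follow a source -> target redirect map from `start`.

    Returns (path, verdict), where verdict is "ok" (0 or 1 redirect),
    "chain" (several redirects in a row), "loop", or "too many hops".
    """
    path = [start]
    while path[-1] in redirects:
        target = redirects[path[-1]]
        if target in path:
            return path + [target], "loop"  # came back to a URL already visited
        path.append(target)
        if len(path) - 1 > max_hops:
            return path, "too many hops"
    return path, ("chain" if len(path) - 1 > 1 else "ok")


redirects = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x", "/old": "/new"}
for url in ["/old", "/a", "/x"]:
    print(url, trace_redirects(redirects, url))
# /old (['/old', '/new'], 'ok')
# /a (['/a', '/b', '/c'], 'chain')
# /x (['/x', '/y', '/x'], 'loop')
```

Loops should be broken outright. Chains are worth collapsing so that each old URL redirects straight to its final destination.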

Get the details on how to get your website indexed faster by Google here.