Content that Google can’t render is effectively invisible to search engines. No matter how compelling your pages are, if their content isn’t indexed, it won’t contribute to your search engine rankings.

Worse, partially rendered pages, which are common with JavaScript-generated content, can be misinterpreted as thin content or even spam, potentially harming your SEO.

In today’s JavaScript SEO article, we’re going to explore the causes of partially rendered or empty pages and provide practical solutions so Google can read and index all of your pages properly.

What are Partially Rendered (JavaScript) Pages?

Partially rendered pages are web pages that Google can’t render completely because of crawl budget constraints, poor critical rendering path optimization, or other technical SEO issues.

As a result, Google indexes some, but not all, of the page’s content. This often happens with JavaScript-heavy websites.

While Google can typically render and index simpler HTML content, complex JavaScript requires more processing power and uses up more of your crawl budget, which can be problematic. This often leads to incomplete indexing, with essential content, internal links, and other critical SEO elements missing from Google’s index.

Partially rendered pages put your site at a disadvantage, as the missing content and SEO elements can significantly impact your search rankings. When a website has rendering issues across the board, it’s common for its URLs not to be indexed at all.

Note: There are also scenarios where your pages appear empty because Google fails to render your pages completely. That said, it’s not common for Google to index blank pages.

Related: discover your site’s rendering performance by calculating your render ratio.

Why Does Partial Rendering Happen?

We’ve touched on the most common reason for partial rendering above. Let’s dive deeper into the other causes of incompletely rendered content. These include how your website is built (a JavaScript-heavy website is prone to partial rendering), technical SEO factors, and Google’s limited resources.

1. You’re Blocking Critical Resources Through Your Robots.txt File

It’s a common mistake to stop Google from accessing JS, CSS, and other files to “save crawl budget.” The idea behind this is to force search engines to crawl only HTML files, which is where most sites’ content is.

However, for sites using JavaScript frameworks or injecting dynamic content into the page, this means Google won’t get the necessary files to build your page correctly and will miss all that extra content.

For single-page apps (SPAs), it is even worse. If Google can’t crawl JS files, it’ll see all your pages as blank files because there’s no content on the HTML template before JavaScript is executed.
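To check whether your own rules have this effect, you can test resource URLs against your robots.txt. The following is a minimal sketch using Python's standard `urllib.robotparser`; the robots.txt rules, the `example.com` URLs, and the helper name `blocked_resources` are all hypothetical and only serve as an illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks script and style folders "to save crawl budget".
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/js/
Disallow: /assets/css/
"""


def blocked_resources(robots_txt: str, resource_urls: list[str],
                      user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical resources that a page needs in order to render.
resources = [
    "https://www.example.com/assets/js/app.js",
    "https://www.example.com/assets/css/main.css",
    "https://www.example.com/images/logo.png",
]

for url in blocked_resources(ROBOTS_TXT, resources):
    print("Blocked for Googlebot:", url)
```

Any JS or CSS file reported as blocked is a file Google can't use when it builds the page, so those rules are worth revisiting first.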

Resource: Robots.txt SEO: Best Practices, Common Problems & Solutions

2. Poor JavaScript Optimization

Google needs more resources to render a JavaScript page than a static HTML one. This is a fact, and there’s no way around it.

It also means that your crawl budget suffers the consequences.

If you are not using your crawl budget efficiently and helping Google as much as possible, Google will probably deplete your crawl budget before rendering the entirety of your page.

That’s because JavaScript is resource-intensive and render-blocking: until the crawler has dealt with it, it can’t move on to the next step and render the rest of the page.

So, optimizing rendering and JavaScript, as a whole, is fundamental.
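One quick starting point is to list the external scripts in a page's `<head>` that load without `async` or `defer`, since those pause HTML parsing until they have been downloaded and executed. The sketch below uses Python's standard `html.parser`; the sample markup, the class name, and the helper name are hypothetical, and a real audit would cover more than this single check.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collect external <script> tags in <head> that lack async/defer."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)  # boolean attributes such as async map to None
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and "src" in attr_map:
            is_module = attr_map.get("type") == "module"  # modules defer by default
            if not is_module and "async" not in attr_map and "defer" not in attr_map:
                self.blocking.append(attr_map["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_blocking_scripts(html: str) -> list[str]:
    """Return the src of each parser-blocking script found in <head>."""
    finder = BlockingScriptFinder()
    finder.feed(html)
    finder.close()
    return finder.blocking


# Hypothetical page template used only for illustration.
SAMPLE_HTML = """
<html><head>
<script src="/js/framework.js"></script>
<script src="/js/analytics.js" async></script>
<script src="/js/widgets.js" defer></script>
</head><body><div id="app"></div></body></html>
"""

print(find_blocking_scripts(SAMPLE_HTML))
```

Scripts flagged this way are candidates for `defer`, `async`, or moving out of the critical rendering path, depending on whether the page needs them to show its main content.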

Resource: Why JavaScript Complicates Indexation

3. Low-Quality Content Prevents Google From Rendering

While still a theory, there’s evidence suggesting Google might prioritize certain sections of a web page layout over others, regardless of whether the content is rendered via HTML, CSS, or JavaScript. Onely conducted an experiment and found that Google may give less weight to content in certain locations on a page.

To summarize the study, they found that Google tries to determine which sections contain the page’s main content, indexes those, and ignores some of the others, potentially to save resources and index as much critical content as possible.

How can you plan your site’s architecture if Google randomly ignores sections?

This is a tough one, but it’s also a reality.

If we have to summarize why Google struggles with JavaScript, it’s because JS is simply much more demanding than static HTML.

Source: Onely

Dynamic content takes up to 20X more resources to render than plain HTML – no wonder Google is looking to cut some corners.

How to See If You Have Partially Rendered Pages

Let’s focus on the two most straightforward ways to test your website’s rendering to see your content as Google does.

1. Test Your URLs Using Search Console

The first strategy is using the URL Inspection tool within Google Search Console (GSC).

Submit the URL you want to test and click on “TEST LIVE URL” on the right side of your screen.

It’ll request your page, as the standard crawler does, and provide details about your page.

After the test, click “VIEW TESTED PAGE” to see the details of your rendered page.

It’ll show you the HTML rendered, a screenshot of the page, and details like HTTP response and JavaScript console messages.

All of this information is useful, but in this case, you want to look at the screenshot and the HTML fetched.

The screenshot will show how much of the page is rendered before the test is completed. There’s a good chance it won’t take a screenshot of your entire page, but don’t worry.

What matters the most is that the above-the-fold content is completely rendered, and the layout works appropriately. If you are seeing missing content or layout issues at this stage, it’s time to optimize your critical rendering path to prioritize above-the-fold content.

Note: If your above-the-fold content isn’t rendered, there’s a big chance the rest of your content isn’t as well.

The next thing to check is whether or not your content is within the rendered HTML.

We’ll take the following sentence from our FAQ section for this example.

This section’s content is hidden and doesn’t appear on the screenshot, so it’s a good candidate for the test. That said, you should copy a fragment of text that’s only visible after JavaScript is executed. If you can find it, then Google can as well.

Do this for every page type or template you’re using, and you’ll be able to determine how much of your page is being rendered and what sections aren’t.
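If you have many templates to check, the same comparison can be scripted. The sketch below assumes you have saved two strings per page: the initial HTML (for example, from "view source") and the rendered HTML copied from the tested page in GSC. The function names, status labels, and sample markup are hypothetical.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect text nodes, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def visible_text(html: str) -> str:
    """Return the page text with whitespace collapsed to single spaces."""
    extractor = _TextExtractor()
    extractor.feed(html)
    extractor.close()  # flush any text still buffered by the parser
    return " ".join(" ".join(extractor.parts).split())


def fragment_status(fragment: str, raw_html: str, rendered_html: str) -> str:
    """Classify where a text fragment shows up: initial HTML, rendered HTML, or neither."""
    needle = " ".join(fragment.split()).lower()
    if needle in visible_text(raw_html).lower():
        return "present in initial HTML"
    if needle in visible_text(rendered_html).lower():
        return "added by JavaScript and rendered"
    return "missing from rendered HTML"


# Hypothetical single-page-app markup before and after JavaScript runs.
raw = '<div id="app"></div><script>render()</script>'
rendered = '<div id="app"><h2>FAQ</h2><p>How does rendering work?</p></div>'

print(fragment_status("How does rendering work?", raw, rendered))
```

A result of "missing from rendered HTML" for a fragment you can see in your browser is the signal that this part of the template is not being rendered for Google.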

2. Using Google Search

Here’s a little trick you can use to see whether Google is indexing a piece of text. Navigate to Google and use this formula for your search:

site:www.yourdomain.com “the text you want to search for”

Note: Use the version of your site that’s getting indexed. In our case, using the “www” will return nothing.

Here’s an example using the same text fragment as before:

This means that Google has rendered and indexed the fragment.
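If you want to run this check for a list of fragments, you can generate the search URLs and open them by hand. This is a minimal sketch; the helper name `site_search_url` and the placeholder domain are hypothetical, and it only builds the URL rather than querying Google automatically.

```python
from urllib.parse import quote_plus


def site_search_url(domain: str, fragment: str) -> str:
    """Build a Google search URL for an exact-match phrase restricted to one site."""
    # No space after "site:", and the fragment goes in double quotes.
    query = f'site:{domain.strip()} "{fragment.strip()}"'
    return "https://www.google.com/search?q=" + quote_plus(query)


# Placeholder domain and text; replace them with the indexed version of your site.
print(site_search_url("yourdomain.com", "the text you want to search for"))
```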

How to Fix Partially Rendered (and Empty) JS Pages

Waiting for Google to fix partially rendered pages shouldn’t be your first approach. Instead, you need to solve the JavaScript problem yourself.

1. Site Optimization is Critical

Creating the perfect conditions for Google to crawl, render, and index your pages properly requires a solid technical SEO foundation, clear site architecture, and UI/UX design to:

- Ensure content loads fast

- Make URLs (even deep ones) easy to find

- Provide the best user experience possible

Here are some resources you can use to guide your efforts:

- How to Optimize Your Crawl Budget with Internal Links

- How to Find and Eliminate Render-Blocking Resources

- How to Avoid Missing Content in Web Crawls

- How to Optimize Your Website for Mobile-First Indexing

- 7 Common SPA SEO Challenges and Solutions

These optimizations will do more than help with rendering. They’ll help you make Google fall in love with your site – which is super important for your SEO campaign.

However, that’s the hard part. The easiest and most impactful part takes just a couple of hours.

2. Use Dynamic Rendering with Prerender

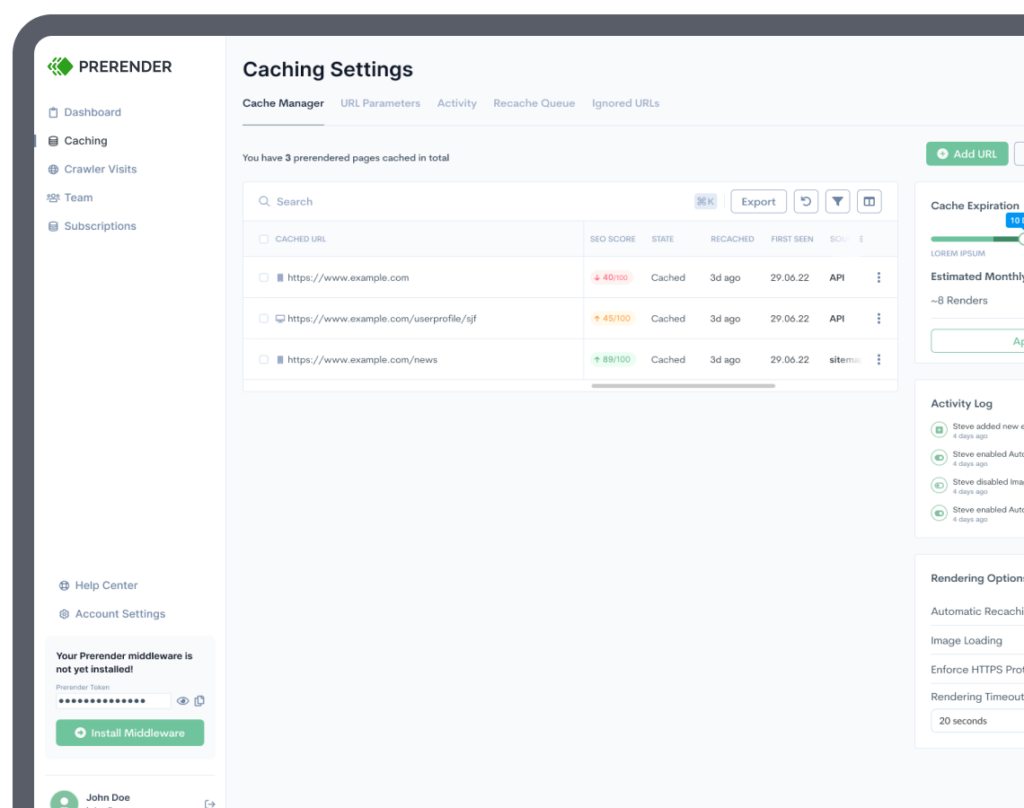

Prerender is a plug-and-play solution that takes complete care of the rendering process for search engines. All you need to do is install the middleware that matches your website’s tech stack and submit your sitemap through your dashboard.

From there, Prerender will crawl your pages, generating and caching a fully rendered and functional snapshot of them. When a search bot requests one of these pages, Prerender will send the 100% ready-for-indexing snapshot, removing the barrier of JavaScript from the root.

Because the snapshot is fully rendered (including third-party scripts), your pages will gain near-perfect page performance scores (including Core Web Vitals) and an average server response time of 0.03s.

No more rendering limitations holding your site back. Google will be able to index 100% of your content every time.
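Conceptually, dynamic rendering comes down to one routing decision per request: search bots get the cached snapshot, and everyone else gets the regular JavaScript app. The sketch below illustrates that idea only. It is not Prerender's actual middleware, and the bot list, function names, and cache structure are all hypothetical.

```python
# Hypothetical list of crawler user-agent substrings; real middleware ships its own list.
BOT_SIGNATURES = ("googlebot", "bingbot", "duckduckbot")


def is_search_bot(user_agent: str) -> bool:
    """Return True if the User-Agent header looks like a known search crawler."""
    ua = user_agent.lower()
    return any(signature in ua for signature in BOT_SIGNATURES)


def choose_response(user_agent: str, path: str,
                    snapshot_cache: dict[str, str], app_shell: str) -> str:
    """Serve a cached snapshot to bots when one exists; otherwise serve the JS app shell."""
    if is_search_bot(user_agent) and path in snapshot_cache:
        return snapshot_cache[path]
    return app_shell


# Hypothetical data: one pre-rendered page and the empty shell that browsers receive.
snapshots = {"/pricing": "<html><body><h1>Pricing</h1><p>Plans and features</p></body></html>"}
shell = '<html><body><div id="app"></div><script src="/app.js"></script></body></html>'

googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"

print(choose_response(googlebot, "/pricing", snapshots, shell))
print(choose_response(browser, "/pricing", snapshots, shell))
```

In this sketch a bot requesting a path without a snapshot falls back to the app shell; an on-the-fly rendering step would replace that fallback.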

Learn how Prerender works in more detail.

Prerender.io Ensures 100% Rendered Pages

While some SEO experts and developers recommend DIY solutions like server-side rendering (SSR), static rendering, and hydration to address partially rendered JavaScript pages, each of these approaches has its own trade-offs, including cost and technical complexity.

We may be biased, but we believe Prerender offers a superior solution. Unlike SSR or hydration, which require you to maintain your own server, Prerender handles server maintenance for you. Our pricing starts at just $90 per month, making Prerender the best JavaScript rendering solution to mitigate partially rendered or indexed pages.

Check our comparisons page for a detailed breakdown of how Prerender compares to other solutions, and the cost and resource comparison of implementing in-house SSR vs. the plug-and-play Prerender.io JS rendering solution.

Try Prerender free for your first 1,000 renders and see the improvement we can make to your rendering performance.

See you in the SERPs!

FAQs on Partially Rendered Pages and Their SEO Effects

Answering some of the most common questions about JavaScript rendering, partially rendered JS pages, and Prerender.io.

1. Why Does Partial Rendering Often Happen to JavaScript Websites?

Google’s indexing process for JavaScript-heavy websites involves two steps: first, crawling, and then rendering the JavaScript to see the full content. This rendering step can take weeks or even months, while the initial HTML is often indexed quickly. This discrepancy often results in pages being partially rendered and indexed, hindering their SEO potential and traffic generation, as Google doesn’t immediately see the complete content.

Learn more about the JavaScript crawling and indexing process here.

2. How Does Prerender Solve Partially Rendered JavaScript Pages?

Prerender renders JavaScript pages into a ready-to-index HTML version and caches them. This rendering can happen ahead of search engine crawler requests (hence the name “pre-render”) or on the fly. Prerender then serves the HTML version to crawlers, allowing them to understand and index the content quickly and accurately.

Discover more about Prerender and its rendering process here.

3. Can Crawl Budget Cause Partially Rendered JS Pages?

Yes, a limited crawl budget can lead to partially rendered JavaScript pages. If Googlebot runs out of its allocated crawl budget before fully rendering and indexing your JavaScript content, the process will stop, leaving those pages only partially indexed.

You can manage your crawl budget by using robots.txt to prioritize important pages or, more effectively, by using a solution like Prerender, which enables Googlebot to quickly index fully rendered pages, thus optimizing crawl budget usage.