As your website grows in size and more collaborators start to work on it simultaneously, there’s a greater chance for technical SEO issues to appear. This makes technical optimizations more important for mid-size to enterprise websites competing for top positions.

Technical SEO is the foundation of your organic efforts; without a solid foundation, your content won’t rank on search engines. However, no company has unlimited resources, so you’ll want to focus on the tasks with the highest impact instead of wasting your budget on good-to-have but low-priority optimizations. Here are 6 technical SEO tasks you can focus on in 2023 to increase your organic reach:

1. Handle HTTP and Status Codes

When it comes to status codes, there are two main things to work on: serving HTTPS pages and handling error status codes.

Hypertext transfer protocol secure (HTTPS) has been confirmed as a ranking signal since 2014 when Google stated that they use HTTPS as a lightweight signal. It offers an extra layer of encryption that traditional HTTP doesn’t provide, which is crucial for modern websites that handle sensitive information.

Furthermore, HTTPS is also used as a factor in a more impactful ranking signal: page experience.

Just transitioning to HTTPS will give you extra points with Google, plus increase your customers’ sense of security, which can increase conversions. (Note: There’s also a chance your potential customers’ browsers and Google will block your HTTP pages.) After all, if neither search engines nor users can access your content, what’s the point of it? The second thing to monitor and manage is error codes.

Finding and fixing error codes

Error codes themselves are not actually harmful.

They tell browsers and search engines the status of a page, and when set correctly, they can help you manage site changes without causing organic traffic drops (or at least keep them to a minimum).

For example, after removing a page, it’s OK to return a 404 not found message.

(Note: You’ll also want to add links to help crawlers and users navigate to relevant pages.)

However, problems like soft 404 errors – pages that show a “not found” message (or no real content) while the server still returns a 200 OK status code – can cause indexation problems for your website. Because the status code says the page is fine, Google can keep the URL indexed, so users find it in the search results and land on an error message when they click.

You can fix soft 404s by redirecting the URL to a working and relevant page on your domain, or by returning a real 404 status code if the content is gone for good.
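As a rough illustration, a soft 404 can be flagged by comparing the status code with what the page actually says. The sketch below assumes you already have each page’s status code and HTML body from a crawl; the function name and the phrase list are hypothetical and would need tuning to your own error template.

```python
# Hypothetical phrase list; adjust it to the wording your error template uses.
NOT_FOUND_PHRASES = ("page not found", "404 not found", "no longer available")


def looks_like_soft_404(status_code: int, body: str) -> bool:
    """Return True when a page answers 200 OK but its text reads like an error page."""
    if status_code != 200:
        return False  # a real 404/410 is a hard error, not a soft one
    text = body.lower()
    return any(phrase in text for phrase in NOT_FOUND_PHRASES)
```

This is only a heuristic: a legitimate article that happens to contain one of the phrases would be flagged too, so treat the output as a review list rather than a verdict.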

Other errors you’ll want to handle as soon as possible are:

- Redirect chains – these are chains of redirection where Page A redirects to Page B, which then redirects to Page C until finally arriving at the live page. These are a waste of crawl budget, lower your PageSpeed score, and harm page experience. Make all redirects a one-step redirect.

- Redirect loops – unlike chains, these don’t get anywhere. This happens when Page A redirects to Page B, which redirects back to Page A (of course, they can become as large as you let them), trapping crawlers in loops and making them time out without crawling the rest of your website.

- 4XX and 5XX errors – sometimes, pages break because of server issues or unwanted results from changes and migrations. In situations like these, you’ll want to handle all of these issues as soon as possible to avoid getting flagged as a low-quality website by search engines.

- Unnecessary redirects – when you merge content or create new pages covering older pages’ topics, redirecting is a great tool to pass beneficial signals. However, sometimes it doesn’t make sense to redirect, and it’s best just to set them as 404 pages.
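To make the difference between a chain and a loop concrete, here is a minimal sketch that walks a redirect map and classifies each starting URL. It assumes you have exported your redirects as a simple source-to-target mapping; the function name, the `max_hops` limit, and the example paths are all hypothetical.

```python
def trace_redirects(start: str, redirects: dict[str, str], max_hops: int = 10):
    """Follow `start` through a redirect map and classify the path.

    Returns (kind, path), where kind is "ok", "redirect", "chain", or "loop".
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects and len(path) <= max_hops:
        current = redirects[current]
        if current in seen:
            # We came back to a URL we already visited: crawlers would be trapped.
            return "loop", path + [current]
        path.append(current)
        seen.add(current)
    hops = len(path) - 1
    if hops == 0:
        return "ok", path
    return ("redirect" if hops == 1 else "chain"), path


redirects = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x", "/old": "/new"}
kind, path = trace_redirects("/a", redirects)
print(kind, path)
```

For anything reported as a chain, the last element of `path` is the live page, so the fix is to point the first URL straight at it.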

You can use a tool such as Screaming Frog to imitate Googlebot and crawl your site. This tool will find all redirect chains, redirect loops, and 4XX and 5XX errors and organize them in easy-to-navigate reports. It’s also worth checking the 404 reports in Google Search Console under the Indexing tab.

Once you’ve arrived on the reports page, work your way through the list to fix them.

2. Optimize Site Architecture

Your website’s architecture is the hierarchical organization of the pages starting from the homepage.

Image source: Bluehost

For small sites, architecture isn’t usually top of mind, but for enterprise websites, a clear and easy-to-navigate structure will improve crawlability, indexation, usability, and scalability.

When you create a good site structure, your URLs will be easier to categorize and manage, thus making it easier for collaborators to create new pages without conflicting slugs or cannibalizing keywords.

Also, search engines will have more cohesive internal linking signals, which will help build thematic authority and find deeper pages within your domain – reducing the chance of orphan pages.
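One way to audit a site structure is to measure how many clicks each page is from the homepage and which known pages can’t be reached at all. The sketch below assumes you have an internal link graph (page to list of linked pages) from a crawl; the function names and example URLs are hypothetical. Note that “unreachable from the homepage” is slightly broader than “orphan”, since it also catches pages that are only linked from other unreachable pages.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; depth = minimum number of clicks."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def unreachable_pages(links: dict[str, list[str]], home: str = "/") -> set[str]:
    """Known pages that cannot be reached from the homepage through internal links."""
    known = set(links) | {t for targets in links.values() for t in targets}
    return known - set(click_depths(links, home))


links = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/post-1/"],
    "/products/": [],
    "/landing/": ["/"],  # links out, but nothing links to it
}
print(click_depths(links))
print(unreachable_pages(links))
```

Pages with a large depth value are candidates for better internal linking, and anything in the unreachable set needs at least one link from the main structure.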

3. Improve Mobile Performance and Friendliness

Mobile traffic has increased exponentially in recent years. With more technologies making it easier for users to search for information, make transactions, and share content, this trend is not slowing down.

If your site is not mobile-friendly in terms of both performance and responsiveness, search engines and users will start to ignore your pages. In fact, mobile friendliness is such an important ranking factor that Google has created dedicated tools to measure mobile performance and responsiveness, as well as introduced mobile-first indexing.

To improve your mobile performance, you’ll need to pay attention to your JavaScript, as mobile processors aren’t as powerful as desktop processors. Using code splitting, async or defer attributes, and browser caching are good starting points to optimize your JS for mobile devices.
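A quick way to find candidates for `async` or `defer` is to list the external scripts that have neither. The sketch below uses Python’s standard `html.parser` on a page’s HTML; the class and function names are hypothetical, and it only inspects the markup, not how each script actually behaves.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collect external <script> tags that have neither async nor defer."""

    def __init__(self):
        super().__init__()
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        attr_map = dict(attrs)  # boolean attributes such as defer map to None
        src = attr_map.get("src")
        if not src or "async" in attr_map or "defer" in attr_map:
            return
        if attr_map.get("type") == "module":
            return  # module scripts are deferred by default
        self.blocking.append(src)


def find_blocking_scripts(html: str) -> list[str]:
    finder = BlockingScriptFinder()
    finder.feed(html)
    return finder.blocking
```

Not every script on the list should be deferred (some must run before the page renders), so use the result as a checklist to review with your developers.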

To optimize your mobile site’s responsiveness and design, focus on creating a layout that reacts to the users’ screens (e.g., using media queries and viewport meta tags), simplify forms and buttons, and test your pages on different devices to ensure compatibility.
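As a small example of checking the basics at scale, the sketch below tests whether a page declares a responsive viewport meta tag. It assumes you have the page’s HTML as a string; the names are hypothetical, and a passing result only means the tag is present, not that the layout is actually responsive.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        is_viewport = tag == "meta" and (attr_map.get("name") or "").lower() == "viewport"
        if is_viewport and self.viewport is None:
            self.viewport = attr_map.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Ignore spacing differences such as "width = device-width"
    return "width=device-width" in finder.viewport.replace(" ", "")
```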

(Note: You can find a deeper explanation of mobile optimization in the links above.)

4. Pre-render Dynamic Pages to Ensure Proper Indexation

Handling JavaScript is one of the most critical tasks to prioritize for your enterprise website, as a lot of JS-related technical SEO issues need to be addressed to ensure proper crawlability and indexation.

These issues are even more prevalent on enterprise websites because of their size and complexity.

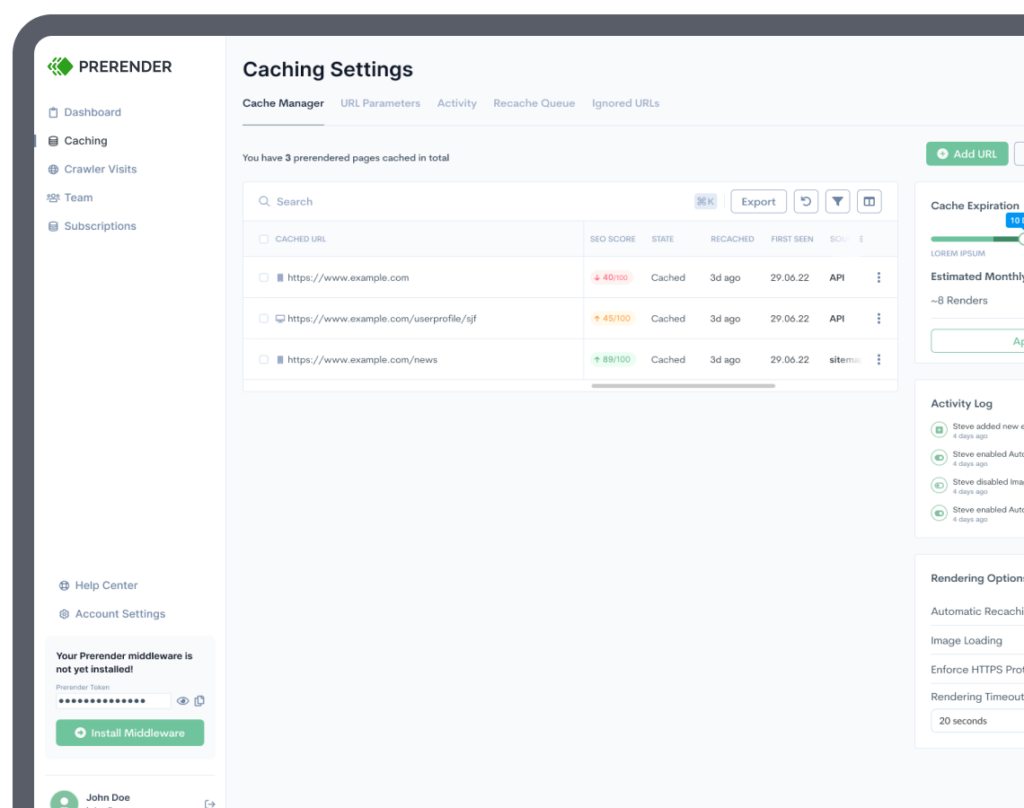

However, a quick and cost-effective solution is to pre-render your pages using Prerender.

For a long time, Prerender has been recommended by Google to set dynamic rendering correctly and without the overhead of setting and maintaining your own servers and renderers. This is how it works:

- Install Prerender’s middleware which will identify search engine user agents and send the request to Prerender.

- From there, Prerender will download all necessary files from your server and take a snapshot of the fully-rendered page.

- It’ll send back a static HTML version of your page through your server to (for example) Googlebot.

This way, all JavaScript is executed on Prerender’s server, which takes the burden off Google’s renderer and ensures there’s no missing content. Because Prerender caches the snapshot and serves it instantly on request, your Page Speed scores improve, and you avoid most JS-related SEO issues without compromising your users’ experience. It also helps Google find new links quicker because Googlebot doesn’t need to wait for your page to render before accessing the content, which improves your website’s crawlability and lets Googlebot assign you a higher crawl budget.
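The routing idea behind dynamic rendering can be sketched in a few lines. This is not Prerender’s actual middleware: the bot list is a short, incomplete assumption, and `render_snapshot` and `serve_app` are hypothetical callables standing in for the rendering service and your normal application.

```python
# Hypothetical, incomplete list of crawler user-agent substrings.
BOT_TOKENS = ("googlebot", "bingbot", "duckduckbot", "baiduspider", "yandex")


def is_search_engine_bot(user_agent: str) -> bool:
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def handle_request(user_agent, url, render_snapshot, serve_app, cache):
    """Serve cached static HTML to crawlers and the normal JS app to everyone else."""
    if not is_search_engine_bot(user_agent):
        return serve_app(url)
    if url not in cache:
        # First crawler visit: render once, then reuse the snapshot.
        cache[url] = render_snapshot(url)
    return cache[url]
```

The cache is what makes repeat crawler requests fast: the page is rendered once and every later bot request for the same URL gets the stored HTML.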

(Note: Here is a deeper explanation of how Prerender works.)

5. Optimize Your XML Sitemaps and Keep Them Updated

Your XML sitemap is a great tool to tell Google which pages to prioritize, help Googlebot find new pages faster, and create a structured way to provide Hreflang metadata.

To begin with, clean all unnecessary and broken pages from the sitemap. For example, you don’t want paginated pages on your sitemap, as in most cases, you don’t want them to rank higher on Google.

Also, including redirected or broken pages will signal to Google that your sitemap isn’t optimized and could make Googlebot ignore your sitemap altogether because it can see it as an unreliable resource.

You only want to include pages that you want Google to find and rank on the SERPs – including category pages – and leave out pages with no SEO value. This will improve crawlability and make indexation much faster.
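Here is a minimal sketch of that clean-up step, assuming you have a crawl export with each URL’s status code and an optional `noindex` flag. The field names, the pagination patterns, and the example.com URLs are all hypothetical.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical pagination patterns; adjust to your own URL scheme.
PAGINATION = re.compile(r"([?&]page=\d+|/page/\d+/?$)")


def sitemap_urls(pages: list[dict]) -> list[str]:
    """Keep only indexable, 200-status, non-paginated URLs."""
    return [
        p["url"]
        for p in pages
        if p["status"] == 200
        and not p.get("noindex", False)
        and not PAGINATION.search(p["url"])
    ]


def build_sitemap(urls: list[str]) -> str:
    """Render the URLs as a minimal XML sitemap."""
    entries = "\n".join(f"  <url><loc>{escape(u)}</loc></url>" for u in urls)
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        f"{entries}\n"
        "</urlset>"
    )


pages = [
    {"url": "https://example.com/shoes/", "status": 200},
    {"url": "https://example.com/shoes/?page=2", "status": 200},
    {"url": "https://example.com/old/", "status": 301},
    {"url": "https://example.com/gone/", "status": 404},
    {"url": "https://example.com/cart/", "status": 200, "noindex": True},
]
print(build_sitemap(sitemap_urls(pages)))
```

Regenerating the sitemap from fresh crawl data on a schedule keeps redirected and broken URLs from creeping back in.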

On the other hand, enterprise websites tend to have several localized versions of their pages. If that’s your case, you can use the following structure to specify all your Hreflang tags from within the sitemap:

    <url>
      <loc>https://prerender.io/react/</loc>
      <xhtml:link rel="alternate" hreflang="de" href="https://prerender.io/deutsch/react/"/>
      <xhtml:link rel="alternate" hreflang="de-ch" href="https://prerender.io/schweiz-deutsch/react/"/>
      <xhtml:link rel="alternate" hreflang="en" href="https://prerender.io/react/"/>
    </url>

This system will help you avoid common Hreflang issues that can truly harm your organic performance.
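Writing these entries by hand gets error-prone once you have many locales, because every localized URL needs its own `<url>` entry that lists all alternates, itself included. The sketch below generates that structure with Python’s standard `xml.etree.ElementTree`; the function name is hypothetical, and the input is assumed to be a simple mapping from hreflang code to URL.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"
ET.register_namespace("", SITEMAP_NS)        # default namespace for <urlset>, <url>, <loc>
ET.register_namespace("xhtml", XHTML_NS)     # prefix used for <xhtml:link>


def hreflang_urlset(alternates: dict[str, str]) -> str:
    """Build a <urlset> in which every localized URL lists all alternates."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url in alternates.values():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for lang, href in alternates.items():
            ET.SubElement(
                url,
                f"{{{XHTML_NS}}}link",
                {"rel": "alternate", "hreflang": lang, "href": href},
            )
    return ET.tostring(urlset, encoding="unicode")


print(hreflang_urlset({
    "en": "https://prerender.io/react/",
    "de": "https://prerender.io/deutsch/react/",
}))
```

Generating the entries from one mapping per page keeps the alternates reciprocal, which is where hand-written hreflang setups most often go wrong.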

6. Set Your Robot Directives and Robots.txt File Correctly

When traffic drops, one of the most common causes we’ve encountered is an unoptimized robots.txt file blocking resources, pages, or entire folders, or misconfigured on-page robot directives.

Fix robot directives

After website migrations, redesigns, or site architecture changes, developers and web admins commonly miss pages with NoFollow and NoIndex directives in the head section. When you have a NoFollow meta tag on your page, you’re telling Google not to follow any of the links it finds within the page. For example, if this page is the only one linking to subtopic pages, the subtopic pages won’t be discovered by Google.

NoIndex tags, on the other hand, tell Google to leave the page out of its index, so even if crawlers discover the page, they won’t add it to Google’s index. Changing these directives to Follow, Index is a quick way to fix indexation problems and gain more real estate on the SERPs.
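To catch leftover directives after a migration, you can scan each page’s HTML for robots meta tags. The sketch below uses Python’s standard `html.parser`; the class and function names are hypothetical, and it only covers meta tags, not directives sent through HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        name = (attr_map.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attr_map.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def blocking_directives(html: str) -> set[str]:
    """Return the directives on the page that restrict indexing or link following."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    # "none" is shorthand for "noindex, nofollow"
    return finder.directives & {"noindex", "nofollow", "none"}
```

Running this over the pages you expect to rank gives you a short list of URLs to fix first.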

Optimize your robots.txt

Your robots.txt file is the central place to control what bots and user agents can access. This makes it a powerful tool to avoid wasting crawl budget on pages you don’t want crawled or ranking on the SERPs. It’s also the most efficient way to block entire directories. But that’s also the main problem.

Because you can block entire directories at once, a rule added without proper planning can block crucial pages, breaking your site architecture and keeping important pages from being crawled. For example, if you block pages that link to large amounts of content, Google won’t be able to discover those links, thus creating indexation issues and harming your site’s organic traffic.

It’s also common for some webmasters to block resources like CSS and JavaScript files to save crawl budget. Still, without them, there’s no way for search engines to properly render your pages, which can hurt your site’s page experience or get a large number of pages tagged as thin content.

In many cases, large websites can also benefit from blocking unnecessary pages from crawlers, especially to optimize crawl budget.

(Note: It’s also a best practice to add your sitemap to your robots.txt file because bots will always check this file before crawling the website.)

We’ve created an entire robots.txt file optimization guide you can consult for a better implementation.
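Before deploying a robots.txt change, it helps to test it against a list of URLs that must stay crawlable. The sketch below uses Python’s standard `urllib.robotparser`; the rules, the example.com URLs, and the function name are hypothetical. Python’s parser uses simple prefix matching, so it may not mirror Googlebot’s handling of wildcard rules exactly.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that (mistakenly) blocks the assets folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /assets/

Sitemap: https://example.com/sitemap.xml
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs the given user agent is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


must_crawl = [
    "https://example.com/",
    "https://example.com/cart/checkout",
    "https://example.com/assets/app.js",
]
print(blocked_urls(ROBOTS_TXT, must_crawl))
```

In this example the JavaScript file under `/assets/` shows up as blocked, which is exactly the kind of rule that prevents search engines from rendering your pages.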

Optimizing meta descriptions and titles is usually one of the first tasks technical SEOs focus on, but in most cases that’s because it’s an easy fix, not because it’s the most impactful.

In Conclusion

These six tasks will create a solid foundation for your SEO campaigns and increase your chances of ranking higher. Each task will solve many technical SEO issues at once and allow your content to shine!